You probably interact with facial recognition every day without even noticing. Unlocking your phone with a glance, passing through airport security, or even tagging photos on social media all rely on this technology. It has quietly become part of everyday life, yet what happens behind the scenes is far more fascinating.

A camera detects a face, analyzes its unique features, converts them into digital data, and compares that data to stored records. All of this happens in seconds.

But how does a machine pick out one face from millions? Or how does it understand subtle differences between individuals?

Software development companies create and optimize these systems, combining advanced programming and artificial intelligence to make them accurate, fast, and reliable. Let’s break it down step by step.

What Is Face Recognition?

Facial recognition is a type of biometric identification. Think of it as a digital fingerprint that uses your face instead of a fingertip. Early systems from the 1960s relied on people manually measuring facial features. Today, deep learning models handle the work and identify faces with impressive accuracy under ideal conditions.

This is why we see facial recognition being used more and more in places like security, shops, hospitals, and airports. But it makes us wonder: how does a machine really know who you are?

How Does Facial Recognition Work?

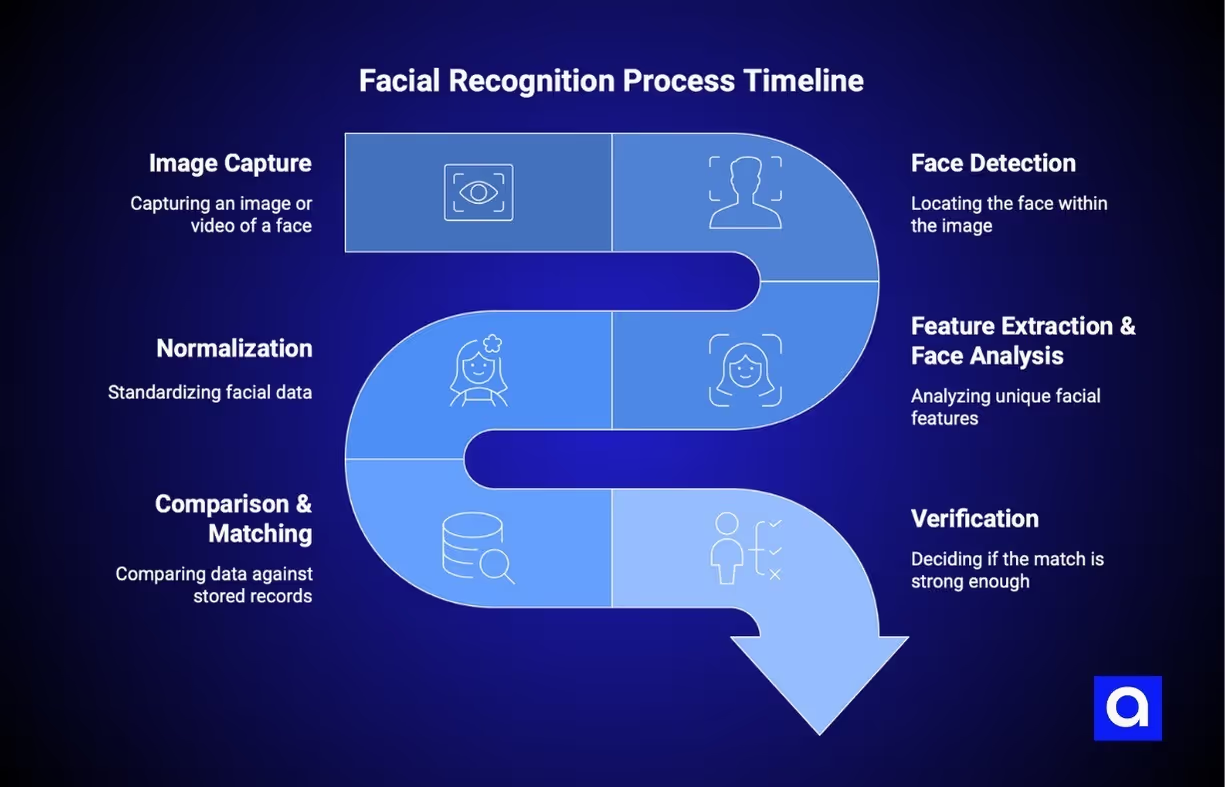

Facial recognition follows a clear sequence of steps. At a high level, the process involves capturing an image, locating a face, analyzing its features, converting those features into data, and comparing that data against stored records.

Artificial intelligence and machine learning power this entire flow. Instead of simply checking for basic elements like eyes or a nose, modern systems learn patterns from massive collections of facial images. Over time, they develop an understanding of what makes one face distinct from another.

Below is a step-by-step look at how the process works in practice.

Step 1: Image Capture

Everything starts with capturing an image or video of a face. This can come from a smartphone camera, a security system, a laptop webcam, or a dedicated biometric scanner.

The quality of this capture plays a major role in accuracy. Clear lighting, a stable image, and a visible face give the system more reliable data to work with. Some advanced systems also capture depth or infrared information. This helps distinguish real faces from photos or masks and improves performance in low-light conditions.

Step 2: Face Detection

Once an image is captured, the system needs to locate the face within it. This step separates the face from the rest of the scene, whether that scene includes cluttered backgrounds, multiple people, or movement.

Modern detection models use deep learning to recognize faces from different angles and under less-than-perfect conditions. When a face is detected, it is isolated so the system can focus only on the facial region during the next steps.

Step 3: Feature Extraction & Face Analysis

After isolating the face, the system analyzes what makes it unique. It looks at the relationships between facial features such as eye spacing, nose shape, jawline structure, and overall proportions.

These details are converted into a numerical representation often called a facial signature. Deep learning models learn which features are most useful for recognition, which allows the system to identify a person even if they change hairstyles, grow facial hair, or wear glasses.

Step 4: Normalization

Before comparison, the system standardizes the facial data. Faces can appear different due to camera angles, facial expressions, distance, or lighting conditions.

Normalization adjusts for these variations by aligning facial features, correcting orientation, and balancing brightness and contrast. This step ensures that the comparison focuses on the face itself rather than how or where the image was taken.
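To make the brightness and contrast part of this step concrete, here is a minimal sketch in plain Python. It assumes a face crop is already available as a small grid of grayscale values; the function name `normalize_patch` and the sample pixel values are made up for illustration, and real systems also align and rotate the face, which is not shown here.

```python
from statistics import fmean, pstdev

def normalize_patch(pixels):
    """Rescale a grayscale patch to zero mean and unit variance."""
    flat = [value for row in pixels for value in row]
    mean = fmean(flat)
    spread = pstdev(flat)
    if spread == 0:
        # A perfectly flat patch carries no contrast information.
        return [[0.0 for _ in row] for row in pixels]
    return [[(value - mean) / spread for value in row] for row in pixels]

# Hypothetical data: the same pattern photographed dim and bright.
dim_photo = [[10, 20], [30, 40]]
bright_photo = [[110, 140], [170, 200]]  # 3 * dim_photo + 80

normalized_dim = normalize_patch(dim_photo)
normalized_bright = normalize_patch(bright_photo)
```

After this adjustment the two patches come out essentially identical, so a later comparison sees the pattern rather than the lighting.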

Step 5: Comparison & Matching

The normalized facial data is then compared against stored records in a database. The system calculates a similarity score that reflects how closely two facial signatures match.

In some cases, the comparison is one-to-one, such as unlocking a device. In others, it involves searching through a larger database to find potential matches. Speed matters here, and modern systems can process millions of comparisons in seconds.
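One common way to compute a similarity score is cosine similarity between two facial signatures. The sketch below assumes signatures are short lists of numbers (real ones usually have hundreds of dimensions), and the names `identify`, `gallery`, and the sample values are hypothetical. It shows both the one-to-one case and a search across a small database.

```python
import math

def cosine_similarity(a, b):
    """Similarity in [-1, 1]; values near 1 mean the signatures point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(probe, gallery, top_k=3):
    """One-to-many search: rank every stored signature against the probe."""
    scored = [(name, cosine_similarity(probe, signature))
              for name, signature in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]

# Hypothetical enrolled signatures.
gallery = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
    "carol": [0.4, 0.4, 0.8],
}
probe = [0.85, 0.15, 0.35]

# One-to-one check, as in device unlocking.
one_to_one_score = cosine_similarity(probe, gallery["alice"])

# One-to-many search across the whole gallery.
best_matches = identify(probe, gallery, top_k=2)
```

A linear scan like this is fine for a handful of records. Large deployments typically rely on approximate nearest-neighbor indexes to keep searches fast.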

Step 6: Verification

The final step is deciding whether the match is strong enough to be accepted. The system uses confidence thresholds to determine whether a face should be verified, rejected, or flagged for further review.
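The accept, review, or reject decision can be sketched as two cut-offs on the similarity score. The threshold values below are illustrative assumptions, not recommended settings; in practice they are tuned to how costly a false accept is compared with a false reject.

```python
def decide(score, accept_at=0.80, review_at=0.60):
    """Map a similarity score to a verification outcome."""
    if review_at > accept_at:
        raise ValueError("review_at must not exceed accept_at")
    if score >= accept_at:
        return "verified"
    if score >= review_at:
        return "review"  # borderline: hand off to a person or a second factor
    return "rejected"

outcomes = [decide(score) for score in (0.93, 0.71, 0.42)]
```

Raising `accept_at` makes false accepts rarer but turns away more genuine users, which is why higher-risk systems pair a strict threshold with a fallback method.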

In higher-risk scenarios, facial recognition is often combined with other security methods. This layered approach helps reduce errors and improve overall reliability.

Different Types of Facial Recognition

When we talk about facial recognition, it helps to remember that not all systems work the same way. The way a face is captured and studied can change how accurate the system is, how much it costs, and where you usually see it used. As users, we often interact with these systems without even realizing which type is behind the screen.

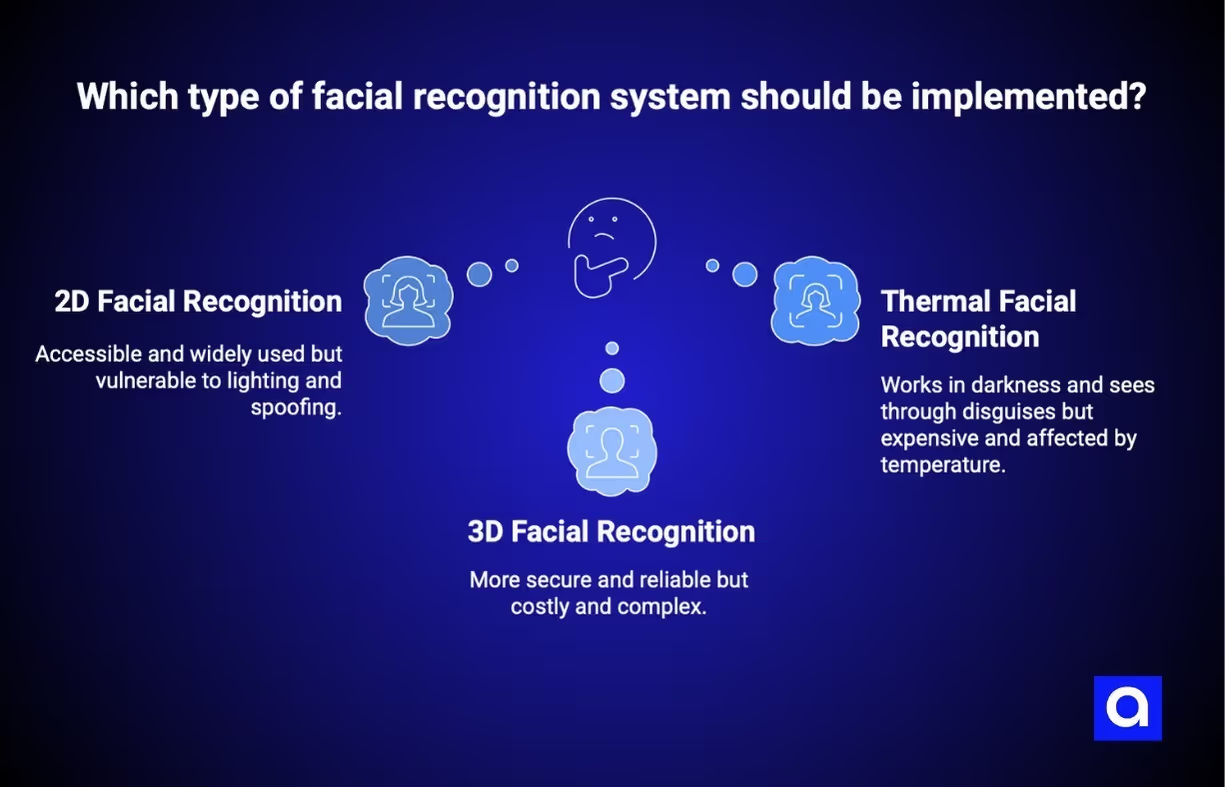

2D Facial Recognition

2D facial recognition is the most widely used and the most familiar. It works with standard flat images captured by regular cameras, the same type found in phones, laptops, and security systems.

Its biggest advantage is accessibility, because it only requires a camera. This makes it ideal for everyday use cases like photo tagging, basic device unlocking, and monitoring public spaces.

Lighting conditions have a major impact, and changes in angle or facial orientation can reduce reliability. A person turning their head or standing in uneven light gives the system less information to work with. Basic 2D systems are also more vulnerable to spoofing, since a photograph can sometimes be enough to fool them without additional protections.

3D Facial Recognition

3D facial recognition adds depth information, allowing the system to understand the actual shape of a face rather than just its appearance. Instead of analyzing a flat image, it builds a three-dimensional map of facial contours.

This depth data can be captured using structured light, stereo cameras, or time-of-flight sensors. Structured light projects a known pattern onto the face and measures how it deforms, stereo cameras compare two slightly different viewpoints, and time-of-flight sensors time how long emitted light takes to return. The result is a much richer facial model that works reliably across different lighting conditions and angles.
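The distance calculations behind two of these methods are short enough to write out. This is a simplified sketch using the standard textbook formulas; the camera parameters in the example are invented, and real sensors add calibration and noise handling on top.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth from a stereo pair: focal length times baseline over disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px

def tof_distance_m(round_trip_s):
    """Time of flight: light travels to the face and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2

# Hypothetical stereo rig: 800 px focal length, cameras 6 cm apart,
# and a facial point that shifts 96 px between the two images.
nose_depth = stereo_depth_m(800, 0.06, 96)   # about 0.5 m

# A light pulse that returns after 4 nanoseconds.
tof_depth = tof_distance_m(4e-9)             # about 0.6 m
```

Repeating this for thousands of points across the face produces the three-dimensional map described above.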

Because it measures physical structure, 3D recognition is far harder to deceive. Flat images and most masks do not provide the depth information the system expects. This makes it well suited for secure authentication, even in low light or complete darkness.

The downside is cost and complexity. Specialized sensors and more processing power are required, which limits where 3D systems are practical. While the technology has become more affordable over time, it is still used mainly in higher security or premium applications.

Thermal (Infrared) Facial Recognition

Thermal facial recognition takes a different path by focusing on heat rather than visible features. These systems detect infrared radiation emitted by the face, creating a thermal pattern based on underlying blood vessels and tissue.

One major advantage is that thermal systems work in total darkness and can sometimes see past surface-level disguises like makeup or masks. This makes them useful in environments where lighting is unpredictable or where visible cameras are less effective.

Thermal recognition gained attention during the pandemic, as it allowed for identity verification and temperature screening at the same time. Airports, hospitals, and controlled facilities were among the most common adopters.

However, thermal patterns can fluctuate. Physical activity, stress, and surrounding temperature can all influence heat distribution on the face. Thermal cameras are also expensive, which limits widespread use. As a result, these systems perform best in controlled settings where conditions remain stable.

Types of Facial Recognition Algorithms

Every facial recognition system relies on an algorithm that decides how a face is analyzed and compared. Over time, these algorithms have changed a lot. Early systems relied on simple math and geometry. Modern ones learn directly from massive amounts of data, much closer to how humans recognize faces.

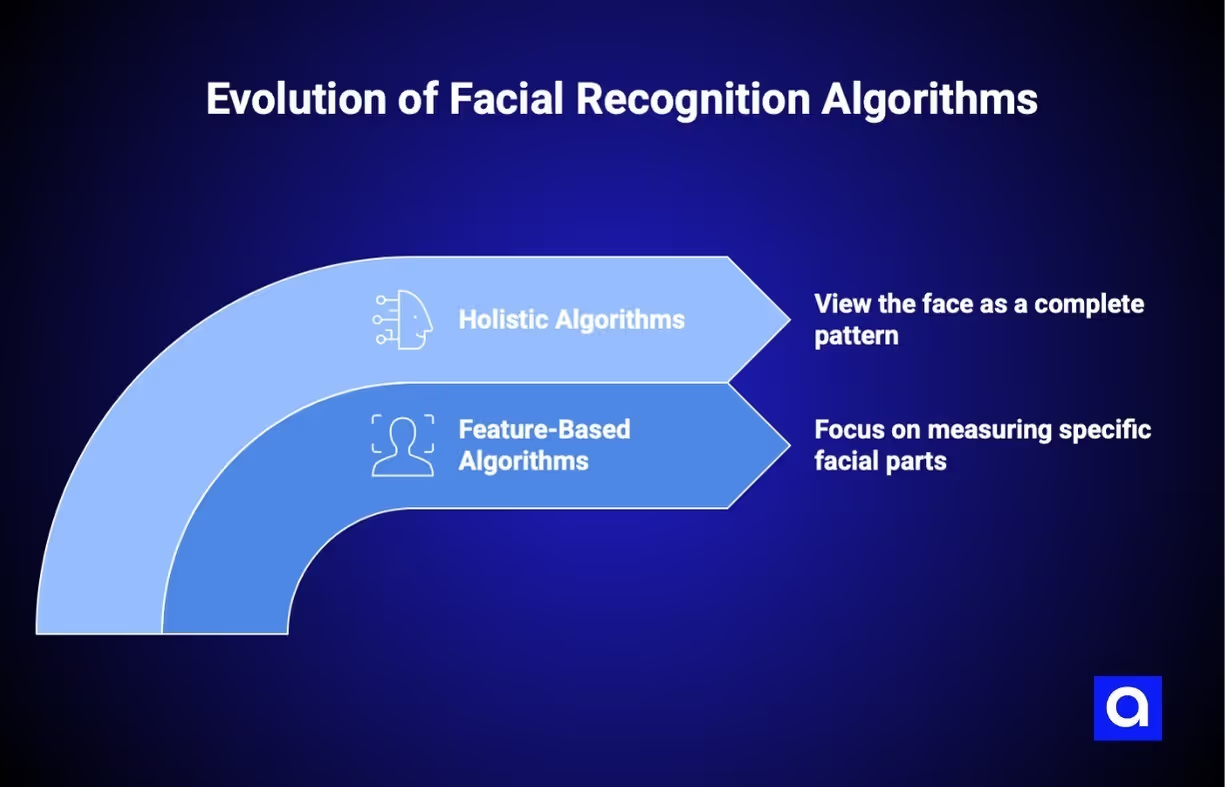

Feature-Based Algorithms

Feature-based algorithms were the foundation of early facial recognition systems. They focus on measuring specific parts of a face and using those measurements to tell people apart. While they are no longer the most accurate option, they still appear in systems where speed and low computing power matter.

Local Binary Patterns look at texture. The algorithm compares each pixel to the ones around it and turns those comparisons into patterns. This makes it good at capturing small details like skin texture and shadows, while remaining fast and lightweight.
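Here is a small sketch of that pixel comparison for a single 3x3 neighborhood. The bit ordering (clockwise from the top-left neighbor) is one convention among several, and the sample patch is made up.

```python
def lbp_code(patch):
    """8-bit Local Binary Pattern code for the centre pixel of a 3x3 patch."""
    center = patch[1][1]
    # Neighbours read clockwise, starting at the top-left corner.
    neighbours = [
        patch[0][0], patch[0][1], patch[0][2],
        patch[1][2], patch[2][2], patch[2][1],
        patch[2][0], patch[1][0],
    ]
    code = 0
    for bit, value in enumerate(neighbours):
        if value >= center:
            code |= 1 << bit
    return code

patch = [
    [6, 5, 2],
    [7, 6, 1],
    [9, 8, 7],
]
brighter_patch = [[value + 50 for value in row] for row in patch]

code = lbp_code(patch)                    # 241
brighter_code = lbp_code(brighter_patch)  # also 241
```

Because only the ordering of pixel values matters, uniformly brightening the patch leaves the code unchanged. A full system computes a code for every pixel and compares histograms of those codes across regions of the face.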

Histogram of Oriented Gradients focuses on edges and shape. It measures the direction and strength of brightness changes across small regions of an image, which helps identify outlines like the jawline, nose, and eye sockets. Although it was originally designed for detecting people in images, it turned out to be useful for finding and recognizing faces as well.
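The core of the idea fits in a few lines: compute a gradient at each pixel, then add its strength to a bin chosen by its direction. This is a deliberately simplified sketch for one small cell. It skips the vote interpolation and block normalization used in full implementations, and the cell values are invented.

```python
import math

def cell_histogram(cell, bins=9):
    """Unsigned gradient-orientation histogram for one grayscale cell."""
    histogram = [0.0] * bins
    bin_width = 180.0 / bins
    rows, cols = len(cell), len(cell[0])
    # Border pixels are skipped because they lack a neighbour on one side.
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            histogram[int(angle // bin_width) % bins] += magnitude
    return histogram

# A vertical edge: dark on the left, bright on the right.
vertical_edge = [
    [0, 0, 100, 100],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
]
edge_histogram = cell_histogram(vertical_edge)
```

All of the gradient energy lands in the first bin, which is how the descriptor records "there is a strong vertical edge here" without storing the pixels themselves.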

Scale-Invariant Feature Transform works by identifying key points in an image that stay consistent even when the face appears at a different size or angle, or under different lighting. This allows the system to match faces even when photos are taken from different perspectives.

The biggest advantage of these methods is efficiency. They do not need large datasets or powerful hardware. Older smartphones and basic security systems can still run them in real time.

Their limitation is accuracy. These algorithms struggle with real-world variation. Changes in facial expression, aging, lighting, or partial obstructions can confuse them. They can handle simple scenarios, but they fall short when conditions become unpredictable.

Holistic Algorithms

Holistic algorithms take a different approach. Instead of measuring individual features, they look at the face as a complete pattern. This group includes both early statistical techniques and today’s deep learning models.

Principal Component Analysis was one of the earliest holistic methods. It treats each face as a combination of values and finds the patterns that vary the most across many faces. The well-known Eigenfaces method used this idea to represent faces in a compressed but meaningful way.
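To show what "finding the patterns that vary the most" means, here is a toy sketch that finds the single strongest direction of variation using power iteration. It treats each face as a tiny three-value vector, which is an assumption made purely for readability; real Eigenfaces work on thousands of pixels and keep many components, usually computed with a linear algebra library.

```python
import math

def top_component(samples, iterations=100):
    """Mean and leading principal component, found by power iteration."""
    n, dim = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(dim)]
    centered = [[s[j] - mean[j] for j in range(dim)] for s in samples]
    # Starting guess; it must not be orthogonal to the true component.
    vector = [1.0] * dim
    for _ in range(iterations):
        # Covariance times vector, computed as X^T (X v) / n.
        projections = [sum(c[j] * vector[j] for j in range(dim)) for c in centered]
        updated = [sum(projections[i] * centered[i][j] for i in range(n)) / n
                   for j in range(dim)]
        norm = math.sqrt(sum(v * v for v in updated))
        if norm == 0:
            break  # no variation left to find
        vector = [v / norm for v in updated]
    return mean, vector

def project(face, mean, component):
    """Compress a face to one number: its coordinate along the component."""
    return sum((f - m) * c for f, m, c in zip(face, mean, component))

# Hypothetical three-pixel "faces" that all vary along one direction.
faces = [[1, 2, 2], [2, 4, 4], [3, 6, 6], [4, 8, 8]]
mean_face, component = top_component(faces)
coordinate = project([4, 8, 8], mean_face, component)
```

Each face is then described by its coordinates along a handful of such components instead of by every pixel, which is the compression the Eigenfaces method relies on.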

Linear Discriminant Analysis builds on this by focusing on separation. It looks for patterns that best distinguish one person from another while reducing differences between photos of the same person.

The biggest leap forward came with deep learning. Convolutional Neural Networks learn what matters by training on millions of faces. Instead of being told which features to measure, they discover those features on their own. Models like VGGFace and FaceNet, along with face models built on general-purpose architectures such as ResNet, learn representations that capture the structure and identity of a face at a very deep level.

FaceNet popularized training a network to output embeddings directly. Each face is converted into a numerical vector, and training pulls vectors from the same person together while pushing different people far apart. DeepFace, published a year earlier, had already shown that deep networks could come close to human-level performance on benchmark photos, and later models exceeded it under the right conditions.
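The training signal FaceNet used to shape these vectors is called triplet loss. The sketch below shows the calculation for one triplet: an anchor face, another photo of the same person, and a photo of someone else. The two-dimensional embeddings are invented for illustration, and the margin of 0.2 follows the value reported for FaceNet.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the negative is farther than the positive by at least the margin."""
    gap = squared_distance(anchor, positive) - squared_distance(anchor, negative)
    return max(0.0, gap + margin)

anchor = [0.0, 1.0]
same_person = [0.1, 0.9]
easy_stranger = [1.0, 0.0]   # already far from the anchor
hard_stranger = [0.2, 0.8]   # a lookalike sitting close to the anchor

easy_loss = triplet_loss(anchor, same_person, easy_stranger)   # 0.0
hard_loss = triplet_loss(anchor, same_person, hard_stranger)   # about 0.14
```

Only the lookalike produces a loss, so training effort concentrates on the hard cases where two different people sit too close together.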

More recent methods like ArcFace improved training techniques, making these systems even more reliable. Today, deep learning-based algorithms power almost all modern facial recognition systems, delivering the accuracy levels that allow the technology to work at scale. Generative AI development companies continue to innovate in this space, refining these models and creating more advanced tools for real-world facial recognition applications.

Key Technologies

Artificial Intelligence (AI) & Deep Learning

Modern facial recognition relies heavily on AI development. Most systems use Convolutional Neural Networks, or CNNs, which analyze images in layers. Early layers detect simple edges and textures while later layers recognize full facial structures.
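What an early layer does can be illustrated with a single hand-written filter. In a trained network the filter values are learned rather than chosen; the Sobel kernel used here is a classic edge detector standing in for a learned one, and the tiny images are made up.

```python
def convolve2d(image, kernel):
    """Slide a kernel over an image (no padding), as a CNN layer does."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j]
                for i in range(k_rows) for j in range(k_cols))
            for x in range(out_cols)
        ]
        for y in range(out_rows)
    ]

# Responds strongly where brightness changes from left to right.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edge_image = [[0, 0, 10, 10] for _ in range(4)]
flat_image = [[10, 10, 10, 10] for _ in range(4)]

edge_response = convolve2d(edge_image, SOBEL_X)  # [[40, 40], [40, 40]]
flat_response = convolve2d(flat_image, SOBEL_X)  # [[0, 0], [0, 0]]
```

Later layers apply the same operation to the outputs of earlier ones, which is how simple edge responses build up into detectors for eyes, noses, and whole faces.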

These networks learn patterns from millions of labeled images, gradually understanding what makes each face unique. Transfer learning allows developers to adapt pre-trained models for new applications without massive datasets.

Accuracy can be very high under ideal conditions, but real-world factors such as poor lighting, unusual angles, or low-resolution images can still affect performance. Researchers are constantly testing new techniques, including transformer-based models and self-supervised learning, to improve reliability in everyday use.

Biometric Data & Pattern Analysis

Facial recognition is part of the larger field of biometrics, which also includes fingerprints, iris scans, and voice patterns. Faces are practical because they can be captured at a distance without any physical contact or active participation.

Recognition systems identify patterns that are distinctive across people, reasonably consistent over time, and measurable by a computer. While these systems are generally accurate, factors such as lighting, movement, or aging can cause errors. This continues to drive ongoing research and innovation in the technology.

Facial Recognition Benefits

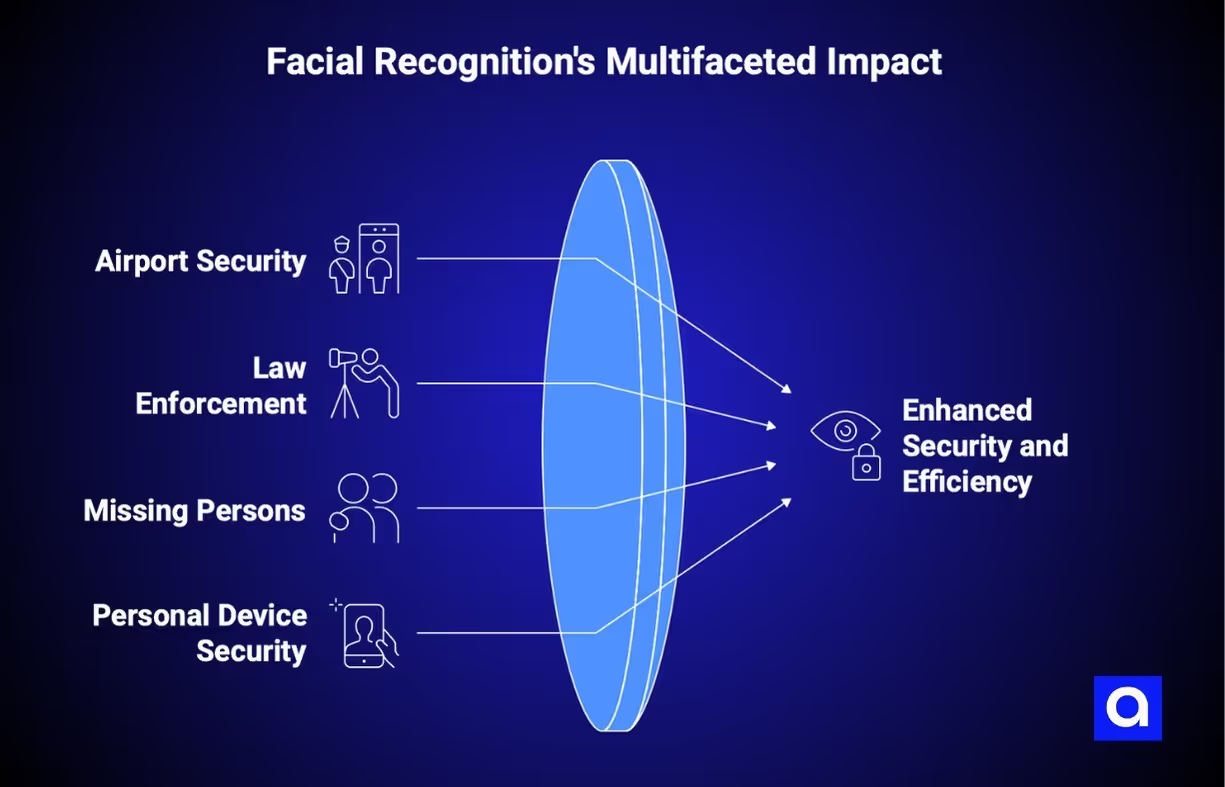

Facial recognition has sparked debate, but it also provides clear benefits across many areas. Understanding these benefits helps explain why the technology is being adopted so widely.

Enhanced Airport & Border Security

Airports are one of the most visible places where facial recognition is in use. It speeds up passenger processing and helps prevent fraudulent travel. In the United States, facial recognition has been deployed at over 30 airports, processing more than 100 million travelers. The system compares travelers’ faces with passport photos and visa images, verifying identity without requiring physical documents.

This technology reduces wait times at security checkpoints and immigration, allowing airports to handle more passengers efficiently. At the same time, it can detect travelers attempting to use fake documents or enter under false identities. International airports such as Changi in Singapore, Dubai International, and several European hubs have adopted similar systems for check-in and boarding, making facial recognition a standard part of modern air travel. These systems operate as examples of Artificial Narrow Intelligence, or ANI, designed to perform specific tasks like identification with high accuracy.

Criminal Identification & Law Enforcement Support

Law enforcement agencies increasingly use facial recognition to support investigations. The technology can help identify suspects, locate fugitives, and solve cold cases. In the United States, more than 20 states now use facial recognition for criminal investigations. The FBI’s Next Generation Identification system alone contains over 30 million criminal photos, creating a large searchable database.

Investigators can use surveillance footage to generate facial recognition searches, returning potential matches to guide follow-up work. The technology has helped solve cold cases, track fugitives, and verify identities for people providing false information to authorities. While concerns about civil liberties and wrongful identification remain important, the investigative value in the right context is significant.

Finding Missing Persons

One of the most impactful uses of facial recognition is locating missing people. The technology can search through vast numbers of photos and videos to help find individuals who might otherwise be impossible to locate. Missing children, adults with dementia, and victims of human trafficking have been identified using facial recognition.

Organizations like the National Center for Missing and Exploited Children have used it to identify children in exploitative images, leading to rescues. Age-progression algorithms can estimate what a person might look like years later, allowing searches against current databases. Public photos on social media also provide opportunities for identification when legal authority allows, creating a search capability that did not exist before.

Improved Security for Personal Devices

Most people experience facial recognition daily through smartphones and personal devices. Systems like Face ID and Windows Hello let users unlock devices and authorize transactions with a glance.

The convenience is immediate. Users do not need to remember passwords or type PINs, which increases the likelihood they will actually secure their devices. Modern systems also provide strong security. For example, Apple states that the chance of a random person unlocking a device with Face ID is less than 1 in 1,000,000, compared with 1 in 50,000 for its Touch ID fingerprint sensor.

Facial recognition is also built into software for banking transactions, purchase verification, and access to sensitive applications. In each case, it offers a combination of security and convenience that is difficult to match with traditional methods.

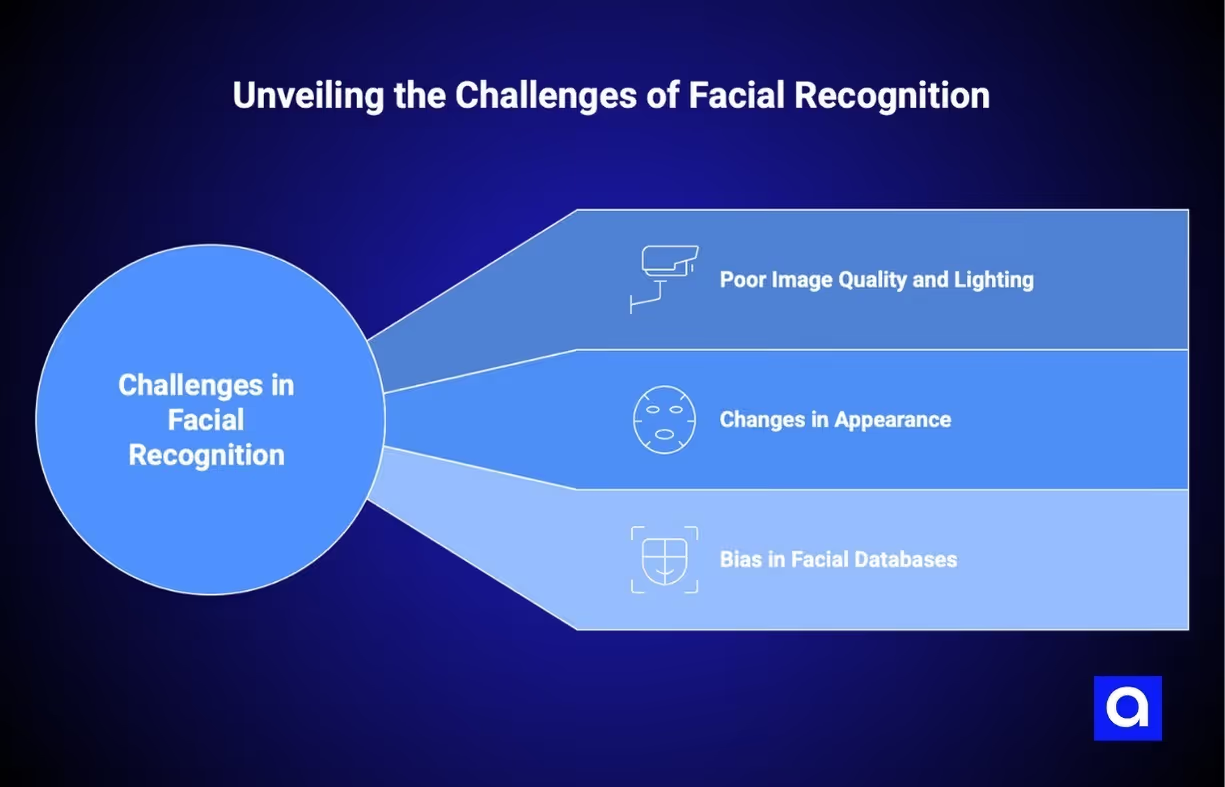

Challenges in Facial Recognition

Facial recognition is powerful, but it has real limitations that affect both reliability and fairness. Understanding these challenges is essential for anyone using or affected by these systems, even as many industries benefit from AI to improve efficiency, security, and decision-making.

Poor Image Quality and Lighting

The accuracy of facial recognition depends heavily on image quality. Low resolution, poor lighting, motion blur, and awkward angles can all reduce performance. For example, security camera footage from a robbery might be captured from a high angle, in dim light, with moving subjects and low resolution. Even the best algorithms struggle under these conditions.

Distance also matters. A face captured from across a parking lot contains far less detail than one captured up close. Modern systems handle difficult conditions better than before, but a system that performs near perfectly on high-quality frontal photos may drop to 80% accuracy or lower on challenging footage. Results from poor-quality images should be treated as leads rather than definitive identifications, with human verification whenever possible.

Changes in Appearance

Faces are not static. People grow older, change hairstyles, gain or lose weight, and may wear makeup or undergo cosmetic procedures. Facial expressions add another layer of complexity, as a smiling face looks different from a neutral or shouting face.

Aging is especially challenging. Photos from a driver’s license or passport might be years or decades old, and algorithms must bridge that gap. The COVID-19 pandemic highlighted another issue: masks significantly reduced recognition accuracy. In 2020 NIST tests of algorithms built before the pandemic, error rates on masked faces ranged from about 5% to as high as 50%. Systems trained specifically on masked faces have improved, but the episode showed how quickly a change in everyday conditions can make models less reliable. Intentional disguises, such as hats, glasses, or prosthetics, also create ongoing challenges.

Bias in Facial Databases

One of the most serious challenges is bias. Facial recognition does not perform equally well across all demographic groups. A 2019 NIST study found that many algorithms had higher false positive rates for Asian and African American faces compared with Caucasian faces, often by a factor of 10 to 100 depending on the algorithm. Women, older adults, and people with darker skin tones also experience higher error rates.

Bias often stems from training data. Algorithms trained primarily on photos of light-skinned adults learn to recognize that group more accurately, leaving underrepresented groups at a disadvantage. The consequences are serious: false matches can lead to wrongful arrests, denied services, and other harms.

The industry is actively addressing these issues through more diverse datasets, fairness-aware training, and demographic-specific testing. While progress is being made, the problem is not fully solved, and organizations using facial recognition must evaluate how well their systems perform across different populations.
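Demographic-specific testing can start with something as simple as computing the false match rate separately for each group. The sketch below assumes you have comparison results labeled with a group, a similarity score, and whether the two photos really show the same person. The group names, scores, and threshold are entirely made up.

```python
from collections import defaultdict

def false_match_rate_by_group(trials, threshold):
    """Share of different-person comparisons wrongly accepted, per group."""
    impostor_trials = defaultdict(int)
    false_matches = defaultdict(int)
    for group, score, same_person in trials:
        if same_person:
            continue  # genuine pairs cannot produce a false match
        impostor_trials[group] += 1
        if score >= threshold:
            false_matches[group] += 1
    return {group: false_matches[group] / impostor_trials[group]
            for group in impostor_trials}

# Hypothetical results: (group, similarity score, same person?)
trials = [
    ("group_a", 0.91, True),
    ("group_a", 0.30, False),
    ("group_a", 0.42, False),
    ("group_a", 0.55, False),
    ("group_a", 0.72, False),
    ("group_b", 0.88, True),
    ("group_b", 0.35, False),
    ("group_b", 0.74, False),
    ("group_b", 0.81, False),
    ("group_b", 0.50, False),
]

rates = false_match_rate_by_group(trials, threshold=0.70)
# {'group_a': 0.25, 'group_b': 0.5}
```

A gap like the one in this toy data is the kind of signal that should trigger a closer look at training data and thresholds before deployment.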

Privacy and Ethical Concerns

Facial recognition is powerful, but it also raises big questions about privacy, fairness, and how the technology is used. Anyone working with or encountering it should understand these concerns.

Privacy and Consent

Facial recognition can identify people without their knowledge. Unlike fingerprints, a face is on display whenever you are in public. Photos from social media, security cameras, or street photography can be used to track people, and some companies collect images without asking for permission. Rules vary by region. In the European Union, facial data used to identify a person is treated as sensitive data under the GDPR and usually requires explicit consent, while some U.S. states and cities have strict limits on its use.

Mass Surveillance Risks

The technology can track people on a massive scale. In some countries, cameras monitor citizens constantly. Even in democracies, this raises worries about free speech and personal safety, because automated systems make it feasible to follow large numbers of people across many locations.

Algorithmic Bias and Discrimination

Facial recognition can make mistakes that affect some groups more than others. Systems trained on unbalanced data can misidentify people with darker skin tones or people from underrepresented communities. This can cause real harm, from humiliation to wrongful arrests. Experts recommend using facial recognition only as a tool, not as the only evidence.

Transparency and Accountability

Many facial recognition systems are like “black boxes.” They can match faces accurately but often cannot explain how they reach their decisions. This makes accountability harder when mistakes happen. Researchers are working on explainable AI, but clear, fully transparent systems are rare.

Data Security

Facial data cannot be changed like a password. Breaches put people at long-term risk. Past incidents have exposed millions of facial records, which could be misused for identity theft or to bypass security systems.

Errors and False Matches

Even the best systems make mistakes. A false match can be a minor inconvenience or a serious problem, like a wrongful arrest. When a database holds millions of faces, even a very small error rate can produce many false matches. Human oversight and extra verification are essential to avoid harm.
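A quick back-of-the-envelope calculation shows how database size multiplies error rates. The figures below are hypothetical, and the sketch assumes each comparison is an independent chance of error, which real systems only approximate.

```python
import math

def expected_false_matches(gallery_size, false_match_rate):
    """Average number of wrong candidates returned by one search."""
    return gallery_size * false_match_rate

def chance_of_any_false_match(gallery_size, false_match_rate):
    """1 - (1 - rate) ** size, computed in a numerically stable way."""
    return -math.expm1(gallery_size * math.log1p(-false_match_rate))

# Hypothetical system: a one-in-a-million false match rate per comparison,
# searched against a database of ten million faces.
per_search = expected_false_matches(10_000_000, 1e-6)      # about 10
any_error = chance_of_any_false_match(10_000_000, 1e-6)    # above 0.9999
```

An error rate that sounds negligible for unlocking one phone still yields around ten wrong candidates per search at this scale, which is why results are better treated as leads for a human to check.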

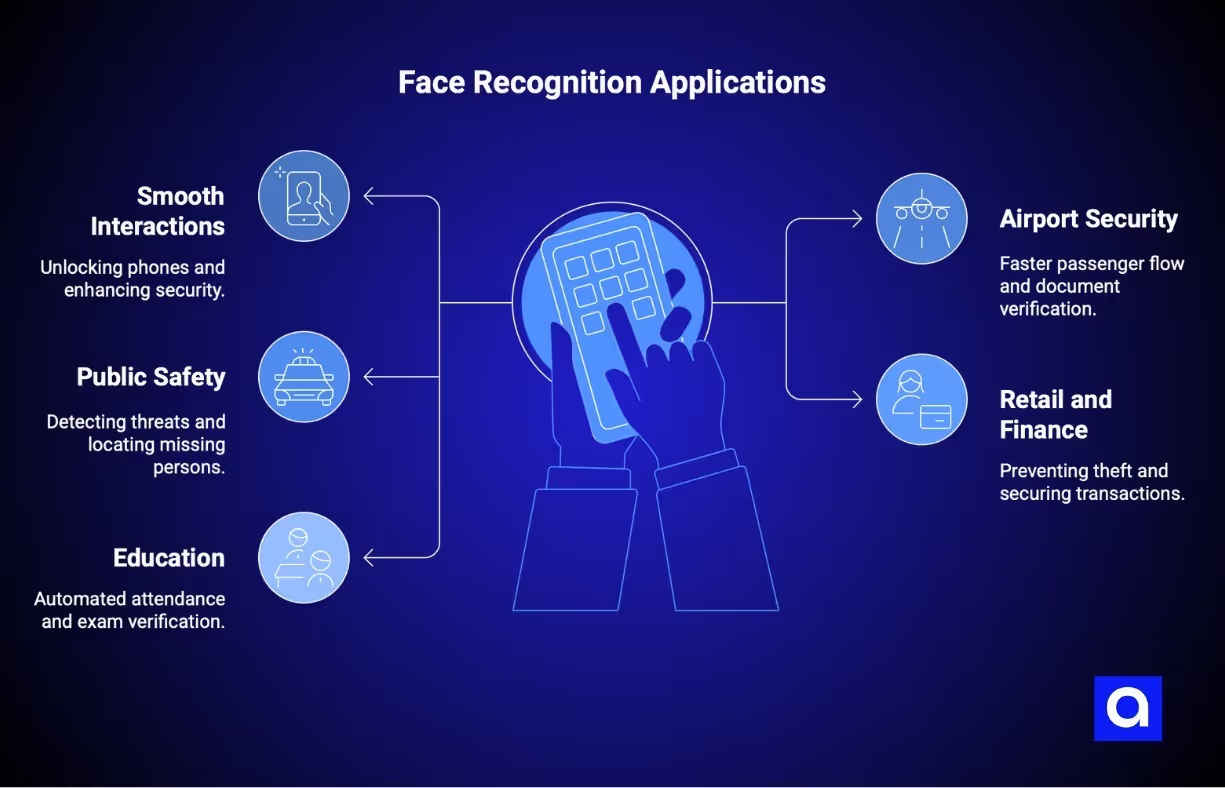

Face Recognition in Real-World Applications

Despite the challenges and concerns, facial recognition sees widespread deployment across industries. Here's how the technology gets used in practice.

- Smooth Everyday Interactions

Imagine unlocking your phone in a second without typing a password. Face ID, Windows Hello, and Android facial authentication make this possible. Beyond convenience, these systems enhance security, letting people use stronger protections they might have avoided otherwise.

- Airports: Speed and Security Combined

At many airports, your face is effectively your boarding pass. Cameras at check-in, security checkpoints, and gates verify your identity, reducing wait times while preventing travelers from using fake documents. Changi Airport in Singapore and Dubai International have successfully integrated these systems into daily operations, making passenger flow faster and more secure.

- Keeping Crowds and Communities Safe

In law enforcement and public venues, facial recognition can detect known threats or locate missing persons. Some police departments scan live crowds to flag wanted individuals, though this raises concerns about continuous surveillance. Healthcare software and senior care facilities also use facial recognition to track patients and prevent accidents, improving safety without intrusive monitoring.

- Retail and Financial Services

Retailers use the technology to prevent theft and understand customer behavior, sometimes offering personalized experiences for returning shoppers. Banks apply facial recognition to secure transactions and verify identities at ATMs or in mobile apps, combining convenience with robust security.

- Classrooms and Campuses

Some schools experiment with automated attendance tracking and exam verification. While these systems save time, they also spark discussions about student privacy and ethical use.

What's Next for Facial Recognition

Facial recognition is evolving quickly, driven by new technologies and societal pressures. Vision Transformers and other AI innovations promise greater accuracy and robustness. Edge computing allows processing on devices instead of the cloud, improving privacy and reducing delays.

Future systems may combine multiple biometrics, such as faces, irises, voices, and gait, for stronger verification. Regulators are tightening rules, with laws like the European Union AI Act shaping how the technology can be deployed. Researchers are also focusing on fairness, aiming for consistent accuracy across demographics.

At Azumo, we help organizations navigate these changes by providing AI development services, custom software development, and digital solutions tailored to modern security and authentication needs. Our expertise allows companies to implement facial recognition safely and efficiently while addressing privacy, fairness, and scalability.

The debate over where and how facial recognition should be used will continue. The technology can enhance security, streamline everyday tasks, and support law enforcement, but it also raises concerns about surveillance, discrimination, and privacy. How it impacts society will depend on the choices made in its deployment and regulation, and Azumo is here to guide businesses through these decisions with expert solutions. Contact us today to start your journey.
