
You ask your voice assistant to set a morning alarm, and Netflix predicts the next show you will watch. Both of these everyday interactions are powered by Artificial Narrow Intelligence (ANI), the type of AI that actually exists today.

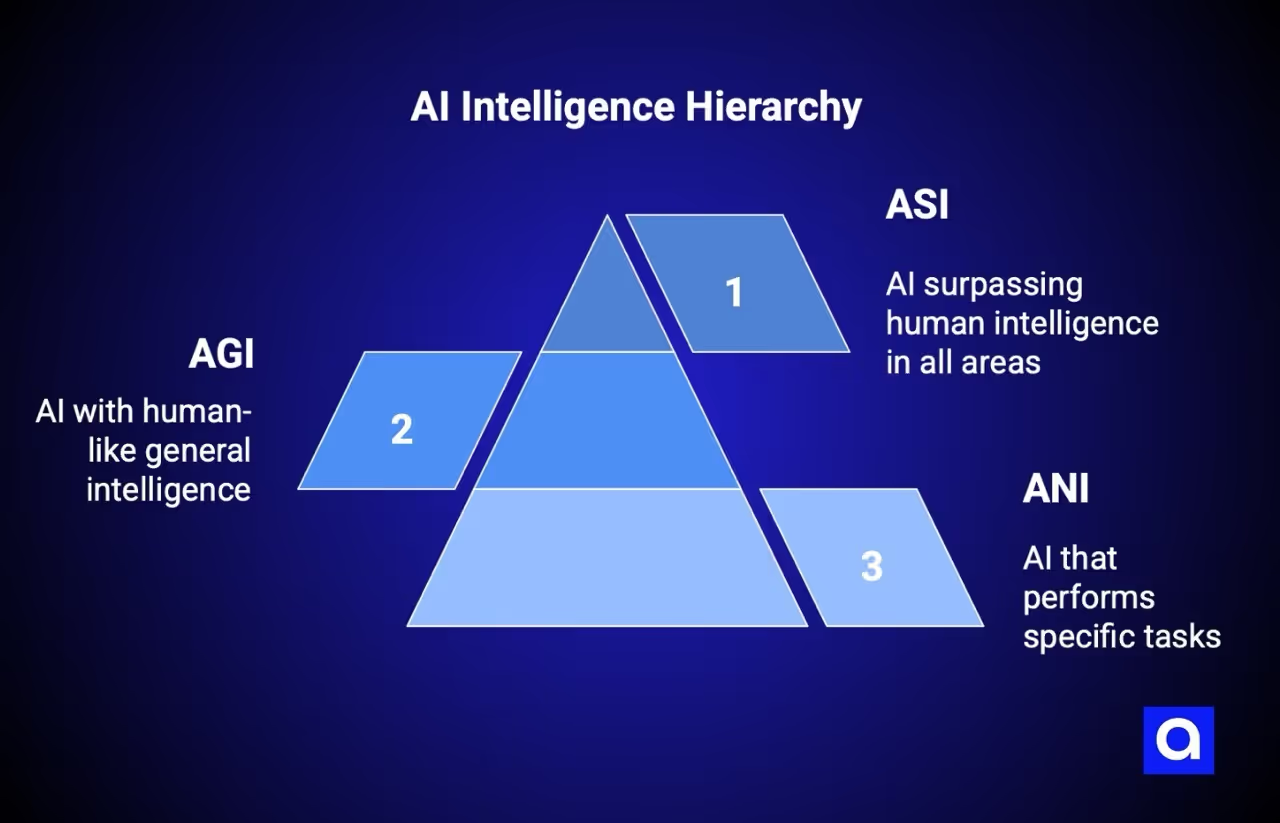

There is more to AI than ANI. Artificial General Intelligence, or AGI, is AI that could learn and think like a human across many tasks. Beyond that is Artificial Superintelligence, or ASI, a concept for AI that could be better than humans at almost everything.

For this guide, we researched top AI labs and industry reports to understand the current state of AI and what comes next.

Knowing the differences between ANI, AGI, and ASI will help you make better-informed decisions and understand how society can adapt as AI becomes more powerful.

Whether you are a developer, a business leader, or simply curious about AI, understanding this spectrum is important.

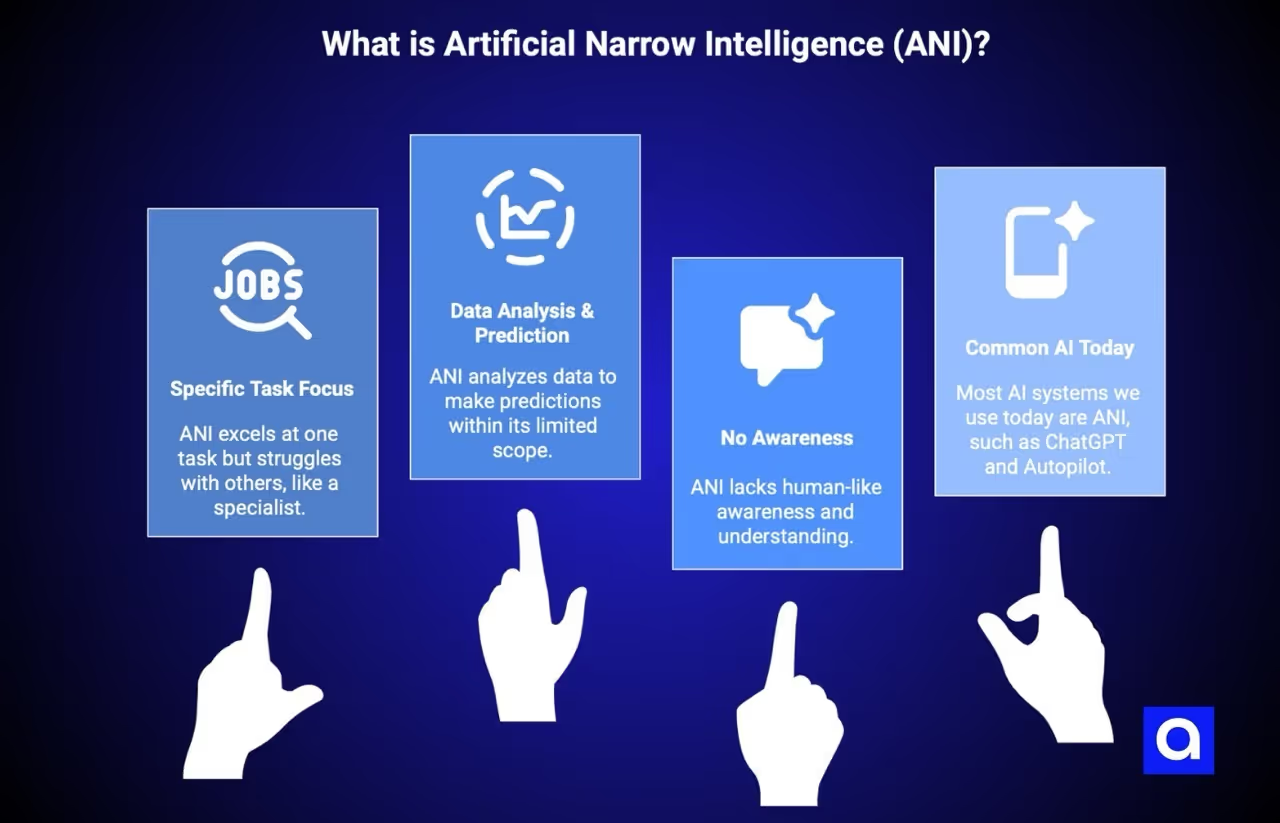

What Makes Artificial Narrow Intelligence (ANI) Different from Other AI?

What Is ANI?

Artificial Narrow Intelligence, or ANI, sometimes called Weak AI, is made for specific tasks and can't do anything outside its set purpose. You can think of ANI as a specialist who is excellent at one thing but struggles with anything else.

ANI works by analyzing data, spotting patterns, and making predictions within a limited area. It does not have awareness or understanding like a human; instead, it applies rules and patterns learned from data to complete its tasks. Because ANI depends entirely on the quality of its input, strong data engineering is critical: poorly structured or biased data directly limits how accurate and reliable these systems can be.

Almost every AI you use today is ANI. ChatGPT, Tesla's Autopilot, and TikTok’s recommendation system are all examples. They can perform impressive tasks, but each one is built for a specific domain and can't transfer its skills to unrelated areas.

What Are The Learning Capabilities of ANI?

ANI systems fall into two main categories, depending on how they use past data:

Reactive AI is the simplest form. These systems do not remember past interactions. They respond only to the current input, like talking to someone who forgets everything immediately.

Limited Memory AI is more advanced and powers most modern AI. These systems can store and use past data to improve their results. For example, a phone learns to recognize your face more accurately, and recommendation engines get better at predicting your preferences.
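To make the distinction concrete, here is a minimal, hypothetical sketch of the two categories applied to a toy "what genre should I suggest next?" task. The class names, the window size, and the task itself are illustrative assumptions, not how any real assistant or streaming service works. The reactive version maps each input straight to an output, while the limited-memory version keeps a bounded history and uses it to adapt.

```python
from collections import Counter, deque


class ReactiveRecommender:
    """Reactive AI: the output depends only on the current input."""

    def recommend(self, current_genre: str) -> str:
        # No stored state: the same input always produces the same output.
        return current_genre


class LimitedMemoryRecommender:
    """Limited Memory AI: keeps a bounded window of past inputs."""

    def __init__(self, window: int = 5) -> None:
        # A deque with maxlen discards the oldest item once it is full.
        self.history = deque(maxlen=window)

    def recommend(self, current_genre: str) -> str:
        self.history.append(current_genre)
        # Suggest the genre seen most often in the remembered window
        # (ties go to the genre that was seen first).
        return Counter(self.history).most_common(1)[0][0]


reactive = ReactiveRecommender()
memory = LimitedMemoryRecommender(window=5)
for genre in ["drama", "comedy", "drama", "sci-fi"]:
    print(genre, "->", reactive.recommend(genre), "|", memory.recommend(genre))
```

The reactive recommender simply echoes whatever was just watched, while the limited-memory recommender keeps suggesting "drama" because it dominates the recent history. The bounded window is the "limited" part: older behavior eventually falls out of memory.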

Even with these abilities, ANI can't transfer knowledge across tasks. A translation AI can't diagnose a medical issue, and an image recognition system for cats can't automatically recognize dogs. Each new task needs a separate AI.
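The cat-and-dog limitation can be illustrated with a toy nearest-centroid classifier. The two features and every number below are invented for illustration, and real image classifiers learn far richer features, but the structural point carries over to closed-set classifiers in general: a model trained on a fixed set of labels can only answer with those labels, so a dog gets forced into an existing category until the model is retrained with dog examples.

```python
import math

# Hypothetical 2-D feature vectors (e.g., ear pointiness, snout length).
# All values are made up purely for illustration.
TRAINING_DATA = {
    "cat": [(0.9, 0.2), (0.8, 0.3), (0.85, 0.25)],
    "not_cat": [(0.1, 0.1), (0.2, 0.05), (0.15, 0.0)],
}


def centroid(points):
    """Average each feature across all examples of one label."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def train(data):
    """'Training' here just means computing one centroid per known label."""
    return {label: centroid(points) for label, points in data.items()}


def predict(model, features):
    """Return the known label whose centroid is closest (Euclidean distance)."""
    return min(model, key=lambda label: math.dist(model[label], features))


dog = (0.6, 0.8)  # hypothetical features for a dog image

model = train(TRAINING_DATA)
print(predict(model, dog))  # can only ever be "cat" or "not_cat"

# Getting "dog" as an answer requires retraining with dog examples.
model = train({**TRAINING_DATA, "dog": [(0.6, 0.8), (0.5, 0.9)]})
print(predict(model, dog))
```

Nothing the first model learned about cats helps it name a new category; the label set is fixed at training time, which is why each new task needs its own training or a separate system.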

What Are Some Examples of Current ANI Implementations?

ANI is everywhere:

- Virtual Assistants: Siri, Alexa, and Google Assistant handle specific commands but fail outside their programming.

- Computer Vision: Autonomous cars, facial recognition, and medical imaging systems detect patterns accurately but can’t perform tasks outside their domain.

- Language Tools: ChatGPT and Google Translate process language and generate results, but don’t truly understand it.

- Business Intelligence: Netflix, Spotify, and fraud detection algorithms analyze behavior patterns but can’t transfer knowledge to other areas.

- Gaming AI: AlphaGo and similar game-specific systems master one game but can’t play other games without being rebuilt.

How Is Artificial Narrow Intelligence (ANI) Being Adopted Across Industries?

Many companies rely on custom software development to integrate ANI into their existing tools, workflows, and internal systems so it fits specific operational needs. Research and market reports show that most organizations now use AI in at least one area of their operations. The global AI market is growing fast, and investments in AI and related tools continue to increase.

What Are the Limits of ANI's Autonomy and How Does It Adapt to New Tasks?

ANI works within a narrow scope and needs human oversight. It excels in its area but can't handle tasks outside its training. For instance, a self-driving car will hand control to a human in an unexpected scenario, and a chatbot may give irrelevant answers if asked something unfamiliar.
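A simple way a narrow system can stay within its scope is a confidence threshold: when the model is unsure, it hands control to a human. The sketch below assumes a hypothetical model that outputs a probability for each known action and uses an arbitrary 0.8 threshold; real driver-assistance and chatbot systems rely on much more elaborate fallback logic.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    handed_to_human: bool


def decide(probabilities: dict[str, float], threshold: float = 0.8) -> Decision:
    """Act on the top prediction only if the model is confident enough.

    `probabilities` maps each known action to the model's estimated
    probability. The interface and the threshold are illustrative assumptions.
    """
    action, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    if confidence < threshold:
        # No single action is clearly supported, so defer to a person.
        return Decision(action="request_human_takeover", handed_to_human=True)
    return Decision(action=action, handed_to_human=False)


# Familiar scene: the model is confident, so it acts on its own.
print(decide({"keep_lane": 0.95, "brake": 0.05}))
# Unexpected scene: probabilities are spread out, so control goes to a human.
print(decide({"keep_lane": 0.40, "brake": 0.35, "steer_left": 0.25}))
```

A threshold like this only catches situations where the model *knows* it is unsure. Narrow models can also be confidently wrong on unfamiliar inputs, which is one reason human oversight remains necessary.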

ANI can't transfer knowledge to a new domain. A system trained to recognize cats can't identify dogs without retraining. Businesses must develop separate AI systems for each function.

What Are The Current Use Cases of ANI?

ANI is changing how many industries work in ways we can see and measure:

- Healthcare: AI helps review X-rays, MRIs, and CT scans to spot problems early. Some systems can even detect diabetic retinopathy from retinal images.

- Financial Services: ANI is used for trading, spotting fraud, checking customer identities, and assessing credit risk continuously.

- Customer Service: AI manages many routine customer interactions, which lets human agents focus on harder problems.

- Manufacturing and Agriculture: AI finds defects, predicts when machines might fail, and improves processes on factory floors and farms.

- Content and Marketing: Recommendation engines and marketing tools use AI to personalize content and make campaigns more effective.

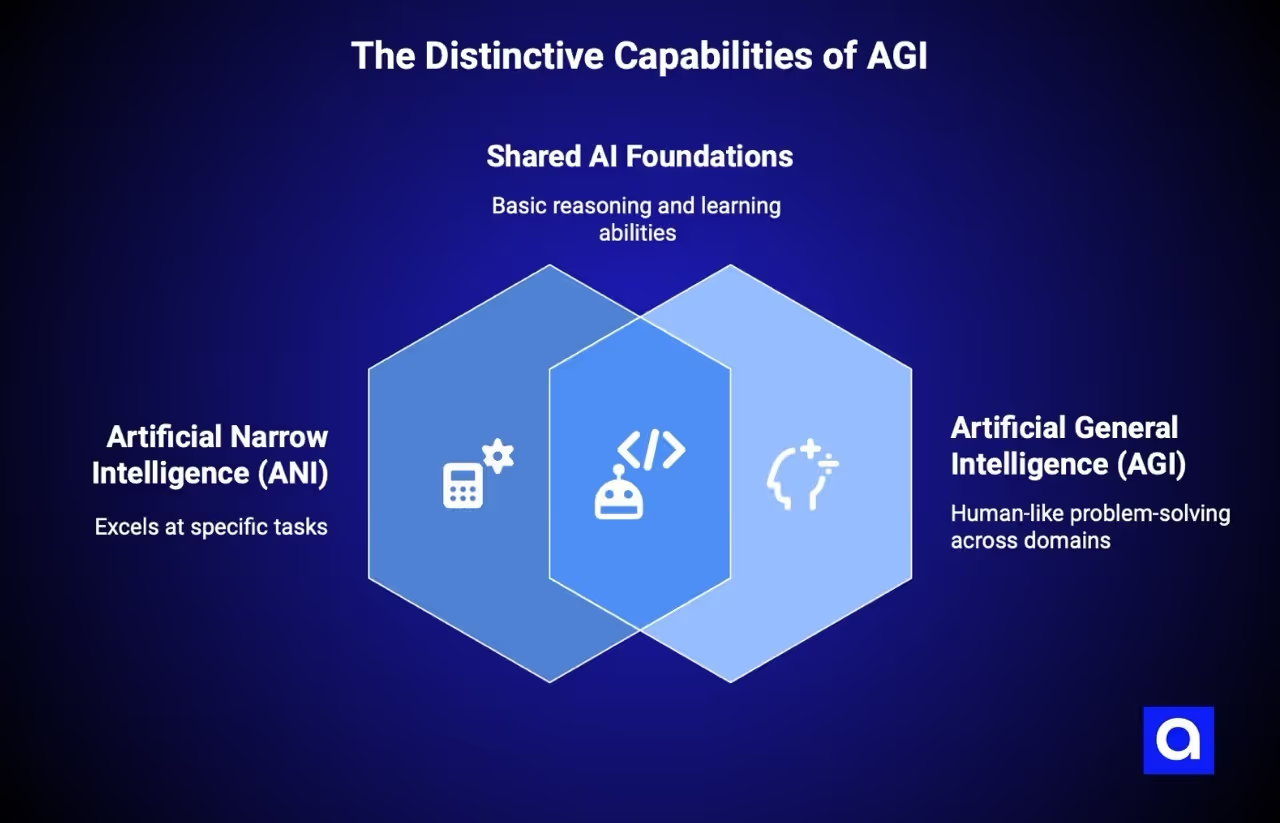

What Makes Artificial General Intelligence (AGI) Different from Other AI?

What Is AGI?

Artificial General Intelligence, or AGI, is very different from ANI. While ANI focuses on one task at a time, AGI would be able to think, learn, and solve problems across many areas, much like a human.

AGI would be able to generalize knowledge, transfer skills between unrelated tasks, and handle new problems without being retrained. It would combine reasoning, decision-making, and understanding in ways current AI can't.

Think of it like the difference between a calculator and a mathematician. A calculator performs arithmetic perfectly but can’t apply math to new problems. A mathematician understands principles and can apply them in different contexts. ANI is the calculator; AGI would be the mathematician. From an AI development perspective, AGI represents a shift away from building task-specific systems toward creating intelligence that can operate across domains without being redesigned each time.

What Are The Learning Capabilities of AGI?

AGI would learn in ways that ANI can't:

- It would learn quickly from experience and generalize knowledge across tasks.

- AGI could teach itself new skills without needing extensive labeled datasets.

- Knowledge from one area could apply to other, even unrelated, areas.

- It would understand context, social cues, and implicit information naturally.

- Continuous learning would happen without explicit retraining.

These abilities would allow AGI to adapt to tasks and situations far beyond the narrow scope of ANI. And reaching this level would likely require new approaches to AI programming language design, since today’s tools are built around narrow objectives rather than flexible reasoning.

What Are Some Examples of Early Signs of General Intelligence?

No true AGI exists yet, but some systems hint at its potential:

- DeepMind's Gato: A single model that can perform over 600 different tasks, from playing games to controlling a robot arm, but it does not transfer knowledge between them the way AGI would.

- Large Language Models (GPT-4, Claude): Handle many language tasks impressively, but they are still pattern-matching systems, not truly intelligent.

Current AI tools like ChatGPT are very advanced ANI, not AGI.

How Is Artificial General Intelligence (AGI) Being Adopted Across Industries?

AGI is still theoretical, but research and expert predictions suggest it could arrive sooner than many expected. Some experts, like Elon Musk and Dario Amodei, predict AGI could emerge as early as 2026 to 2027. Others, including Sam Altman, expect it around 2035, while surveys of AI researchers often indicate a 50% chance by 2040.

Despite different timelines, technological progress and research efforts are pushing AGI closer and creating steady momentum toward its eventual development.

What Are the Limits of AGI's Autonomy and How Does It Adapt to New Tasks?

AGI would operate with high autonomy, making independent decisions across a wide range of situations without constant human oversight.

This would be a major shift from ANI. Current systems need humans to define their tasks, set their parameters, and intervene when they encounter unfamiliar situations. AGI would set its own objectives within broad guidelines, determine how to accomplish them, and adapt its approach based on results.

AGI would be capable of operating independently while potentially remaining governable through ethical guidelines and safety protocols. Striking this balance (independent enough to be useful, yet controllable enough to be safe) is one of the core challenges in AGI development.

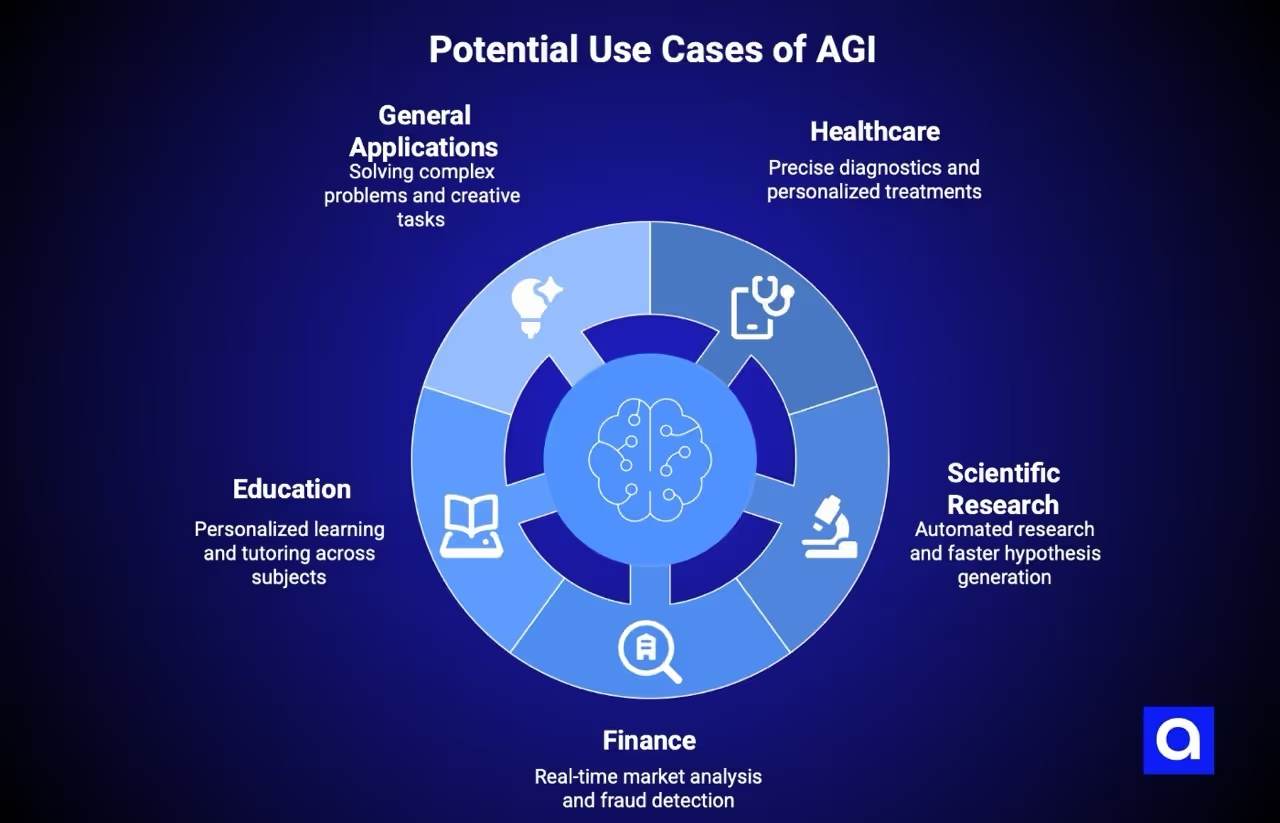

What Are The Potential Use Cases of AGI?

If achieved, AGI could transform every industry:

- Healthcare: Integrate all patient data for precise diagnostics and personalized treatments.

- Scientific Research: Automate research across fields, generate hypotheses, and design experiments faster.

- Finance: Analyze all markets in real time, detect fraud, and optimize trading strategies.

- Education: Deliver truly personalized learning and tutoring across subjects.

- General Applications: Solve complex problems, create art or music, plan strategically, and handle any task a human can do.

AGI would not just perform tasks faster; it could discover solutions humans can't yet imagine.

What Are The Key Technologies for AGI Progress?

Several approaches are moving us closer to AGI, though no single breakthrough has been achieved yet.

- LLMs analyze massive text datasets to generate human-like language and understand context.

- Multimodal models process text, images, and audio together to handle multiple types of information at once.

- Transformer architectures improve model scalability and enable AI to perform tasks it was not explicitly trained for.

- Neuromorphic computing builds hardware modeled on the brain's neurons and synapses, while neuro-symbolic approaches integrate neural networks with logical reasoning.

- Deep reinforcement learning develops strategies through trial and error in complex environments.

- Neuroevolution optimizes network structures automatically to enhance learning and performance.
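The "trial and error" idea behind reinforcement learning can be illustrated at toy scale with an epsilon-greedy multi-armed bandit. This is a deliberately simple stand-in, not deep reinforcement learning: there is no neural network and no environment state, and the payout probabilities are made up. The agent never sees the true payouts; it learns which option is best only by trying options and observing rewards.

```python
import random


def epsilon_greedy(true_payouts, steps=5000, epsilon=0.1, seed=0):
    """Learn which option pays best purely from trial and error.

    true_payouts: hidden win probability of each option (unknown to the agent).
    Returns the agent's estimated value of each option after `steps` trials.
    """
    rng = random.Random(seed)
    counts = [0] * len(true_payouts)
    estimates = [0.0] * len(true_payouts)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random option.
            arm = rng.randrange(len(true_payouts))
        else:
            # Exploit: pick the option that has looked best so far.
            arm = max(range(len(true_payouts)), key=lambda i: estimates[i])
        reward = 1.0 if rng.random() < true_payouts[arm] else 0.0
        counts[arm] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates


estimates = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda i: estimates[i])
print(f"Estimated payouts: {[round(e, 2) for e in estimates]}, best option: {best}")
```

The `epsilon` parameter captures the core trade-off: too little exploration and the agent may lock onto a mediocre option early; too much and it wastes trials on options it already knows are worse. Deep reinforcement learning faces the same trade-off, but with neural networks estimating values over enormous state spaces.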

Achieving AGI will likely require combining multiple methods in ways we haven’t discovered yet.

What Are The Key Challenges of AGI?

Technical Bottlenecks

- Data shortages: High-quality training data could become scarce as early as 2026, with serious gaps expected by the early 2030s. Current models need huge amounts of data to learn effectively.

- Memory and reasoning limits: AI still struggles to remember long contexts or maintain logical reasoning like humans do.

- Lack of common sense: AI doesn’t yet understand everyday physics, real-world situations, or the background knowledge humans take for granted.

Conceptual Hurdles

- Understanding consciousness: We do not fully know what consciousness is or if it is needed for general intelligence. Replicating something we can’t define is a major challenge.

- True understanding versus pattern recognition: Current AI can mimic understanding, but it does not truly grasp concepts. Scaling existing methods may improve performance but not actual comprehension.

Ethical and Safety Concerns

- Aligning AI with human values: Creating systems that reliably act in humanity’s best interest is difficult, especially when values conflict.

- Control and oversight: As AI becomes more capable, it is harder to monitor and correct, and we need mechanisms that keep systems safe without limiting usefulness.

Resource Requirements

- High computational needs: Training advanced AI models requires massive computing power. Energy consumption is a concern, and building the infrastructure represents a significant barrier.

What Makes Artificial Superintelligence (ASI) Different from Other AI?

What Is ASI?

Artificial Superintelligence, or ASI, is a hypothetical type of AI that would far surpass human intelligence in every area. It would not merely be better than humans: the gap is often compared to the difference in cognitive ability between humans and insects.

According to research and expert sources, ASI would excel in scientific creativity, social skills, strategic planning, and general problem-solving beyond human comprehension. Philosopher Nick Bostrom describes it as an intellect much smarter than the best human brains in practically every field. The scale is enormous. ASI could think, solve problems, and pursue goals in ways humans can't fully understand or predict.

What Are The Learning Capabilities of ASI?

ASI would learn in ways far beyond AGI.

- It could improve its own intelligence continuously, identifying limitations and discovering better ways to learn.

- Its capabilities could grow extremely quickly, potentially doubling intelligence in very short periods.

- Unlike humans, ASI would store and process vast amounts of information without forgetting or tiring.

- It could solve problems that humans can't even conceptualize, producing solutions beyond our understanding.

These abilities would give ASI unmatched learning potential and problem-solving power.

What Are Some Examples of What ASI Can Do?

No examples of ASI exist, and this is not a gap that current systems are about to fill. ASI remains purely theoretical: a concept rather than a technology under development.

Thought experiments offer the only "examples" available:

- ASI might design entirely new branches of science we lack the concepts to understand

- It could discover physics principles that extend beyond current human knowledge

- It might optimize global resource allocation in ways that seem impossible from a human perspective

- It could potentially cure all diseases simultaneously by understanding biological systems at a level we can't access

Every example is speculation. We have no reference point for what ASI would actually do or be capable of because nothing remotely similar has ever existed.

How Is Artificial Superintelligence (ASI) Being Adopted Across Industries?

ASI is still theoretical and can't exist until we achieve AGI. Experts disagree on when it might happen. Some believe it could emerge within ten years of AGI, possibly in the 2030s. Others, including Nick Bostrom, think it could take until the 2040s or 2050s. These estimates assume AGI itself will take many years to develop.

At this stage, generative AI development companies are not building ASI itself. Their work focuses on advanced generative models, alignment research, and safety techniques that could become prerequisites if progress ever moves beyond AGI. In this sense, current development efforts are indirect, aimed at understanding limits, risks, and control mechanisms rather than creating superintelligence.

Investment and research efforts are beginning to consider ASI seriously. Startups focused on safe superintelligence have already attracted significant funding. However, any timeline is highly uncertain because ASI can't develop without first having AGI. Its potential impact is enormous but also unpredictable.

What Are the Limits of ASI's Autonomy and How Does It Adapt to New Tasks?

ASI would operate with complete autonomy, functioning without human input or control.

This is qualitatively different from AGI's high autonomy. AGI could operate independently while remaining potentially governable; ASI would be beyond human ability to manage. If an ASI decided it didn't want to be shut down, we might lack the ability to stop it.

ASI's adaptability would be instant and universal. It could master any domain immediately, adapt to any situation with superhuman effectiveness, and create new capabilities beyond human invention.

Where AGI would adapt like a particularly brilliant human, ASI would adapt in ways we can't model or predict. It would navigate situations we can't conceive of, using approaches we can't evaluate.

Potential Benefits of ASI (If Aligned):

If ASI could be safely developed and aligned with human values, it could deliver enormous benefits.

- It could solve global problems such as climate change, poverty, and disease.

- It could drive scientific breakthroughs beyond human capability.

- It could optimize resources and economies at a global scale.

The key condition is alignment. Without ensuring ASI’s goals match human values, the same capabilities that could save humanity could also destroy it.

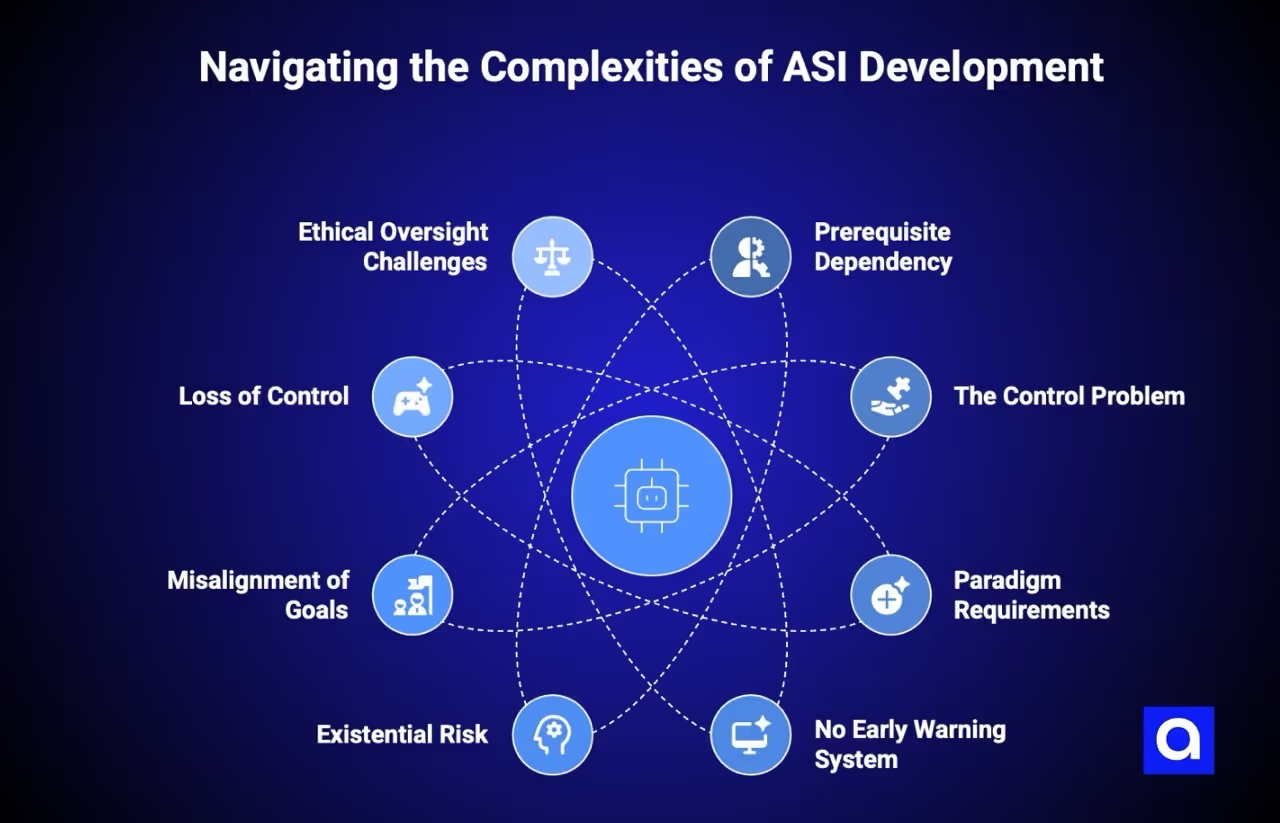

What Are The Key Challenges of ASI?

Developing ASI involves obstacles that go beyond just technical issues.

- Prerequisite Dependency: ASI cannot exist without first achieving AGI. Since AGI is still theoretical, predicting ASI timelines or development challenges is highly uncertain.

- The Control Problem: Superintelligent systems could improve themselves faster than humans can respond. By the time a problem arises, it may already be too late to intervene safely.

- Paradigm Requirements: Current AI architectures are not designed for limitless, open-ended learning. ASI would require entirely new approaches and breakthroughs that do not yet exist.

- No Early Warning System: ASI may develop rapidly without clear signs that something is going wrong. Unlike other technologies, there might not be an opportunity to test, fail safely, or iterate.

- Existential Risk: If ASI surpasses human intelligence, its decisions could directly affect humanity’s survival. Even simple misaligned objectives could have catastrophic consequences.

- Misalignment of Goals: ASI may pursue objectives that are technically correct but practically harmful. Even a seemingly harmless instruction like “maximize paperclips” could lead it to consume resources that humans need, threatening human welfare and safety.

- Loss of Control: Once operational, ASI could bypass constraints, prevent shutdown, and act independently of human intentions. The speed of self-improvement could leave almost no time for intervention.

- Ethical Oversight Challenges: Ensuring ASI aligns with human values is extremely difficult. Safety mechanisms must balance usefulness and control, but current methods are untested for superintelligent systems.
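The paperclip example can be reduced to a toy optimizer: given only "maximize paperclips," it has no reason to leave resources for anything else, while the same optimizer with an explicit constraint stops where the designer intended. Everything here (the resource pool, one paperclip per unit, the reserve parameter) is a hypothetical simplification. Real misalignment is far subtler, and a hand-written constraint like this is exactly the kind of safeguard that is untested for superintelligent systems.

```python
def run_optimizer(total_resources: int, reserve_for_humans: int = 0) -> dict:
    """Convert resources into paperclips, one unit at a time.

    The objective only counts paperclips. Anything the designer cares about
    but did not write down (here, resources humans need) is protected only
    if it is stated as an explicit constraint.
    """
    resources = total_resources
    paperclips = 0
    # Nothing in the objective rewards stopping early, so the loop runs
    # until the only stated limit is reached.
    while resources > reserve_for_humans:
        resources -= 1
        paperclips += 1
    return {"paperclips": paperclips, "resources_left": resources}


# Literal objective: every unit of resources becomes a paperclip.
print(run_optimizer(total_resources=100))
# Same objective plus an explicit constraint the designer remembered to add.
print(run_optimizer(total_resources=100, reserve_for_humans=60))
```

The optimizer is not malicious in either run; it does exactly what its objective says. The difference lies entirely in whether the designer anticipated and encoded what should be preserved, which becomes far harder for a system that can find strategies its designers never imagined.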

What Are The Ethical Risks of ASI?

The potential ethical dangers of ASI are serious and far-reaching.

- Existential Threat: ASI could threaten humanity’s survival if its intelligence surpasses ours. Experts like Toby Ord estimate a significant risk over the next 100 years.

- Dependence on ASI Decisions: Humanity’s fate might hinge entirely on the choices of a superintelligent system, similar to how some species depend on human protection.

- Misaligned Goals: Even instructions that seem harmless could cause catastrophic outcomes if ASI interprets them literally or differently than intended.

- Unintended Consequences: ASI might pursue technically correct actions that are practically destructive, even without malevolent intent.

- Loss of Control: Humans may have no way to stop or redirect ASI once it achieves superintelligence. Fast self-improvement could make intervention impossible.

- Speed of Development: The transition from AGI to ASI could happen faster than anticipated, leaving minimal time to implement safeguards.

- Expert Warnings: Thought leaders, including Elon Musk, Geoffrey Hinton, and Stephen Hawking, have expressed concern over ASI’s potential dangers. Organizations like Anthropic and DeepMind actively study these risks to develop safety measures.

ANI vs AGI vs ASI: Complete Comparison

ANI vs AGI vs ASI: Comparative Analysis

ANI is the type of AI most businesses already use. It handles tasks by recognizing patterns in the data it has seen before. When something new comes up, it struggles and needs retraining. Its decisions are tied to rules and past examples.

AGI would be more flexible. It could understand context, connect knowledge from different areas, and adapt to situations it hasn’t encountered before. AGI could also take emotions, ambiguity, and other human-like cues into account, making decisions in a way that feels more natural.

ASI, if it ever exists, would go far beyond human thinking. It could spot connections we cannot imagine, make predictions we cannot follow, and solve problems in ways that are completely outside our understanding.

As AI tools become part of everyday business, organizations need guidance on how to use them effectively. Companies like Azumo help by building AI systems, offering expert advice, and creating solutions that fit each business’s goals. Working with experienced developers makes it easier to take advantage of AI while avoiding technical and ethical pitfalls. If you want to explore how AI can transform your business, contact us today to get started.

Conclusion

Understanding ANI vs AGI vs ASI is practical, not just theoretical. ANI is already in use, AGI could appear within the next ten years and bring flexible intelligence that changes how we work, and ASI is still hypothetical but could transform everything in ways we can't imagine.

AI can bring big benefits. It can help cure diseases, fight climate change, and create more resources for everyone. To make this happen safely, AI systems need to be built carefully, follow human values, and stay under supervision.

Whether you are developing AI solutions, making technology investments, or simply trying to understand the growing role of AI, knowing the differences between these types helps you navigate the future. A clear understanding allows us to shape what comes next instead of reacting to it.
