
AI hosting platforms offer the infrastructure to deploy and manage AI models efficiently. This article introduces the top AI hosting solutions for 2025, detailing their standout features and how they can help your business.

Key Takeaways

- AI hosting platforms are essential for deploying AI applications, with advancements in performance, cost, and features making them more accessible for businesses.

- Key AI hosting platforms for 2025 include Azure AI Studio, AWS Bedrock, GCP Vertex AI, Hugging Face Enterprise, and NVIDIA Triton Inference Server, each offering unique advantages for different AI workloads.

- Cost-effective pricing models like pay-as-you-go and budget-friendly options are crucial for supporting startups and small enterprises in leveraging AI technology without heavy financial burdens.

Understanding AI Hosting

AI hosting platforms are specifically designed to host AI-powered applications, providing the necessary infrastructure for high-performance computing. These platforms are built to handle the substantial hardware resources required for running AI models, which go beyond the capabilities of standard servers or virtual machines. Recent advances in AI hosting options have significantly improved capabilities, pricing, and features offered, making it easier for businesses to deploy and manage AI applications.

Selecting the right AI hosting platform is pivotal for successful AI application development. The choice influences everything from performance and scalability to cost and deployment options. Expert cloud experience plays a vital role in helping clients select the most suitable AI hosting platform for their needs, ensuring they can leverage the full potential of AI. When choosing an AI hosting solution, weigh four key factors: scalability, cost, deployment options, and platform-specific features.

One of the significant advancements in AI hosting is the pay-per-use billing structure, allowing users to align costs with the actual resources consumed. This model offers flexibility and cost-effectiveness, particularly for businesses with fluctuating AI workloads. Platforms like Modal offer serverless hosting for AI models, charging solely for the compute resources utilized, making them a cost-effective choice for many.

AI hosting matters more each year. As AI applications continue to evolve and become more sophisticated, the demand for robust, scalable, and cost-effective hosting solutions will only grow. Understanding the intricacies of AI hosting and the available options is the first step toward harnessing the full power of AI in your business operations.

At Azumo, we recently worked with a retail client to implement an AI hosting platform for their customer service chatbot. The platform's powerful GPUs and serverless computing made it easy for us to quickly scale the app to handle large volumes of user queries, perfect for their traffic spikes during busy sales events.

Plus, with the pay-per-use billing model, the client only paid for the resources they used, which meant they didn’t waste any resources during quieter times. It was a cost-effective and efficient solution that helped them meet customer demand without breaking the bank.

Top AI Hosting Platforms for 2025

In 2025, the landscape of AI hosting platforms is rich with innovative solutions designed to meet diverse needs. Leading the charge are:

- Azure AI Studio

- AWS Bedrock

- GCP Vertex AI

- Hugging Face Enterprise

- NVIDIA Triton Inference Server

Each of these platforms offers unique features and capabilities, making them suitable for different types of AI applications and workloads.

Let’s delve deeper into what makes each of these platforms stand out.

Azure AI Studio

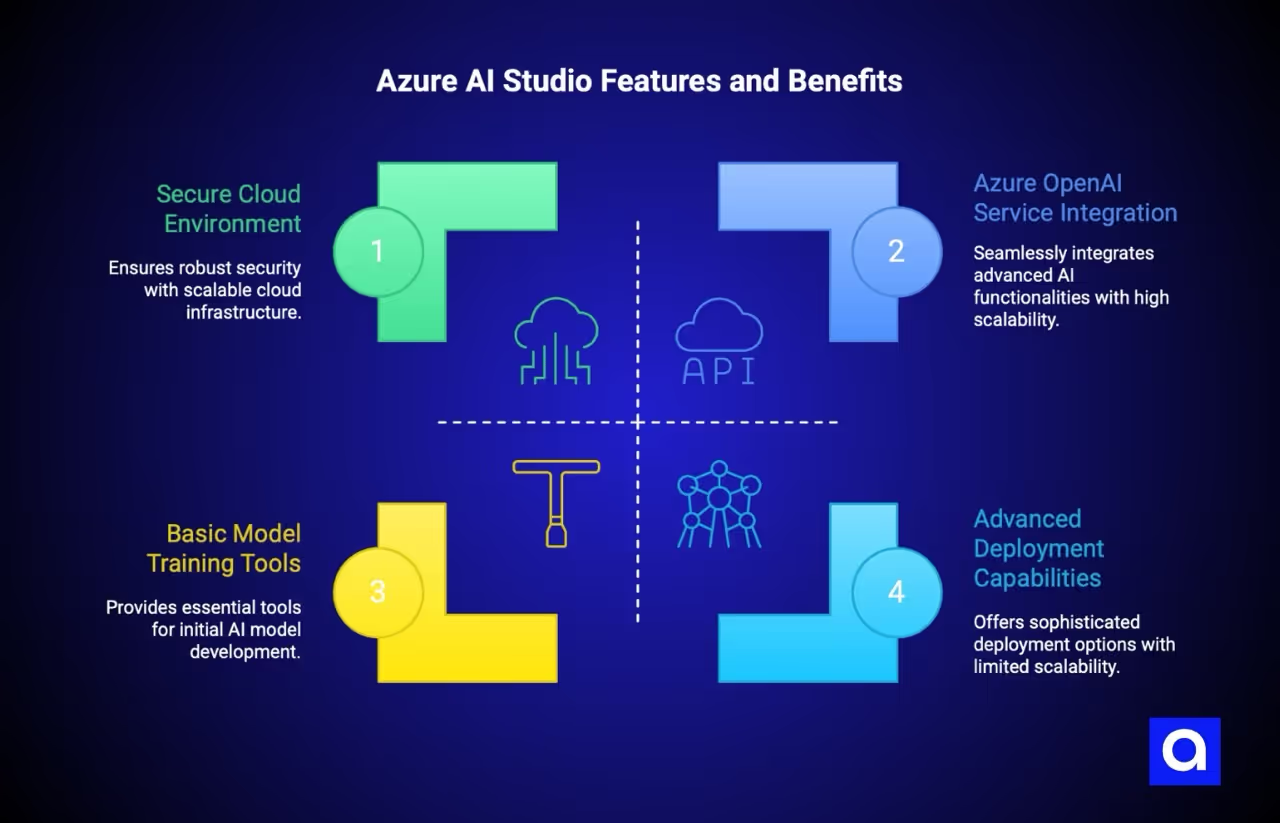

Azure AI Studio, a cloud-based platform from Microsoft, is designed for building, deploying, and managing AI models at scale. It provides a comprehensive suite of tools and services that cater to the entire lifecycle of AI development, from model training to deployment. This makes it an ideal choice for businesses looking to integrate AI into their operations seamlessly.

One of the standout features of Azure AI Studio is its secure and scalable cloud environment. This ensures that AI workloads can be handled efficiently without compromising on performance or security. The platform’s integration with the Azure OpenAI Service adds another layer of capability, allowing businesses to incorporate advanced AI functionalities like natural language processing and conversational AI into their applications.

Whether you’re developing machine learning models or deploying AI-powered apps, Azure AI Studio offers a robust and flexible platform to meet your needs. With its comprehensive tools and secure environment, it stands out as a state-of-the-art choice for businesses looking to leverage AI in 2025.

AWS Bedrock

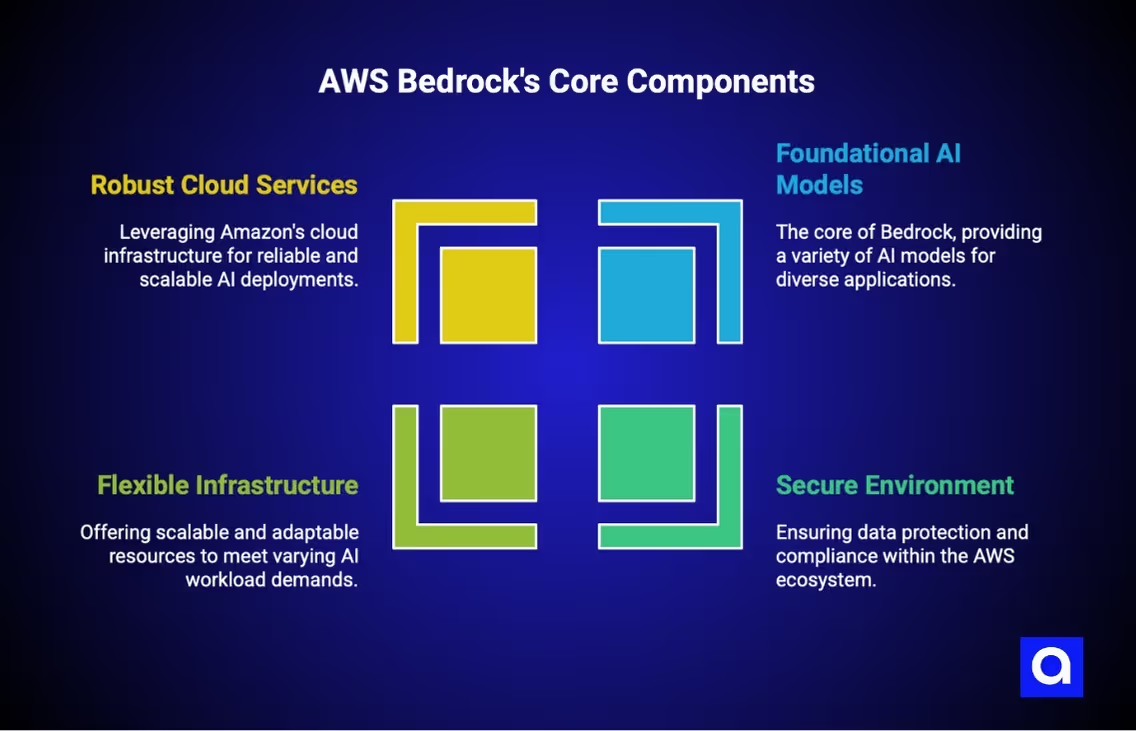

AWS Bedrock (officially Amazon Bedrock) is Amazon’s managed cloud service for building with and deploying foundation models in a secure and flexible environment. Operating within the AWS ecosystem, it provides a reliable and scalable infrastructure for AI workloads.

It stands as a strong contender for businesses deploying AI-powered apps supported by Amazon’s robust cloud services.

At Azumo, we used AWS Bedrock to build an AI solution for a financial services client. By taking advantage of Bedrock’s secure and scalable infrastructure, we were able to deploy machine learning models quickly, which allowed the client to process large amounts of data in real time. This significantly improved their fraud detection system. AWS Bedrock made it easy to integrate with their existing infrastructure, and as their needs grew, we were able to scale efficiently. The flexibility of the platform helped us keep things secure and cost-effective while meeting performance requirements.

Note: All examples described in this article are based on real engineering implementations delivered by Azumo’s development team, adapted for clarity and confidentiality.

GCP Vertex AI

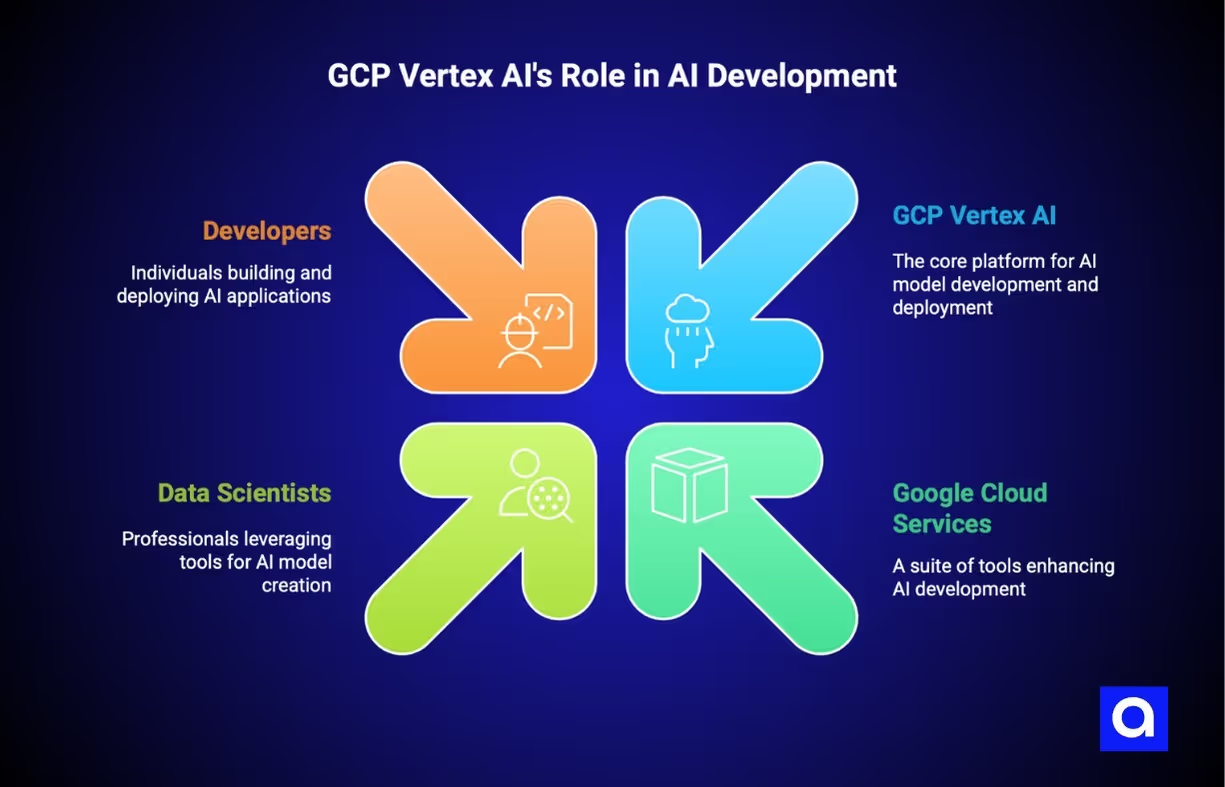

GCP Vertex AI is designed to streamline the development and deployment of machine learning models within Google Cloud’s ecosystem. It integrates seamlessly with other Google Cloud services, enabling data scientists and developers to leverage existing tools and services to improve efficiency and reduce time to market for AI solutions.

This seamless integration is particularly beneficial for businesses looking to develop generative AI models and other sophisticated AI applications. GCP Vertex AI, with Google Cloud’s suite of tools and services, offers a powerful platform for building and deploying AI solutions at scale.

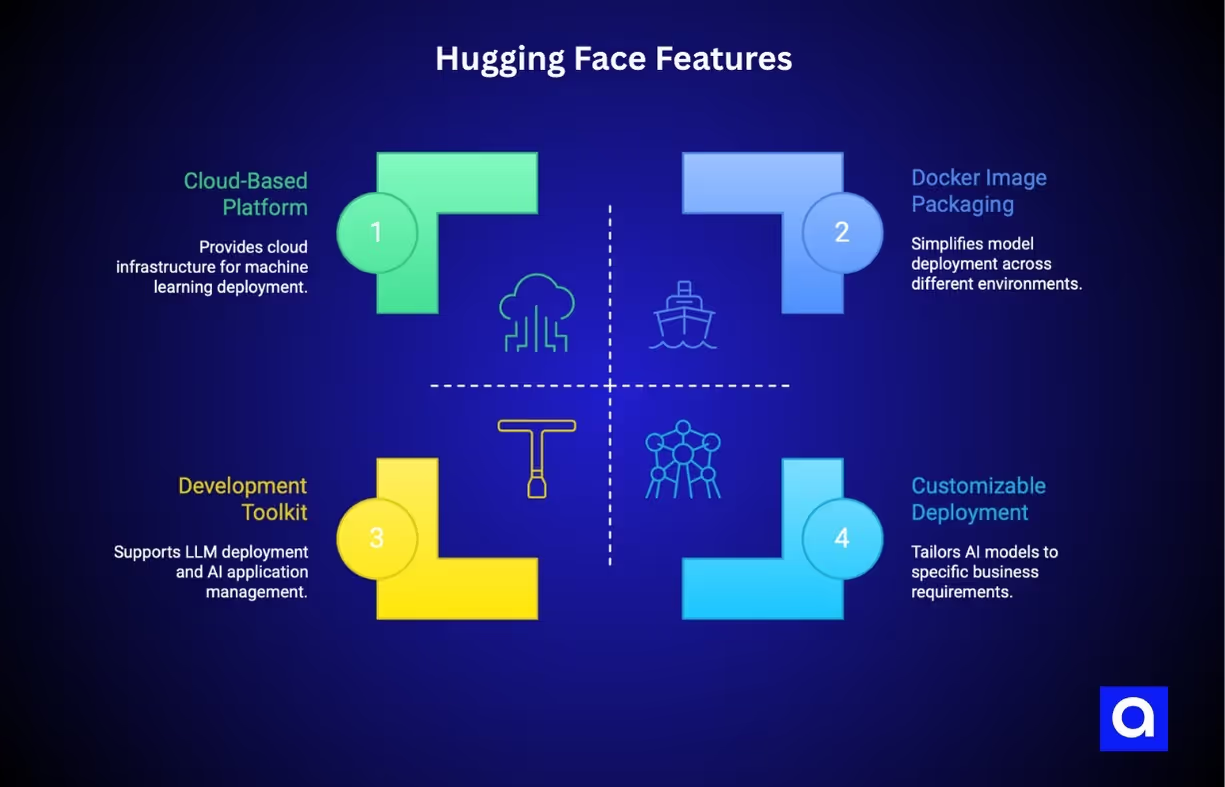

Hugging Face Enterprise

Hugging Face Enterprise provides a cloud-based platform with tools and infrastructure for machine learning deployment. Its serving toolkit, Hugging Face TGI (Text Generation Inference), supports the deployment and serving of large language models (LLMs), making it easier to build and manage AI applications. Additionally, Hugging Face Enterprise allows packaging models as Docker images, simplifying deployment across various environments.

The platform emphasizes customizable deployment options, enabling businesses to tailor their AI models to specific needs. Features like batching of concurrent API requests, quantization, token streaming, and telemetry further enhance its capabilities, making Hugging Face Enterprise a versatile choice for deploying machine learning models.
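To make token streaming concrete, here is a minimal sketch of a client that reads tokens as they arrive. It assumes a TGI-style `/generate_stream` endpoint that returns server-sent events whose `data:` lines carry a JSON object with a `token` field. The function names, the sample events, and the base URL you would pass in are illustrative assumptions, not part of any project described here.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_tokens(lines: Iterable[str]) -> Iterator[str]:
    """Yield token text from server-sent-event lines of a streaming response."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and anything that is not an event
        event = json.loads(line[len("data:"):])
        token = event.get("token") or {}
        if token.get("special"):
            continue  # skip control tokens such as end-of-sequence
        text = token.get("text")
        if text:
            yield text


def stream_from_server(base_url: str, prompt: str, max_new_tokens: int = 64) -> Iterator[str]:
    """POST a prompt to an assumed TGI-style endpoint and yield tokens as they arrive."""
    body = json.dumps(
        {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    ).encode("utf-8")
    request = urllib.request.Request(
        base_url.rstrip("/") + "/generate_stream",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        yield from iter_stream_tokens(line.decode("utf-8") for line in response)


# Hypothetical events, shaped like the stream described above (no server needed).
sample_events = [
    'data:{"token":{"id":1,"text":"Hello","logprob":-0.1,"special":false}}',
    "",
    'data:{"token":{"id":2,"text":" world","logprob":-0.2,"special":false}}',
    'data:{"token":{"id":3,"text":"</s>","logprob":-0.3,"special":true}}',
]
print("".join(iter_stream_tokens(sample_events)))
```

Keeping the parser separate from the network call means the parsing logic can be checked offline, and the user interface can render each token the moment it is yielded instead of waiting for the full response.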

We’ve had the opportunity to work with Hugging Face TGI to deploy custom-tuned LLMs for clients in both healthcare and e-commerce. What made Hugging Face stand out for these projects was its ability to handle quantization and token streaming, features that were essential for optimizing performance in low-latency applications.

For example, in healthcare, we were able to process sensitive patient data in real time, delivering fast, accurate results for clinicians without compromising on security or compliance. In e-commerce, the real-time nature of token streaming allowed us to quickly analyze customer queries and provide personalized recommendations on the spot, which significantly improved the shopping experience. Hugging Face TGI's capabilities made it possible to meet the unique demands of both industries, ensuring seamless, high-performance deployments that scaled as needed.
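For readers unfamiliar with quantization, the idea can be sketched in a few lines. The example below shows generic symmetric 8-bit quantization of a list of weights. It is an illustration of the concept only, not the specific scheme TGI or any client project used, and the sample weights are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Made-up weights for illustration.
weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per value. That trade of a little accuracy for less memory and faster inference is why quantization matters in low-latency deployments.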

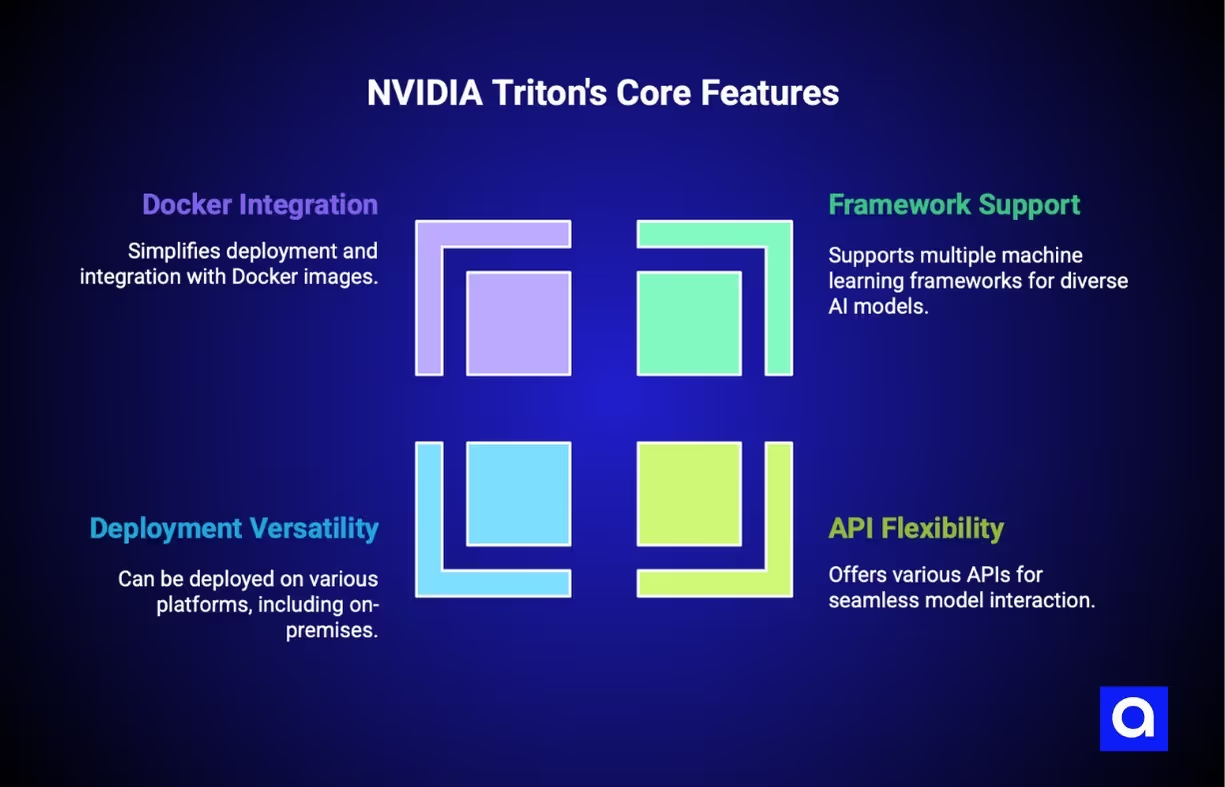

NVIDIA Triton Inference Server

The NVIDIA Triton Inference Server is a powerful tool designed for deploying AI models efficiently. It supports various machine learning frameworks and offers a range of APIs, including C, Java, HTTP, and gRPC, for model interaction. This flexibility makes it a suitable choice for diverse AI workloads.

NVIDIA Triton can be deployed robustly on various platforms, including on-premises solutions. Its distribution as a Docker image simplifies deployment and integration, providing high flexibility and scalability for AI model deployment.
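As a rough sketch of what the HTTP interface looks like, the snippet below builds a request body in the KServe v2 inference format that Triton's HTTP endpoint accepts. The model name, the tensor names `INPUT0` and `OUTPUT0`, and the server address are hypothetical; a real deployment takes them from the model's configuration.

```python
import json
from typing import Dict, List, Sequence


def build_infer_request(
    input_name: str,
    rows: List[List[float]],
    datatype: str = "FP32",
    output_names: Sequence[str] = (),
) -> Dict:
    """Build a v2 inference request body for one 2-D input tensor."""
    num_cols = len(rows[0]) if rows else 0
    if any(len(row) != num_cols for row in rows):
        raise ValueError("all rows must have the same length")
    payload: Dict = {
        "inputs": [
            {
                "name": input_name,
                "shape": [len(rows), num_cols],
                "datatype": datatype,
                # Tensor values flattened in row-major order.
                "data": [value for row in rows for value in row],
            }
        ]
    }
    if output_names:
        payload["outputs"] = [{"name": name} for name in output_names]
    return payload


def infer_url(base_url: str, model_name: str) -> str:
    """Return the inference endpoint for a model on a given server."""
    return f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"


# Hypothetical model and tensor names, for illustration only.
payload = build_infer_request("INPUT0", [[1.0, 2.0], [3.0, 4.0]], output_names=["OUTPUT0"])
print(infer_url("http://localhost:8000", "example_model"))
print(json.dumps(payload, indent=2))
```

Sending this body with an HTTP POST to the printed URL would run inference on a server that hosts a matching model. The gRPC API carries the same information in a binary format.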

Overall, NVIDIA Triton Inference Server is a top contender for businesses looking to leverage high-performance AI hosting solutions.

Custom Models and Fine-Tuning

Custom models are designed to meet specific requirements, leading to enhanced accuracy and better performance in specialized tasks. These models, enriched with domain-specific knowledge, better understand context and language unique to particular industries.

Fine-tuning a model on targeted data sharpens it for a specific use, such as marketing personalization, allowing businesses to deliver tailored user experiences.

Full Control Over AI Models

Owning custom AI models allows businesses to tailor their functionalities closely to their operational needs without dependencies on service providers. This complete ownership ensures full control over model functionality and updates, providing the freedom to adapt and improve AI models as needed.

Platforms like Hugging Face Enterprise support open-source customization and fine-tuning of models, allowing businesses to align models with their specific needs. Avoiding vendor lock-in, a point that providers such as Together AI also emphasize, gives users freedom and control over their AI models, which improves personalization and performance.

User-Friendly APIs

Easy-to-use APIs provide features that facilitate the fine-tuning of models, making it accessible to a broader audience. These APIs enable tailored customization and complete ownership of models, allowing users to fully adapt or fine-tune them based on specific needs.

User-friendly APIs are essential for simplifying the process of developing and deploying AI models. They enhance the efficiency and effectiveness of deploying custom AI models, making advanced AI capabilities accessible even to those without extensive technical expertise.

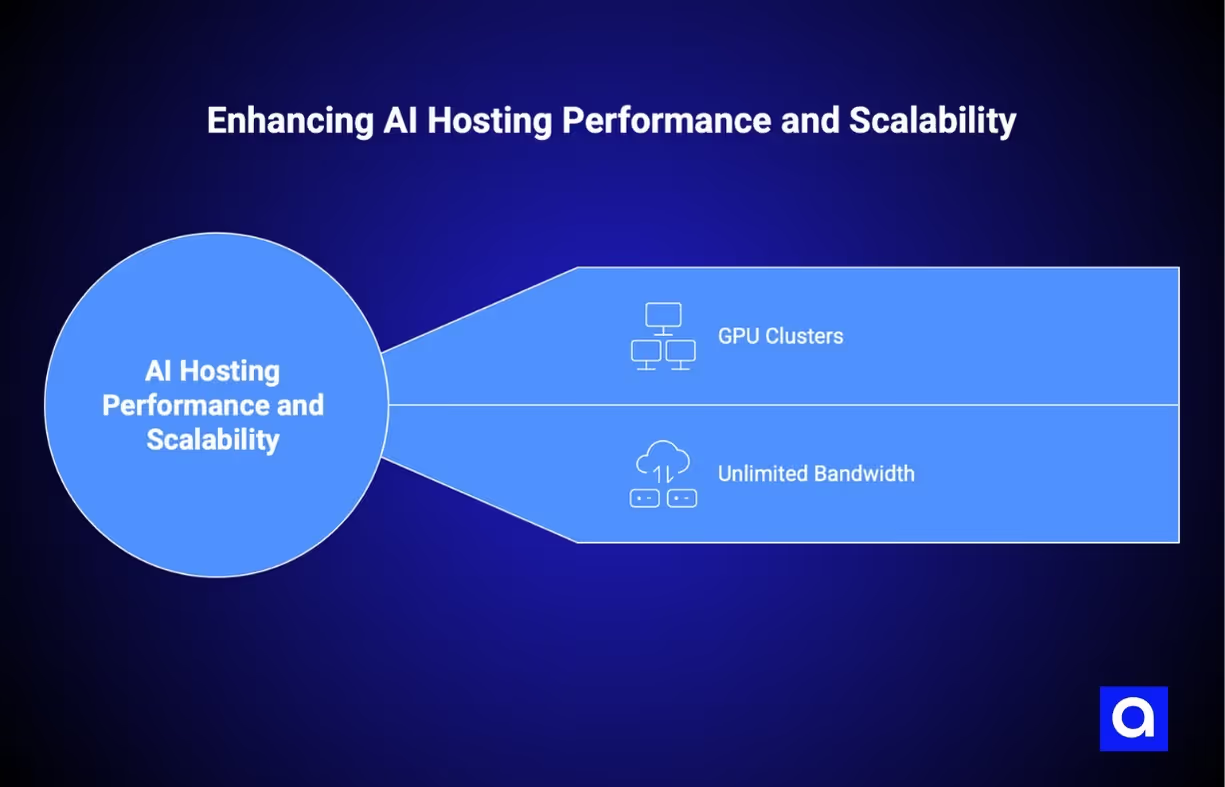

High Performance and Scalability

High performance is critical in AI hosting to ensure efficient data processing and timely decision-making, especially as AI models require rapid and reliable data transfer. Future-proof AI hosting architectures must be designed to adapt to evolving computational needs and support complex workloads, ensuring consistent and high performance for AI applications.

GPU Clusters

GPU clusters are essential for speeding up the training and inference processes of AI models, providing specialized hardware designed for parallel processing. Combining multiple NVIDIA GPUs with a host CPU increases computational power and reduces the time required for model training and inference.

These clusters offer various configurations to meet diverse workload demands, facilitating faster model training and inference by leveraging parallel processing capabilities. This makes GPU clusters a critical component in AI hosting platforms, ensuring high performance and scalability for AI applications.
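The parallel processing described above can be illustrated with a small data-parallel sketch: split a batch into one shard per device, process the shards concurrently, and merge the results in order. Threads stand in for GPUs here, and `double_all` is a made-up placeholder for a model's forward pass, so this shows the pattern rather than real GPU code.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def shard_batch(batch: Sequence[int], num_devices: int) -> List[List[int]]:
    """Split a batch into num_devices contiguous shards of near-equal size."""
    if num_devices < 1:
        raise ValueError("num_devices must be at least 1")
    base, extra = divmod(len(batch), num_devices)
    shards, start = [], 0
    for device in range(num_devices):
        size = base + (1 if device < extra else 0)  # early shards absorb the remainder
        shards.append(list(batch[start:start + size]))
        start += size
    return shards


def run_data_parallel(
    fn: Callable[[List[int]], List[int]], batch: Sequence[int], num_devices: int
) -> List[int]:
    """Apply fn to each shard concurrently and concatenate results in input order."""
    shards = shard_batch(batch, num_devices)
    with ThreadPoolExecutor(max_workers=num_devices) as pool:
        results = list(pool.map(fn, shards))  # map preserves shard order
    return [item for shard_result in results for item in shard_result]


def double_all(shard: List[int]) -> List[int]:
    """Placeholder for a per-device model forward pass."""
    return [2 * x for x in shard]


print(shard_batch(list(range(10)), 4))
print(run_data_parallel(double_all, list(range(10)), 4))
```

Because each shard is independent, adding devices shortens the wall-clock time for a batch, which is the property that makes clusters attractive for both training and high-volume inference.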

Unlimited Bandwidth

Having unlimited bandwidth is crucial for AI applications, as it ensures stable performance and facilitates the management of high-volume data transfers without interruptions. This is especially important for real-time data processing and large-scale deployments, where consistent, fast performance is essential.

Unlimited bandwidth allows AI applications to handle large datasets effectively, ensuring uninterrupted data flow and maintaining consistent performance levels. This makes it a vital feature for AI hosting platforms, supporting the efficient operation of extensive AI applications.

Cost-Effective AI Hosting Platforms

Cost-effective AI hosting services are essential for businesses looking to leverage AI technology without incurring heavy financial burdens. Flexible payment options and affordable hosting plans make it easier for startups and small enterprises to deploy and manage AI applications.

Pay-As-You-Go Pricing

The pay-as-you-go pricing model allows users to only pay for the resources they consume, making it easier for businesses to manage costs. This model provides flexibility, allowing users to scale resources according to demand without significant upfront commitments. Prices can vary based on the resources utilized.

Providers frequently offer promotional credits or discounts to attract startups looking for budget-conscious solutions. These incentives lower the barrier to trying a platform and can speed up purchase decisions, which adds to the appeal of the pay-as-you-go model for many businesses.
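A quick back-of-the-envelope comparison shows why this model suits fluctuating workloads. The sketch below compares paying only for busy hours against keeping the same instance running all month. The hourly rate and usage figures are hypothetical placeholders, not quotes from any provider.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def pay_as_you_go_cost(busy_hours: float, hourly_rate: float) -> float:
    """Cost when billed only for the hours the resource is actually used."""
    if busy_hours < 0 or hourly_rate < 0:
        raise ValueError("hours and rate must be non-negative")
    return busy_hours * hourly_rate


def always_on_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost when the same resource runs for the whole billing period."""
    if hourly_rate < 0 or hours < 0:
        raise ValueError("hours and rate must be non-negative")
    return hours * hourly_rate


# Hypothetical figures: a GPU instance at $2.00 per hour, busy 120 hours a month.
rate = 2.00
busy = 120

on_demand = pay_as_you_go_cost(busy, rate)
fixed = always_on_cost(rate)

print(f"Pay-as-you-go: ${on_demand:,.2f}")
print(f"Always on:     ${fixed:,.2f}")
print(f"Difference:    ${fixed - on_demand:,.2f}")
print(f"Utilization:   {busy / HOURS_PER_MONTH:.0%}")
```

The gap narrows as utilization rises, so a workload that is busy most of the month may be better served by reserved or committed pricing where a provider offers it.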

Budget-Friendly Options

Implementing a pay-as-you-go model can significantly relieve financial strain for startups by offering flexibility in scaling resources according to demand. Many budget-friendly AI hosting providers offer tiered pricing models to cater to startups and small enterprises. Providers like Lambda Labs and Runpod are recognized for their affordable options, making them suitable for smaller businesses and startups looking to leverage AI technology without heavy financial burdens.

Some hosting providers cater specifically to startups by offering plans priced as low as $1 per month, making AI hosting more accessible. This affordability allows businesses to experiment with AI applications and scale up as needed without significant financial risks.

Security and Compliance

Ensuring robust security measures and compliance with regulations is essential for safeguarding sensitive data in AI hosting environments. Data protection and adherence to regulations mitigate risks associated with data breaches and compliance failures, maintaining trust and operational integrity.

Secure Environments

Top AI hosting platforms implement strict access control measures, strong encryption, and regular security assessments to safeguard sensitive data from unauthorized access. Leading providers incorporate advanced cybersecurity protocols such as end-to-end encryption and multi-factor authentication to protect their infrastructure from various cyber threats.

The commitment to security among AI hosting providers ensures reliable and safe deployment of AI applications. Secure environments are crucial in AI hosting to protect sensitive data and ensure compliance with regulations, giving businesses peace of mind as they develop and deploy AI solutions.

Compliance Standards

AI hosting providers often comply with frameworks such as SOC 2 and regulations such as HIPAA to meet stringent data security and privacy requirements. Verified compliance helps businesses maintain trust and operational integrity, particularly when they handle sensitive customer or patient data.

Customer Stories and Use Cases

Real-world examples demonstrate how effectively AI hosting solutions can enhance business operations and productivity. These success stories and industry use cases showcase the potential benefits of AI hosting solutions, allowing organizations to significantly increase productivity and return on investment.

Success Stories

Customer stories are a powerful means to showcase the effectiveness of AI hosting solutions in real-world applications. For example, Amey improved employee support with SharePoint agents, enabling real-time troubleshooting and quicker information retrieval. Another notable success story involves a retail company that utilized AI hosting to personalize their customer experience, resulting in a 30% increase in sales.

These success stories illustrate the potential of AI hosting solutions to drive significant improvements in business performance and customer engagement. Leveraging AI empowers businesses to enhance operations and deliver superior services to their customers.

Industry Use Cases

Industry-specific use cases illustrate the transformative potential of AI hosting solutions across various sectors. For example, Cancer Center.AI developed a solution on Microsoft Azure that enabled faster pathology analysis, resulting in quicker diagnoses and fewer errors. Siemens Digital Industries Software created a Teams app using Microsoft Azure OpenAI Service that automates issue reporting, enhancing real-time communication for their product lifecycle management.

The effective use of AI-powered tools and applications leads to operational efficiencies and advancements in service delivery across industries. Deploying AI-powered tools and applications helps businesses improve workflows, reduce errors, and boost overall productivity.

Summary

In summary, the landscape of AI hosting solutions in 2025 is both exciting and full of potential. Choosing the right AI hosting platform is critical for leveraging the full power of AI, ensuring high performance, scalability, and cost-effectiveness. Platforms like Azure AI Studio, AWS Bedrock, GCP Vertex AI, Hugging Face Enterprise, and NVIDIA Triton Inference Server offer diverse features to meet various AI application needs.

As businesses continue to adopt AI technology, the importance of custom models, fine-tuning, high performance, and robust security measures cannot be overstated. By understanding these key aspects and selecting the right hosting solutions, organizations can significantly enhance their operational efficiencies and drive innovation.

Not sure which AI hosting platform is right for your business? At Azumo, we’ve helped companies deploy and integrate AI solutions across all major cloud platforms. Whether you’re building custom models or scaling up, we’ll help you choose the best infrastructure and strategy to meet your needs. Let’s connect and discuss how we can support your AI journey. Check out our work to see how we've helped other businesses succeed.
