
The artificial intelligence landscape has long faced a fundamental challenge: AI models operate in isolation, unable to access the rich ecosystem of external tools, databases, and services that power modern businesses. Each time developers wanted to connect an AI application to external systems, they had to build custom integrations from scratch. This created a complex web of one-off connections, which engineers refer to as the "N×M problem": each of N AI applications required a separate integration with each of M external systems.

At Azumo, we build AI-powered applications and intelligent software systems. Our team has hands-on experience developing custom Model Context Protocol (MCP) server solutions for enterprise use. With about a decade of work in AI and software engineering and more than 350 successful projects behind us, we’ve helped businesses set up scalable MCP server architectures, secure AI integrations, and agent-based workflows.

This real-world experience gives us a deep understanding of MCP’s technical foundations and how it shapes modern AI systems, making us a trusted partner when clarity and experience matter most.

What is Model Context Protocol (MCP)?

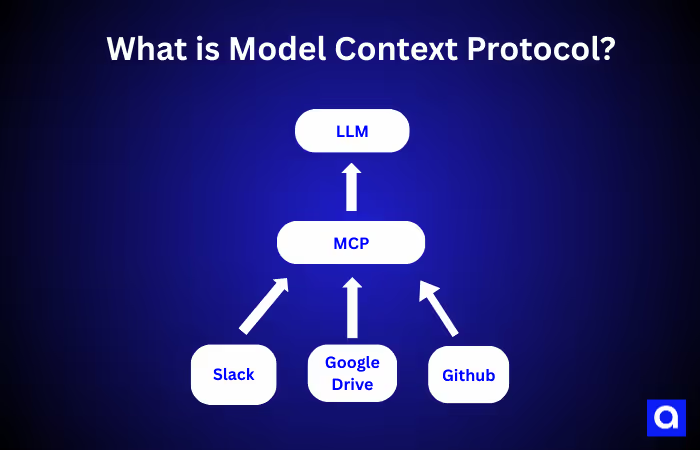

Model Context Protocol (MCP) is an open standard for connecting AI applications, particularly those built on large language models (LLMs), to external tools, data sources, and services. It defines how an application discovers what a connected system offers, requests data from it, and invokes actions on it, so the model can work with relevant, up-to-date context throughout a multi-turn interaction. Think of it as the universal charger for AI applications: just as USB-C has given us one cable that works with many devices, MCP provides a single standard way for AI systems to communicate with external services and databases.

Instead of building dozens of custom connections, developers can now plug their AI applications into a growing ecosystem of standardized connectors. Whether your AI needs to check a calendar, pull data from a spreadsheet, or send a Slack message, MCP makes it possible through one consistent interface.

What Problems Does MCP Solve?

As mentioned above, MCP gives AI models a universal way to connect to business systems, so developers no longer have to build a custom connection for every pairing of AI application and system. Your AI app learns to "speak MCP," and your business systems get MCP connectors. After that, they can all talk to each other without any bespoke integration code.

Imagine it like this. Let's say your company wanted an AI assistant that could help with GitHub code reviews, send Slack messages, and look up customer information. Without MCP, your development team would need to build three completely different connections from scratch. Each one would require:

- Time for specialized coding

- Ongoing maintenance

- Separate security setups

As a result, most AI applications ended up isolated, unable to access the real business data and tools that would make them genuinely useful. Development resources were spent on building plumbing rather than on building useful AI features.
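To make the arithmetic behind the N×M problem concrete, here is a minimal Python sketch. The counts used (4 AI applications, 6 business systems) are made-up illustration values, and the function names are ours. The assumption is that with a shared protocol each application implements one client and each system gets one connector, so the work grows as N + M rather than N × M.

```python
def custom_integrations(num_apps: int, num_systems: int) -> int:
    """Every app needs its own connection to every system: N x M."""
    return num_apps * num_systems


def shared_protocol_integrations(num_apps: int, num_systems: int) -> int:
    """One client per app plus one connector per system: N + M."""
    return num_apps + num_systems


if __name__ == "__main__":
    apps, systems = 4, 6  # hypothetical counts, for illustration only
    print(custom_integrations(apps, systems))           # 24
    print(shared_protocol_integrations(apps, systems))  # 10
```

The gap widens quickly: adding one more system costs one connector under the shared protocol, but one new integration per application without it.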

What’s the Core Architecture of MCP and How Does it Work?

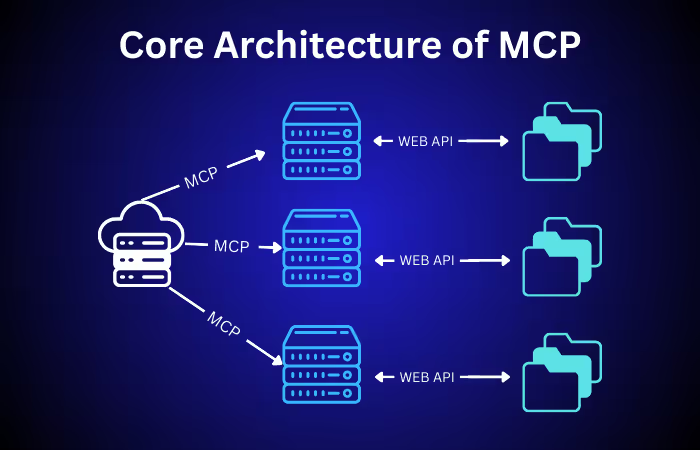

In a typical MCP interaction, the host application collects input from the user, such as text, messages, and metadata, and gathers the relevant data from connected systems. That information is structured into a standard format that the AI model can easily read and understand.

The structured context is then added to the AI's prompt, giving the model the background information it needs. The model uses the current prompt together with that context to produce a more relevant and personalized response.

After the AI replies to your query, the context can be updated with new information, keeping it current for the next interaction.

MCP has four main parts that work together to connect your AI with business systems:

- Hosts

- Clients

- Servers

- Transport Layer

Let's break down each one.

MCP Hosts

Hosts are the primary applications that users interact with, such as AI-enhanced development environments like Cursor or custom enterprise AI platforms. A host coordinates multiple client connections while managing security policies and user permissions.

MCP Clients

Embedded within host applications, clients handle the actual communication with servers. Each client maintains a one-to-one connection with a specific server. The client manages authentication, translates between the host's requirements and the protocol, and handles real-time updates.
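The relationship between hosts, clients, and servers can be sketched as a small data model. This is only an illustration of the one-to-one rule described above, not code from any MCP SDK; the class names and the server names (`github`, `slack`, `database`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Server:
    name: str  # illustrative label such as "github" or "slack"


@dataclass
class Client:
    server: Server  # each client is bound to exactly one server


@dataclass
class Host:
    # One host coordinates many clients, keyed by the server they talk to.
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> Client:
        """Create a dedicated client for this server (one-to-one)."""
        if server.name in self.clients:
            raise ValueError(f"already connected to {server.name}")
        client = Client(server)
        self.clients[server.name] = client
        return client


host = Host()
for server_name in ("github", "slack", "database"):
    host.connect(Server(server_name))

print(sorted(host.clients))  # ['database', 'github', 'slack']
```

Keeping one client per server means a problem with one connection, or a permission granted to it, stays scoped to that single server.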

MCP Servers

MCP Servers are like specialized translators that help your AI communicate with various business tools. Each one focuses on a single system. For example, a GitHub translator helps the AI understand code repositories, a database translator pulls information from your company's data, and a Slack translator sends messages to your team.

Servers can run either locally on your computer, for sensitive data you want to keep private, or in the cloud, for things that need to be shared across your team.
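MCP messages follow JSON-RPC 2.0, and a server advertises its tools through `tools/list` and runs them through `tools/call`. The following is a rough, standard-library-only sketch of how a single-purpose server might dispatch those two requests. It is not the official SDK: the `send_message` tool, its schema, and the helper names are hypothetical, and nothing is actually sent to Slack.

```python
import json

# Hypothetical tool catalogue for a Slack-focused server.
TOOLS = [
    {
        "name": "send_message",
        "description": "Post a message to a channel (stubbed in this sketch).",
        "inputSchema": {
            "type": "object",
            "properties": {
                "channel": {"type": "string"},
                "text": {"type": "string"},
            },
            "required": ["channel", "text"],
        },
    }
]


def _ok(request_id, result: dict) -> dict:
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def _error(request_id, code: int, message: str) -> dict:
    return {"jsonrpc": "2.0", "id": request_id,
            "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Dispatch one JSON-RPC 2.0 request and build the matching response."""
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params") or {}

    if method == "tools/list":
        return _ok(request_id, {"tools": TOOLS})

    if method == "tools/call":
        if params.get("name") != "send_message":
            # -32602 is the JSON-RPC "Invalid params" code.
            return _error(request_id, -32602,
                          f"Unknown tool: {params.get('name')}")
        args = params.get("arguments") or {}
        # A real server would call the Slack API here; this only echoes.
        text = f"(stub) would post to {args.get('channel')}: {args.get('text')}"
        return _ok(request_id,
                   {"content": [{"type": "text", "text": text}],
                    "isError": False})

    # -32601 is the JSON-RPC "Method not found" code.
    return _error(request_id, -32601, f"Method not found: {method}")


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "send_message",
               "arguments": {"channel": "#dev", "text": "Build passed"}},
}
print(json.dumps(handle_request(call), indent=2))
```

Because the server only exposes what is in its catalogue, the AI application can discover its capabilities at runtime instead of having them hard-coded.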

Transport Layer

The transport layer is the communication foundation, and it supports multiple connection methods. For local integrations, MCP uses STDIO (Standard Input/Output) for direct communication with a server running on the same machine. For remote connections, it employs HTTP with Server-Sent Events (SSE) to enable real-time streaming updates.
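For the local case, messages travel over standard input and output as newline-delimited JSON, one JSON-RPC message per line. A minimal sketch of that framing follows; `io.StringIO` stands in for the real pipe between a client and a server process, and the function names are hypothetical.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Serialize one JSON-RPC message as a single line."""
    # json.dumps escapes newlines inside strings, so each message
    # stays on exactly one line.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# In-memory stand-in for the stdin/stdout pipe of a local server process.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "resources/list"})

pipe.seek(0)
received = list(read_messages(pipe))
print([m["method"] for m in received])  # ['tools/list', 'resources/list']
```

With a real server, the client would launch it as a subprocess and attach these same read and write helpers to its standard streams.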

What are the Three Pillars of MCP?

MCP servers can help your AI model in three main ways: by providing contextual data, actionable functions, and predefined interactions.

- Resources for accessing contextual data

- Tools for executing functions and actions

- Prompts for predefined interactions and commands

Think of them as three different types of assistance. Let's discuss each one in more detail.
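As a rough preview of how the three differ in practice, each one has its own pair of JSON-RPC methods: one to list what a server offers and one to use it. The sketch below builds example requests for each. The method names come from the protocol; the `build_request` helper, the resource URI, the tool name, and the prompt name are all hypothetical.

```python
from typing import Optional

# Each pillar maps to a (discover, use) pair of JSON-RPC methods.
PRIMITIVES = {
    "resources": ("resources/list", "resources/read"),  # contextual data
    "tools": ("tools/list", "tools/call"),              # executable actions
    "prompts": ("prompts/list", "prompts/get"),         # predefined templates
}


def build_request(request_id: int, primitive: str, action: str,
                  params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request; action is 'list' or 'use'."""
    list_method, use_method = PRIMITIVES[primitive]
    if action not in ("list", "use"):
        raise ValueError("action must be 'list' or 'use'")
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": list_method if action == "list" else use_method,
    }
    if params is not None:
        request["params"] = params
    return request


# Read a piece of data (hypothetical URI).
read_report = build_request(1, "resources", "use",
                            {"uri": "file:///reports/q3.csv"})
# Run an action (hypothetical tool name and arguments).
post_message = build_request(2, "tools", "use",
                             {"name": "send_message",
                              "arguments": {"channel": "#dev", "text": "hi"}})
# Fetch a predefined prompt template (hypothetical prompt name).
get_prompt = build_request(3, "prompts", "use", {"name": "code_review"})

for req in (read_report, post_message, get_prompt):
    print(req["method"])
```
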
