What is Agentic RAG? The Future of Autonomous AI Explained

If you've been keeping up with the AI community recently, you know there's something peculiar going on. Do you remember when everyone was making a fuss about RAG (Retrieval-Augmented Generation) as if it were the best thing since sliced bread? Well, buckle up, because there's a new kid on the block that makes classic RAG look like a flip phone next to a smartphone.

Let me walk you through exactly what is changing and why it matters to anyone building or using AI systems.

The Agentic RAG Evolution Nobody Saw Coming

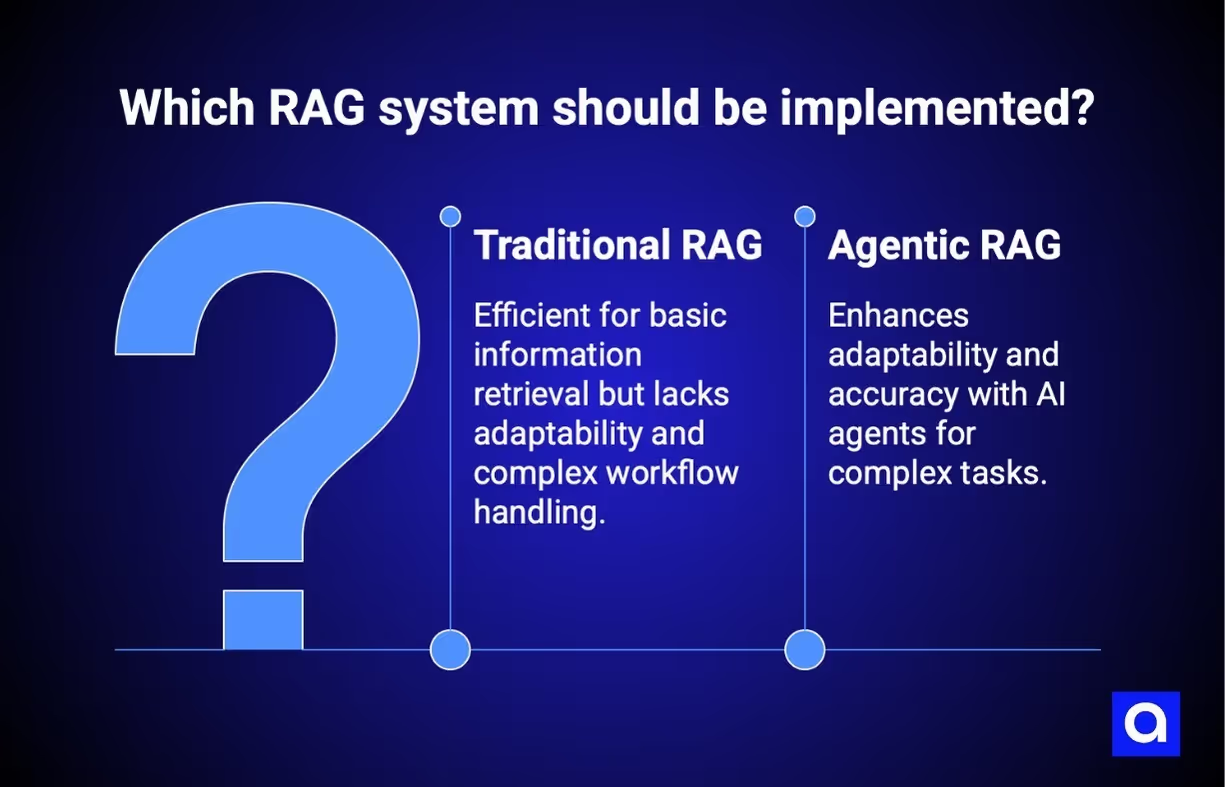

Here's the thing about traditional RAG that no one really wants to admit: it's basically a very smart search engine glued to a language model. You ask it a question, it searches through documents, pulls out relevant information, and gives you an answer. Pretty slick, I'd say. But here is where things become fascinating.

According to IBM, Agentic RAG systems add AI agents to the RAG pipeline to increase adaptability and accuracy. Think of it this way: traditional RAG is like having a brilliant librarian who can find any book you need. Agentic RAG? That's like having a whole research team that not only finds the books but also reads them, cross-references them, validates the information, and then comes back to you with exactly what you need to know.

The difference is mind-blowing when you see it in action. While traditional RAG systems allow LLMs to conduct basic information retrieval, agentic RAG allows those same models to conduct information retrieval from multiple sources and handle way more complex workflows. We're talking about AI that can actually think through problems, not just retrieve answers.

So What Exactly Makes Agentic RAG Different?

Let me break this down in a way that finally makes it click. An agentic RAG system, according to GetStream, is made up of four main components that work together:

First, you've got AI agents that actually reason. They're not just search machines; they can work through problems step by step. Then there are the multiple retrieval tools and sources they have access to. Need to search scholarly documents, review current data, and do calculations all at once? No problem.

The system also includes dynamic query planning and routing. Instead of following a fixed path, it figures out the best approach for each specific question. And here's my favorite part: self-validation and refinement mechanisms. The system actually checks its own work and fixes mistakes before giving you an answer.

Daily Dose of DS puts it perfectly: RAG systems may provide relevant context, but don't reason through complex queries. If a query requires multiple retrieval steps, traditional RAG falls short. There's little adaptability. The LLM can't modify its strategy based on the problem at hand.
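
To make the "multiple retrieval steps" point concrete, here is a minimal sketch of a two-hop lookup. Everything in it is an assumption for illustration: the tiny `KNOWLEDGE_BASE`, the product and company names, and the `retrieve` helper are all made up, and a real system would use a vector store and an LLM rather than a dictionary.

```python
from typing import Optional

# Toy knowledge base; every entry is made up for illustration.
KNOWLEDGE_BASE = {
    ("maker_of", "WidgetPro"): "Acme Corp",
    ("ceo_of", "Acme Corp"): "Jane Doe",
}


def retrieve(relation: str, entity: str) -> Optional[str]:
    """One retrieval step: a single lookup that returns a single fact."""
    return KNOWLEDGE_BASE.get((relation, entity))


def single_step_answer(product: str) -> Optional[str]:
    """Traditional-RAG stand-in: one retrieval keyed on the original question."""
    # No fact links a product directly to a CEO, so this lookup comes back empty.
    return retrieve("ceo_of", product)


def multi_step_answer(product: str) -> Optional[str]:
    """Agentic stand-in: chain retrievals, feeding each result into the next."""
    company = retrieve("maker_of", product)  # hop 1: product -> company
    if company is None:
        return None
    return retrieve("ceo_of", company)       # hop 2: company -> CEO


print(single_step_answer("WidgetPro"))  # None
print(multi_step_answer("WidgetPro"))   # Jane Doe
```

The single-pass version fails because no single stored fact answers the question. The chained version succeeds only because the second lookup is built from the result of the first.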

The Real-World Difference This Makes

Let's say you're trying to scan market trends for your business. With traditional RAG, you'd ask a question, and it'd query your database and come back with an answer based on what it'd found. Simple query, simple response.

But if you need to compare market data from various regions, factor in seasonal fluctuations, check on competitors' prices, and then forecast? That kind of multi-step thinking would prove a hassle for standard RAG. For processes that involve comparing two or more sets of data or making forecasts from complex inputs, standard RAG falls behind, according to Medium's DataJournal.

This is exactly what Agentic RAG is made for. Unlike traditional RAG, which simply retrieves and summarizes information, Agentic RAG empowers AI to act as an intelligent collaborator. It can actually reason, compare data, and make decisions in real time.

How Agentic RAG Actually Works Under the Hood

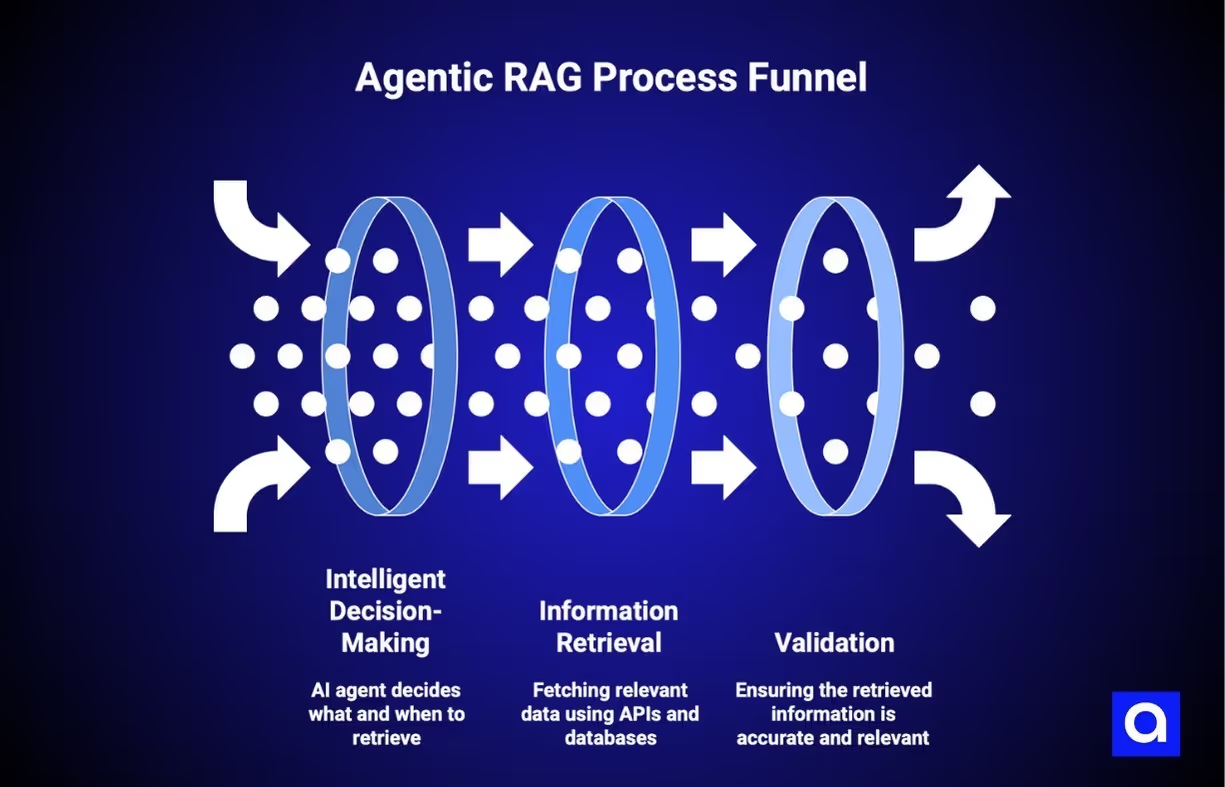

Now, if you're wondering how all this magic happens, let's peek behind the curtain. Unlike traditional models that rely on static, pre-trained knowledge, agentic RAG dynamically accesses real-time data. It uses advanced tools like APIs, databases, and knowledge graphs to fetch the most relevant and up-to-date information.

The key difference in the process is decision-making. While standard RAG retrieves information and passes it directly to an LLM, Agentic RAG allows the AI agent to intelligently decide what to retrieve, when to retrieve it, and how to use it in multi-step reasoning.

But here's what really sets it apart: validation. Agentic RAG doesn't just find information; it finds the right information, validates it, and double-checks that it actually answers your query. Instead of hoping your retrieval system surfaces the right documents, you have intelligent agents actively working to deliver accurate, relevant results.
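
One way to picture that validation step is a check that sits between retrieval and the answer. The sketch below is a deliberately crude stand-in: it scores passages by keyword overlap, whereas a real agent would more likely ask an LLM to judge relevance. The `STOPWORDS` set, the `0.5` threshold, and all function names are assumptions chosen for the example.

```python
import re
from typing import List, Optional

STOPWORDS = {"a", "an", "the", "is", "are", "for", "of", "to",
             "what", "how", "do", "i", "my"}


def keywords(text: str) -> set:
    """Lowercase word tokens with common filler words removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def support_score(question: str, passage: str) -> float:
    """Fraction of the question's keywords that also appear in the passage."""
    wanted = keywords(question)
    if not wanted:
        return 0.0
    return len(wanted & keywords(passage)) / len(wanted)


def validated_retrieve(question: str, passages: List[str],
                       threshold: float = 0.5) -> Optional[str]:
    """Return the best-supported passage, or None if nothing passes the check."""
    if not passages:
        return None
    best = max(passages, key=lambda p: support_score(question, p))
    return best if support_score(question, best) >= threshold else None


PASSAGES = [
    "Annual plans have a 30-day refund window.",
    "Our office is closed on public holidays.",
]
print(validated_retrieve("What is the refund window for annual plans?", PASSAGES))
print(validated_retrieve("How do I reset my password?", PASSAGES))  # None
```

Returning `None` instead of the "least bad" passage is the point: it gives the agent a signal to reformulate the query or try another source rather than answer from irrelevant context.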

The Agentic RAG Architecture That Makes It All Possible

Let's talk about how these systems are actually built. Here are the core components of an AI agent: you need an LLM with a specific role and task, memory (both short-term and long-term), planning capabilities (like reflection, self-criticism, and query routing), and tools (calculators, web search, you name it).
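
Those four pieces map fairly naturally onto a small data structure. This is only a sketch under assumptions: the `Agent` class, its field names, the keyword-based `plan` method, and the toy `add` tool are all hypothetical, and the LLM itself is left out so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    role: str  # the LLM's role and task description
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    short_term_memory: List[str] = field(default_factory=list)      # current task only
    long_term_memory: Dict[str, str] = field(default_factory=dict)  # kept across tasks

    def plan(self, task: str) -> List[str]:
        """Planning stand-in: list the tools whose names appear in the task text."""
        return [name for name in self.tools if name in task.lower()]

    def use_tool(self, name: str, argument: str) -> str:
        """Call a tool and record the result in short-term memory."""
        result = self.tools[name](argument)
        self.short_term_memory.append(f"{name}({argument}) -> {result}")
        return result


def add(expression: str) -> str:
    """Toy calculator tool: sums integers separated by '+'."""
    return str(sum(int(part) for part in expression.split("+")))


agent = Agent(role="Answer finance questions using the tools provided",
              tools={"add": add})
print(agent.plan("Please add the two quarterly totals"))  # ['add']
print(agent.use_tool("add", "2+3"))                       # 5
print(agent.short_term_memory)                            # ['add(2+3) -> 5']
```

In a real agent, `plan` would be an LLM call that can reflect on earlier steps, and long-term memory would typically live in a database rather than a dictionary.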

The beauty of agentic RAG design is the flexibility. In its simplest form, agentic RAG is a router. You have at least two external knowledge sources, and the agent decides which one to retrieve more context from. But for more complex scenarios? You can have a high-level master agent that coordinates retrieval across many specialist retrieval agents. It is comparable to having a project manager overseeing a team of specialists.
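
The router form is small enough to sketch in a few lines. The two sources, their canned responses, and the `FRESHNESS_WORDS` rule are all assumptions for illustration; in practice the routing decision would come from an LLM call rather than a keyword match.

```python
import re
from typing import Callable, Dict, Tuple


# Two hypothetical knowledge sources, each returning canned context.
def search_internal_docs(question: str) -> str:
    return "internal docs: SSO is configured in the admin console."


def search_web(question: str) -> str:
    return "web: release notes published this week."


SOURCES: Dict[str, Callable[[str], str]] = {
    "docs": search_internal_docs,
    "web": search_web,
}

FRESHNESS_WORDS = {"latest", "today", "current", "news", "recent"}


def route(question: str) -> str:
    """Choose a source name. A keyword rule stands in for the agent's LLM call."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return "web" if words & FRESHNESS_WORDS else "docs"


def retrieve(question: str) -> Tuple[str, str]:
    """Route the question, then fetch context from the chosen source only."""
    source = route(question)
    return source, SOURCES[source](question)


print(retrieve("How do I configure SSO?"))
print(retrieve("What is the latest release?"))
```

The master-agent pattern extends the same idea: instead of returning one source name, the coordinator would hand sub-questions to several specialist agents and merge what they return.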

One of the most popular approaches is the ReAct framework. ReAct stands for Reason + Act, and it's just what the name indicates. The agent thinks through the problem, takes an action, observes the result, and then decides what to do next. It can handle sequential multi-part queries and keep state in memory by combining routing, query planning, and tool use within a single agent.
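
The reason, act, observe cycle can be sketched as a short loop. To keep it runnable without a model, the `policy` function below is a scripted stand-in for the LLM, and the `lookup` and `multiply` tools, the price data, and the step budget are all assumptions made up for the example.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; each takes and returns a string.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda key: {"unit_price": "20"}[key],
    "multiply": lambda pair: str(int(pair.split(",")[0]) * int(pair.split(",")[1])),
}

Step = Tuple[str, str, str, str]  # (thought, action, action_input, observation)


def policy(question: str, history: List[Step]) -> Tuple[str, str, str]:
    """Scripted stand-in for the LLM: returns (thought, action, action_input)."""
    if not history:
        return ("I need the unit price first.", "lookup", "unit_price")
    if len(history) == 1:
        price = history[0][3]  # observation from the first step
        return ("Now multiply the price by the quantity.", "multiply", f"{price},3")
    return ("I have the total.", "finish", history[-1][3])


def react(question: str, max_steps: int = 5) -> Tuple[str, List[Step]]:
    """Run the loop until the policy finishes or the step budget runs out."""
    history: List[Step] = []
    for _ in range(max_steps):
        thought, action, action_input = policy(question, history)  # reason
        if action == "finish":
            return action_input, history
        observation = TOOLS[action](action_input)                   # act
        history.append((thought, action, action_input, observation))  # observe
    raise RuntimeError("step budget exhausted before reaching an answer")


answer, trace = react("What do 3 units cost?")
print(answer)      # 60
print(len(trace))  # 2
```

The `history` list is the state the agent carries between steps, and the `max_steps` cap is a simple guard against a policy that never decides to finish.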

Real Companies, Real Results

This isn't just theoretical stuff. Major companies are already using agentic RAG to solve real problems. Replit released an agent that helps developers build and debug software. Microsoft announced copilots that work alongside users and suggest ways to complete tasks.

The applications are everywhere. Analytics Vidhya describes how, while traditional RAG works well for basic Q&A and research, Agentic RAG is better suited for dynamic, data-intensive applications like real-time analysis and enterprise systems. We're seeing it transform healthcare with complex patient data analysis, revolutionize finance with real-time market monitoring, and supercharge customer service with intelligent support systems.

Leading Agentic RAG Development Partners

While some companies build their own Agentic RAG systems in-house, many organizations choose to work with specialized development partners. These companies bring deep expertise in multi-agent architectures, routing, validation, and reasoning frameworks, which helps teams move faster from concept to deployment.

1. Azumo

Core Offering: Azumo builds custom Agentic RAG solutions with a focus on nearshore software development.

Key Capabilities:

- Designs and implements multi-agent RAG architectures with adaptive routing.

- Integrates retrieval, reasoning, and real-time decision-making into enterprise systems.

- Experienced in data-heavy industries such as finance, healthcare, and e-learning.

Azumo combines technical depth in LLM engineering with flexible nearshore delivery, ensuring close collaboration across time zones.

Ideal For: Companies seeking a reliable nearshore partner to build custom, end-to-end Agentic RAG applications.

2. Nuclia

Core Offering: Nuclia positions itself as “The #1 Agentic RAG-as-a-Service company” that indexes and retrieves data from both internal and external sources.

Key Capabilities:

- Provides automated ingestion and vectorization of unstructured data (PDFs, docs, cloud sources).

- Supports retrieval and reasoning across multi-agent pipelines.

- Enables organizations to connect heterogeneous knowledge bases with minimal setup.

Its RAG-as-a-Service platform abstracts away infrastructure, letting teams focus on intelligent query and reasoning design.

Ideal For: Teams that need a ready-to-use, cloud-hosted Agentic RAG platform to connect multiple data silos quickly.

3. Progress

Core Offering: Progress offers a comprehensive Agentic RAG-as-a-Service platform with flexible pipelines.

Key Capabilities:

- Allows no-code fine-tuning of Agentic RAG workflows.

- Supports dynamic switching between different large language models.

- Provides integrated validation and monitoring tools.

It is known for its developer-friendly interface that enables fast experimentation and model management without deep ML expertise.

Ideal For: Enterprises looking for a managed environment to customize and deploy multi-agent RAG systems at scale.

4. First Line Software

Core Offering: First Line Software delivers Agentic RAG implementation services that focus on adaptable and operationally efficient solutions.

Key Capabilities:

- Builds modular RAG pipelines using frameworks like LangChain and LlamaIndex.

- Specializes in real-time data processing and AI workflow automation.

- Offers domain expertise across healthcare, logistics, and enterprise software.

This approach emphasizes robust software engineering practices that align AI innovation with enterprise-grade reliability.

Ideal For: Organizations wanting a technically strong implementation partner for integrating RAG into existing business systems.

5. Ment Tech Labs

Core Offering: Ment Tech Labs develops custom RAG systems that blend retrieval, reasoning, and analytics for content and customer support applications.

Key Capabilities:

- Designs hybrid retrieval pipelines that combine structured and unstructured data.

- Implements validation loops to improve output accuracy over time.

- Focuses on customer service, knowledge management, and insight automation.

Ment Tech Labs offers highly customized RAG deployments built around client data structures and domain needs.

Ideal For: Businesses seeking specialized RAG development for improving content generation, search, and support automation.

Choosing the right development partner depends on your technical needs, industry, and project scale. These companies help accelerate the path from idea to production, bringing Agentic RAG capabilities to life without the complexity of building from scratch.

Building Your Own Agentic RAG System

If you're thinking about implementing this yourself, you've got two main paths forward. The first is the function calling approach. In June 2023, OpenAI released function calling for gpt-3.5-turbo and gpt-4, enabling these models to connect more reliably with external tools and APIs.
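
Here is roughly what the function calling path looks like from the application side. The tool definition follows the name, description, and JSON Schema `parameters` layout used for function definitions, but the API request itself is left out: the model's reply is hand-written below so the sketch runs offline. The `search_docs` function, its corpus, and the `dispatch` helper are all hypothetical.

```python
import json

# Tool definition: a name, a description, and JSON Schema for the arguments.
SEARCH_DOCS_TOOL = {
    "name": "search_docs",
    "description": "Search the internal knowledge base and return matching passages.",
    "parameters": {
        "type": "object",
        "properties": {
            "query": {"type": "string", "description": "Search terms"},
            "top_k": {"type": "integer", "description": "Maximum passages to return"},
        },
        "required": ["query"],
    },
}

CORPUS = [
    "Refunds are issued within 30 days.",
    "SSO is configured in the admin console.",
]


def search_docs(query: str, top_k: int = 2) -> list:
    """Hypothetical retrieval tool: case-insensitive substring match."""
    hits = [passage for passage in CORPUS if query.lower() in passage.lower()]
    return hits[:top_k]


LOCAL_FUNCTIONS = {"search_docs": search_docs}


def dispatch(function_call: dict) -> list:
    """Run the function the model asked for, decoding its JSON-encoded arguments."""
    func = LOCAL_FUNCTIONS[function_call["name"]]
    kwargs = json.loads(function_call["arguments"])
    return func(**kwargs)


# Hand-written stand-in for a model reply that requests a tool call.
model_reply = {"name": "search_docs", "arguments": '{"query": "refund"}'}
print(dispatch(model_reply))  # ['Refunds are issued within 30 days.']
```

The model never runs your code. It only names a function and supplies arguments, and your application decides whether and how to execute the call before passing the result back as context.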

The second path involves using agent frameworks. There's a whole ecosystem out there:

- LangChain/LangGraph offers LCEL and built-in tools

- LlamaIndex provides QueryEngineTool as a template for retrieval tools

- CrewAI is built for constructing multi-agent systems

- DSPy supports ReAct agents and Avatar optimization

- Letta focuses on agent systems that rely heavily on memory

Each framework has its own strengths, and the right choice really does depend on your use case and technical requirements.

What Are The Benefits Of Agentic RAG?

Let's talk about why companies are jumping on this bandwagon. IBM highlights the flexibility factor: Agentic RAG applications pull data from multiple external knowledge bases and allow for external tool use, while standard RAG pipelines connect an LLM to a single external dataset.

Salesforce emphasizes the accuracy improvements: more accurate and contextually relevant responses, reduced hallucinations, and better handling of complex queries. When your AI can actually validate its own answers before presenting them, you're looking at a whole different level of reliability.

And then there's the reasoning capability. While traditional RAG focuses on retrieving and augmenting information, Agentic RAG integrates the retrieval process with AI Agent capabilities. The system actively decides how to use information, not just whether to retrieve it.

The Agentic RAG Challenges We Need to Talk About

Research on arXiv explains that multi-agent architectures bring their own challenges, including coordination complexity, scalability, and latency, all of which must be resolved for smooth deployment. These systems are far more complex than standard RAG, and that complexity comes with its own set of problems.

Security is another big concern. OpenXcell points out that since agentic RAG deals with huge sets of data, it raises concerns over data biases, AI misuse, and misrepresentation. You need additional layers of security like data encryption, restricted and authorized access, and compliance with industry regulations.

And let's not forget about the operational side. Weaviate reminds us that using an AI agent for a subtask means relying on an LLM to do that task, which comes with added latency and potential unreliability. Depending on the reasoning capabilities of the LLM, an agent may fail to complete a task sufficiently or even at all.
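
One common way to contain that unreliability is to cap how often the agent may retry and keep a simpler path in reserve. The sketch below is one possible shape, not a prescribed pattern: `AgentError`, `run_with_fallback`, and both stand-in functions are hypothetical names invented for the example.

```python
from typing import Callable, Tuple


class AgentError(Exception):
    """Raised by the hypothetical agent step when it cannot finish its task."""


def run_with_fallback(
    agent_step: Callable[[], str],
    fallback: Callable[[], str],
    max_attempts: int = 2,
) -> Tuple[str, str]:
    """Try the agent a limited number of times, then fall back to a simpler path."""
    for _ in range(max_attempts):
        try:
            return agent_step(), "agent"
        except AgentError:
            continue  # each retry adds latency, so the attempt budget stays small
    return fallback(), "fallback"


def failing_agent() -> str:
    """Stand-in for an agent that never completes its subtask."""
    raise AgentError("agent did not complete the subtask")


def plain_retrieval() -> str:
    """Stand-in for a standard single-pass RAG answer."""
    return "answer from a single retrieval pass"


print(run_with_fallback(failing_agent, plain_retrieval))
# ('answer from a single retrieval pass', 'fallback')
```

Returning which path produced the answer makes it easy to log how often the agent actually succeeds, which is useful when deciding whether the extra latency is paying for itself.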

Where This Is All Heading

The future of agentic RAG looks pretty exciting. According to Analytics Vidhya, we are seeing next-generation architectures like Speculative RAG and Self-Route RAG, each designed to tackle a specific problem such as unnecessary retrievals, reasoning errors, or computational inefficiency.

The field is evolving fast as businesses apply these technologies for advanced decision-making and self-managed operations. But the real game-changer might be what arXiv research highlights: LLMs have made AI capable of generating human-like text, but their reliance on static training data limits their ability to respond to dynamic, real-time queries. Agentic RAG works around this inherent constraint.

Making Sense of It All

So what does all this mean for you? DigitalOcean puts it well: Agentic RAG innovates the retrieval augmentation concept by broadening it from static, single-turn interactions to the multi-step context of autonomous agents.

Moveworks emphasizes that implementing an agentic RAG system can have a major impact on an organization's ability to retrieve and generate data, supporting decision-making and automating workflows. But the implementation has to be strategic and intentional.

Bottom line? Agentic RAG represents a next-generation leap in information retrieval, offering more sophisticated and autonomous functionality than the original RAG. With the help of AI agents, these systems can process sophisticated questions, adapt to changing information environments, and produce more accurate answers.

Your Next Steps

If you're ready to jump into agentic RAG for your business, start by thinking about your current AI requirements. Do you have sophisticated, multi-step queries that regular RAG cannot handle? Are you dealing with dynamic data that requires real-time validation? Do you need an AI capable of really reasoning through problems and not just fetching answers?

The shift from traditional RAG to agentic RAG is not just a technical development; it is a paradigm shift in how we think about AI-backed information systems. We are shifting from passive retrieval to active intelligence, from one-shot queries to deep chains of reasoning, from static knowledge to dynamic adaptability.

At Azumo, we've been assisting companies in deploying these intelligent apps, supporting them in unlocking the potential of agentic RAG through our nearshore development services. Whether you're looking to overhaul your existing RAG infrastructure or build fresh agentic capabilities from the ground up, the key is to recognize both the potential and the risks of this technology.

The future of AI is not just about access to information but about intelligent systems that can think, reason, and act on that information in their own right. And that future has arrived with agentic RAG.
