If you want to build AI-powered applications while keeping costs reasonable and data security tight, open source large language models may be your answer. The open source LLM ecosystem is growing at an unprecedented pace, and its strongest models now rival proprietary platforms like GPT-5.2 and Gemini 3.0 on many tasks.

These models are particularly alluring due to their flexibility. They can be tailored to meet specific business demands, deployed in-house, and used without incurring the substantial fees that commercial AI services often require.

Fine-tuning means taking a model that has already been trained on a very large dataset and training it further on a much smaller, task-specific dataset so that it performs well on that task. It is a standard technique behind many state-of-the-art (SOTA) AI systems today.
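To make the idea concrete, here is a toy sketch in plain Python. It is not how an LLM is actually fine-tuned; it only assumes a hypothetical two-parameter "pretrained" model and a four-example task dataset, and shows the core mechanic: start from existing weights and keep running gradient descent on a small, task-specific dataset.

```python
def mse(w, b, data):
    """Mean squared error of the linear model y = w * x + b on the dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w, b, data, lr=0.05, epochs=500):
    """Continue gradient descent from existing weights on a small dataset."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical "pretrained" weights: close to the task, but not exact.
pretrained_w, pretrained_b = 2.0, 0.0

# Small task-specific dataset that follows y = 2x + 1.
task_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

loss_before = mse(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
loss_after = mse(tuned_w, tuned_b, task_data)

print(f"loss before: {loss_before:.4f}, loss after: {loss_after:.6f}")
```

Because the starting weights are already close, a few hundred cheap updates are enough. Real fine-tuning follows the same pattern with billions of weights and a dedicated training library.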

With that in mind, let's explore the top open source LLMs that you can start fine-tuning today. We will discuss what makes each one suitable for different business applications.

DeepSeek-V3.2 – The Scalable Reasoning Specialist

DeepSeek-V3.2 hit the scene in December 2025, and it's pretty impressive for an open-source large language model. What makes it special is how smart it is about resource management. Think of it like having a team of 64 specialists, but only calling in the 2 most relevant experts for each task. This smart approach allows the model to pack a massive 671 billion parameters, but keep the costs reasonable.
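The "call in only the most relevant specialists" idea can be sketched as top-k routing. This is a minimal illustration in plain Python, assuming 64 experts and 2 selected per token to match the analogy above; the router scores are a made-up stand-in for a learned router, and the real DeepSeek routing scheme may differ in its details.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    # Normalize only over the selected experts so their weights sum to 1.
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))


# Hypothetical router scores for one token (deterministic stand-in values).
num_experts = 64
scores = [math.sin(i * 0.7) for i in range(num_experts)]

selected = route_top_k(scores, k=2)
for expert, weight in selected:
    print(f"expert {expert} handles this token with weight {weight:.3f}")
```

Only the selected experts run for a given token, which is why the compute per token tracks the active parameter count rather than the total.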

Key Features:

- MoE design with 671B total parameters, activating only about 37B per token.

- Strong performance in math, coding, and logical reasoning benchmarks.

- Trained on extensive multilingual and technical datasets.

- Includes instruction-tuned variants for safe, aligned output.

- Released with open weights and full training transparency.

Best For: Developers, researchers, and trading firms seeking high-performance models with open weights for use in multilingual applications, code generation, and advanced problem solving.

Qwen 3.1 (Alibaba Cloud) - The Newest Powerhouse

Launched in late 2025, Qwen 3.1 is Alibaba Cloud's latest open source large language model. This update improves reasoning performance, adds expanded multilingual coverage, and introduces better alignment for instruction-following tasks. Its hybrid Mixture-of-Experts architecture continues to deliver high efficiency while handling complex tasks.

Key Features:

- Multiple size variants from lightweight 0.5B to larger configurations.

- Trained on massive multilingual datasets.

- Strong performance on code and complex reasoning tasks.

- Released under open source licensing.

Best For: Businesses that need multilingual support and complex reasoning capabilities, especially those working with international markets or technical documentation.

Meta LLaMA 4 - The Versatile Giant

Meta's Llama 4 is available as two open-weight Mixture-of-Experts models, Scout and Maverick, making it adaptable to various computational requirements. Both activate about 17B parameters per token: Scout has roughly 109B total parameters across 16 experts, and Maverick has roughly 400B across 128 experts. The weights are free to download under Meta's Llama community license.

Key Features:

- Available as Scout (17B active, about 109B total) and Maverick (17B active, about 400B total).

- Context window of up to 10 million tokens (Scout).

- Strong performance across multiple benchmarks.

- Extensive community support and documentation.

Best For: Companies that need scalable AI solutions, from startups running Scout to enterprises deploying Maverick for multiple complex tasks.

Google Gemma 3 - Efficiency Meets Performance

Google's Gemma 3 is the newest iteration, building on Gemma 2 with improved reasoning, multilingual capabilities, and a larger context window for more complex tasks. It remains highly efficient, delivering performance comparable to much larger models while keeping computational costs lower.

Key Features:

- Available in 1B, 4B, 12B, and 27B parameter sizes

- Open weights under Google's Gemma Terms of Use, which permit commercial use

- Larger context window than Gemma 2

- Optimized for efficiency

Best For: Small to medium businesses that want strong AI performance but have limited computational resources and tight budgets.

Mistral Large 2 - The Multilingual Specialist

Launched in July 2024, Mistral Large 2 has 123 billion parameters and supports dozens of natural languages. According to TechTarget, it also handles more than 80 programming languages, including some fairly obscure ones, which makes it particularly valuable for international development teams.

Key Features:

- 123 billion parameters

- 128K context window

- Support for dozens of natural languages

- Over 80 coding languages supported

Best For: Global companies with multilingual requirements or development teams working across different programming languages and frameworks.

Mixtral-8x22B - The Sparse Expert

This open source large language model employs a Sparse Mixture-of-Experts (SMoE) architecture, which results in a total of 141 billion parameters, but only 39 billion are activated for each input. This method offers the advantage of a large model but requires considerably less computation.
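The saving is easy to quantify with the two figures above. A quick back-of-the-envelope sketch (the function name is just for illustration):

```python
def active_fraction(active_billion, total_billion):
    """Share of the model's parameters used for any single token."""
    return active_billion / total_billion


# Figures from the paragraph above: 39B active out of 141B total.
mixtral_share = active_fraction(39, 141)
print(f"Active per token: {mixtral_share:.1%}")  # prints "Active per token: 27.7%"
```

Note that this ratio describes compute per token, not memory: all 141 billion parameters still have to be loaded, because any expert may be needed for the next token.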

Key Features:

- 39 billion active parameters out of 141 billion total

- 64K token context window

- Apache 2.0 license

- Efficient sparse architecture

Best For: Organizations that need the capabilities of a large model but want lower operating costs and faster response times.

Cohere Command R+ - The RAG Specialist

Cohere's Command R+ is purpose-built for RAG (Retrieval-Augmented Generation) use cases. It enables businesses to integrate their AI more seamlessly with existing knowledge bases and document repositories.
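For readers new to RAG, the pattern itself is simple: retrieve the most relevant passages, then place them in the prompt ahead of the question. The sketch below is a minimal, model-agnostic illustration in plain Python. It does not call Command R+ or any Cohere API; simple word-overlap scoring stands in for the embedding search a production system would use, and the knowledge base and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-frequency Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents,
                    key=lambda d: cosine(q, Counter(tokenize(d))),
                    reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=1):
    """Place the retrieved passages in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")


# Hypothetical knowledge base entries.
knowledge_base = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available by email on weekdays.",
    "Shipping to Canada takes five to seven business days.",
]

prompt = build_prompt("How long do refunds take?", knowledge_base)
print(prompt)
```

The resulting prompt is what you would send to the model. A RAG-tuned model is trained to stay grounded in that supplied context rather than answer from memory.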

Key Features:

- 128K token context window

- Maximum output of 4,096 tokens per response; the 128K context window covers input and output tokens combined

- Fine-tuned for RAG applications

- Robust integration capabilities

Best For: Firms building AI systems that need to access and reason over several existing sources at once, such as documentation, databases, and knowledge management systems.

Falcon 3 12B - The Balanced Choice

The Falcon 3 12B is the latest iteration, improving both efficiency and capabilities over Falcon 2 11B. It continues to support language and vision-language tasks while offering a larger context window and refined performance.

Key Features:

- 12 billion parameters.

- Apache 2.0 License

- Both textual and vision-language variants

- 16K context window

Best For: Companies that require both text and image processing functionalities, like e-commerce platforms, content management systems, or customer service applications.

Grok 2 - The Multimodal Innovator

Created by xAI (Elon Musk's AI company), Grok 2 enhances multimodal capabilities with improved reasoning, text, and vision processing. The context window has been expanded, making it more effective for handling long conversations and complex documents.

Key Features:

- 256K token context window

- Processes multiple forms of information (text, images, and more) at once

- Strong reasoning skills

- Open source availability

Best For: Firms that create apps requiring the processing of visual and textual material, such as document analysis, content moderation, or interactive customer support.

GPT-NeoX-20B - The Community Favorite

The EleutherAI community developed GPT-NeoX-20B, a 20-billion-parameter model trained on the dataset known as The Pile and released under the very permissive Apache 2.0 license. It delivers solid performance and comes with full commercial usage rights.

Key Features:

- 20 billion parameters

- Apache 2.0 license

- Trained on the comprehensive Pile dataset

- Strong community support

Best For: Startups and smaller companies that need reliable AI capabilities with clear licensing terms and active community support for troubleshooting and development.

Yi-1.5-34B – The Chinese-English Dual Powerhouse

Yi-1.5-34B is the latest evolution in the Yi model family from 01.AI, building on the original Yi-34B with improved reasoning, language understanding, and code performance. It has strong bilingual capabilities for both English and Chinese tasks, and it competes with much larger models on benchmarks. Many variants also support extended contexts and chat-style interaction.

Key Features:

- 34 billion parameters with strong bilingual capabilities

- Trained on a diverse English-Chinese dataset

- Competitive results on reasoning and MMLU benchmarks

- Released with open weights for community adaptation

Best For: Teams working in bilingual environments, or anyone looking for a high-performing model for both English and Chinese NLP tasks.

Phi-2 (Microsoft) – The Small Model with Big Accuracy

Released by Microsoft Research, Phi-2 is a compact transformer-based LLM with just 2.7 billion parameters, but don't let its size fool you. It’s been trained on carefully curated synthetic datasets focused on reasoning, math, and code, making it punch far above its weight. With open weights available, Phi-2 is ideal for low-resource environments or quick experimentation.

Key Features:

- Lightweight 2.7B model optimized for reasoning and math.

- Trained using synthetic data techniques for efficient learning.

- Outperforms larger models in specific benchmarks.

- Open weights support research and commercial adaptation.

Best For: Developers and researchers looking for a small, efficient model with strong reasoning ability and minimal compute needs.

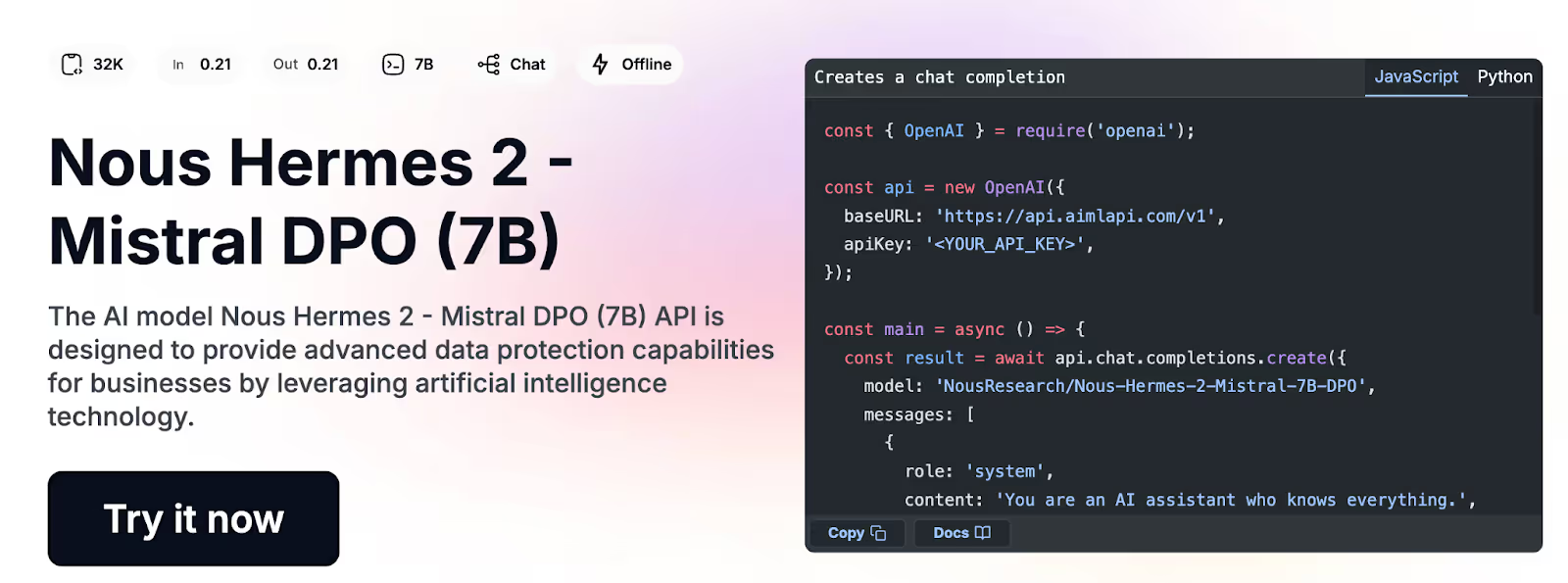

Nous Hermes 2 – The Dialogue-Centric Expert

Nous Hermes 2, which is often used through OpenChat, is a popular community-built LLM fine-tuned for chat, instruction-following, and conversational coherence. Built on top of Mistral or LLaMA architectures, this model is designed to perform exceptionally well in multi-turn dialogue and assistant-style outputs. With open weights, it has become a go-to option for customized chatbot and virtual assistant use cases.

Key Features:

- Optimized for high-quality conversation and multi-turn interactions.

- Fine-tuned on instruction datasets like ShareGPT and UltraChat.

- Community-supported and actively improved.

- Available with open weights for integration and fine-tuning.

Best For: Building custom chatbots, virtual assistants, or testing advanced instruction-following capabilities.

Pythia – The Transparent Research-First Model

Pythia is a family of models developed by EleutherAI, designed with research transparency in mind. The models range from 70M to 12B parameters, all released with open weights, full training data documentation, and reproducible training scripts. Pythia is ideal for academic or exploratory work where understanding how the model was built is as important as what it can do.

Key Features:

- Model suite ranging from 70M to 12B parameters

- Fully documented training process and datasets

- Built for reproducibility and transparency

- Open weights and code available

Best For: Researchers and educators seeking transparent, reproducible models for analysis, benchmarking, or educational use.

Why Fine-Tuning Makes Business Sense

Fine-tuning these open source LLMs offers several compelling advantages over using pre-built AI services. LLM fine-tuning is a supervised learning process that updates the model's weights using your specific dataset, making it much more effective for your particular use cases.

- Data Security and Privacy: When you host the model yourself, you keep control of your data. Nothing has to be sent to a third party, which removes a major source of mishandling risk and makes it easier to meet your industry's data protection requirements.

- Cost Savings: Open models carry no per-use fees or licensing costs, so your main expenses are hardware and engineering time. The money saved can go toward customization and optimization.

- Reduced Vendor Dependency: You are not tied to one AI provider's ecosystem or pricing structure. This flexibility becomes more valuable as your AI requirements grow and change.

How to Start LLM Fine-Tuning

The technical barrier to entry has never been lower. According to Philipp Schmid, you can start by installing PyTorch and the Hugging Face libraries, including TRL, which makes fine-tuning much easier.
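Before any training run, your examples need to be in a shape the training library can read. Here is a minimal sketch in plain Python, assuming the conversational `messages` layout (a list of role and content pairs) that TRL's supervised fine-tuning documentation describes. The helper names, the system prompt, and the sample question-answer pairs are all hypothetical, so check the format against the TRL version you install.

```python
import json


def to_chat_example(question, answer, system_prompt=None):
    """Wrap one question-answer pair in a chat-style 'messages' record."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": question})
    messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


def to_jsonl(pairs, system_prompt=None):
    """Serialize question-answer pairs as JSON Lines, one example per line."""
    return "\n".join(
        json.dumps(to_chat_example(q, a, system_prompt)) for q, a in pairs
    )


# Hypothetical training pairs drawn from a support knowledge base.
support_pairs = [
    ("How do I reset my password?",
     "Open Settings, choose Security, then select Reset Password."),
    ("Can I export my invoices?",
     "Yes. Go to Billing and click Export to download a CSV file."),
]

jsonl_text = to_jsonl(support_pairs,
                      system_prompt="You are a concise support assistant.")
print(jsonl_text)
```

Writing `jsonl_text` to a file gives you a dataset that the Hugging Face `datasets` library can load with its JSON loader. A few hundred carefully reviewed examples in this form are a reasonable starting point for a first experiment.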

Many of the smaller models can run on consumer hardware, and as Zapier notes, you can download models like LLaMA 3 and Gemma 2 from Hugging Face and run them on your own devices.
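Whether a given model fits on your machine is mostly a question of memory. As a rough sketch, the weights alone need about (number of parameters) × (bits per parameter) ÷ 8 bytes. The estimate below counts 1 GB as one billion bytes and deliberately ignores activations, the attention cache, and framework overhead, so treat it as a lower bound. The function name is hypothetical; the model sizes come from the list above.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


# Parameter counts taken from the model descriptions in this article.
models = {"Phi-2": 2.7, "GPT-NeoX-20B": 20, "Yi-1.5-34B": 34}

for name, size in models.items():
    estimates = ", ".join(
        f"{bits}-bit: {weight_memory_gb(size, bits):.1f} GB"
        for bits in (16, 8, 4)
    )
    print(f"{name}: {estimates}")
```

The arithmetic shows why quantization matters for local use: dropping from 16-bit to 4-bit weights cuts the footprint to a quarter, which can be the difference between needing a server and fitting on a single consumer GPU.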

Looking Ahead: The Future of Open Source AI

These open source LLMs aren’t just cost-effective alternatives. They’re increasingly used by AI development companies that need flexible, customizable models without long-term vendor lock-in. Independent evaluations and real-world deployments show that models like Vicuna can deliver production-ready quality suitable for enterprise use cases, without the overhead of closed systems.

The shift toward sparse expert architectures is especially promising. Instead of relying on a single massive model, newer LLM designs activate specialized components based on the task at hand. This approach allows AI development companies to build systems that are more efficient, easier to scale, and better aligned with specific application needs.

Ready to Build Your Intelligent Applications?

Companies can take clear advantage of LLMs that are available without restrictive licensing. For most teams working with generative AI, open source LLMs are a legitimate foundation for intelligent applications. Looking closely at the characteristics of each candidate model remains vital.

Choosing a suitable model is crucial, however, for a company to work with AI effectively. The key is to select the right model for specific requirements and to have the technical know-how to implement and then fine-tune it to behave as desired. At Azumo, we work with companies to identify the most suitable AI solutions for their needs and provide the development expertise to make those solutions real.

Ready to explore the world of open source LLMs and how they can transform your business operations? The next step is a conversation, one that is less about us and more about you and your specific requirements. Let us help you build the intelligent applications your business needs.
