The landscape of real-time applications has undergone substantial transformation in recent years. Companies across industries are integrating voice AI agents and real-time video capabilities into their core products, with LiveKit Cloud supporting over 100,000 developers collectively handling over 3 billion calls per year. This growth reflects a fundamental shift in how users expect to interact with applications—demanding seamless, low-latency communication experiences that work reliably at scale.

LiveKit addresses these requirements through a comprehensive, open-source platform that eliminates many of the traditional barriers to building real-time communication features. Rather than managing the complexities of WebRTC infrastructure, developers can focus on creating differentiated user experiences while relying on proven, scalable architecture. At Azumo, we’ve helped clients integrate LiveKit into AI-powered chatbots, telehealth platforms, and real-time customer service tools. This guide reflects our hands-on experience implementing scalable voice and video features using LiveKit’s open-source and cloud-hosted offerings.

Key Takeaways

- Open-Source Flexibility: LiveKit operates under Apache License 2.0, eliminating vendor lock-in while providing enterprise-grade features including end-to-end encryption and HIPAA compliance

- Proven Scalability: The platform supports over 100,000 developers handling more than 3 billion calls annually, with an SFU architecture that scales horizontally to 100,000 simultaneous participants per session

- AI-Native Framework: LiveKit Agents 1.0 offers production-ready voice AI capabilities, including context-aware turn detection with a reported 85% true positive rate in avoiding premature interruptions, and integrates with major AI providers such as OpenAI and Deepgram

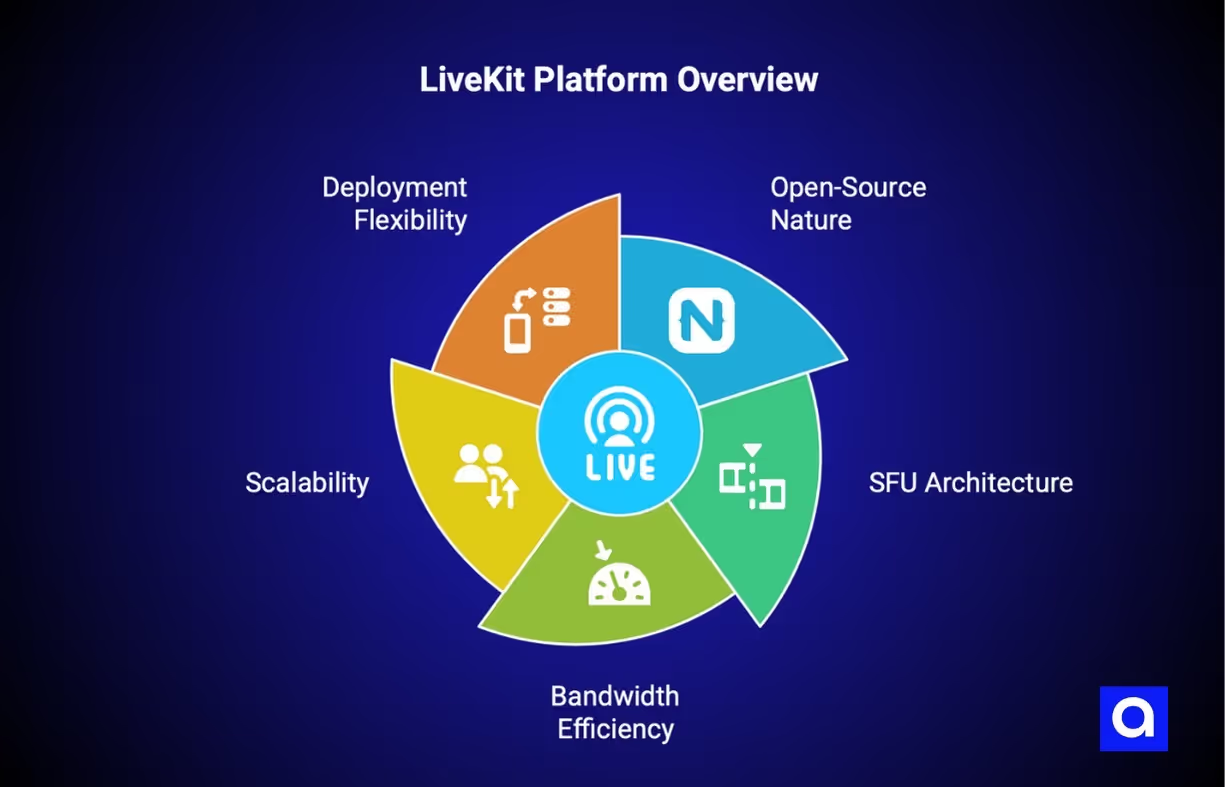

- Flexible Deployment: Choose between self-hosted infrastructure or LiveKit Cloud managed service with identical APIs, enabling smooth transitions as requirements evolve

- Cost-Effective Infrastructure: Eliminates licensing fees while providing enterprise features, with predictable per-minute pricing for managed services and full control over data residency

- Comprehensive SDK Support: Consistent APIs across web, iOS, Android, Flutter, and React Native platforms, plus telephony integration for phone-based voice agents

Open Source Foundation with Enterprise Capabilities

LiveKit operates as a fully open-source platform under the Apache License 2.0, providing developers with complete transparency and customization capabilities without vendor lock-in. The platform's core media server, written in Go using the Pion WebRTC library, implements a Selective Forwarding Unit (SFU) architecture that efficiently routes media streams through central servers rather than requiring peer-to-peer connections between all participants.

This architectural approach delivers measurable advantages in bandwidth utilization and scalability. In a peer-to-peer mesh, each participant must upload a separate copy of their stream to every other participant, so per-participant upload grows linearly with the number of participants and total upload across the session grows quadratically. In the SFU model, each participant uploads a single stream and the server handles distribution, reducing client-side upload requirements and enabling reliable connections even on mobile networks with variable quality.
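To make the difference concrete, the sketch below counts upload streams under each topology. It is a simplified model that assumes one fixed-bitrate video stream per participant (1.5 Mbps is an arbitrary illustrative figure) and ignores downlink traffic, simulcast layers, and audio. The function names are our own, not part of any LiveKit API.

```python
def uplink_streams_per_participant(n: int, topology: str) -> int:
    """Number of outgoing media streams each participant must send."""
    if n < 1:
        raise ValueError("need at least one participant")
    if topology == "mesh":
        return n - 1  # one copy to every other peer
    if topology == "sfu":
        return 1      # a single upload to the server
    raise ValueError(f"unknown topology: {topology}")


def total_uplink_mbps(n: int, topology: str, stream_mbps: float) -> float:
    """Aggregate client upload bandwidth across the whole session."""
    return n * uplink_streams_per_participant(n, topology) * stream_mbps


if __name__ == "__main__":
    # 1.5 Mbps per video stream is an assumed, illustrative bitrate.
    for n in (2, 5, 10):
        mesh = total_uplink_mbps(n, "mesh", 1.5)
        sfu = total_uplink_mbps(n, "sfu", 1.5)
        print(f"{n:>2} participants: mesh {mesh:6.1f} Mbps, sfu {sfu:6.1f} Mbps")
```

Under these assumptions, ten participants in a mesh upload 135 Mbps in aggregate versus 15 Mbps through an SFU. An SFU does not shrink the download side: each participant still receives one stream per remote participant it subscribes to.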

The platform supports both self-hosted deployments and LiveKit Cloud, a managed service that provides global infrastructure without requiring changes to application code. This flexibility allows organizations to choose deployment models that align with their specific requirements, from bare metal installations to Kubernetes clusters.

Scalable Architecture for Real-World Demands

LiveKit's horizontal scaling capabilities address one of the most significant challenges in real-time communication: handling unpredictable traffic patterns. The system can run multiple SFU nodes with identical configurations and uses Redis to coordinate routing, ensuring that all participants in the same room connect to the same node. This approach allows capacity expansion through standard infrastructure scaling techniques rather than specialized media server configurations.
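The routing rule described above can be modeled as a shared room-to-node map. In this hypothetical sketch a Python dict stands in for Redis, and placing each new room on the least-loaded node is our own assumption for illustration; LiveKit's actual node-selection logic is not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass
class RoomRouter:
    """Pins each room to one SFU node so all of its participants meet there.

    A plain dict stands in for Redis; in a real multi-node setup this mapping
    would live in shared storage that every node can read.
    """
    nodes: list[str]
    room_to_node: dict[str, str] = field(default_factory=dict)
    load: dict[str, int] = field(default_factory=dict)

    def node_for(self, room: str) -> str:
        if room in self.room_to_node:
            # Existing room: late joiners must land on the same node.
            node = self.room_to_node[room]
        else:
            # New room: place it on the currently least-loaded node (assumed policy).
            node = min(self.nodes, key=lambda n: self.load.get(n, 0))
            self.room_to_node[room] = node
        self.load[node] = self.load.get(node, 0) + 1  # count one more participant
        return node


if __name__ == "__main__":
    router = RoomRouter(["sfu-a", "sfu-b"])
    print(router.node_for("standup"))  # first participant creates the room
    print(router.node_for("standup"))  # second participant joins the same node
    print(router.node_for("demo"))     # a new room goes to the emptier node
```

The key property is that every lookup for the same room returns the same node, so participants in one session are never split across servers.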

In LiveKit Cloud deployments, multiple SFU instances form a distributed mesh, allowing media servers to discover and relay content between regions. Participants automatically connect to the nearest instance, minimizing latency and packet loss, while the mesh handles inter-region relay over optimized networks. This architecture bypasses traditional single-server limitations found in many SFU and MCU implementations.
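A minimal model of the nearest-region behavior, assuming each client has measured round-trip times to a set of candidate regions (the region names and RTT values below are hypothetical). Each participant is assigned to its lowest-latency region, and every region that ends up hosting a participant must exchange the room's media with the others over the mesh.

```python
def plan_session(rtt_ms: dict[str, dict[str, float]]) -> tuple[dict[str, str], set[str]]:
    """Assign participants to their nearest region and list regions that must relay.

    rtt_ms maps participant -> {region: measured round-trip time in ms}.
    """
    assignment = {
        participant: min(regions, key=regions.__getitem__)
        for participant, regions in rtt_ms.items()
    }
    # Every region hosting at least one participant joins the room's relay mesh.
    return assignment, set(assignment.values())


if __name__ == "__main__":
    probes = {
        "clinician": {"us-east": 18.0, "eu-west": 92.0, "ap-south": 210.0},
        "patient": {"us-east": 140.0, "eu-west": 24.0, "ap-south": 160.0},
    }
    assignment, relay_regions = plan_session(probes)
    print(assignment)
    print(sorted(relay_regions))
```

Each participant's first hop stays short, and only the inter-region leg travels the long distance, over the provider's backbone rather than the public internet.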

The system includes built-in redundancy and fault tolerance mechanisms. If a media server fails, affected participants migrate to alternative instances automatically. During data center outages, traffic routes to the next closest location while LiveKit SDKs handle session migration with minimal disruption.
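The failover decision can be sketched in the same terms. This hypothetical function takes participant-to-location assignments and per-participant RTT measurements, and moves everyone on a failed location to their next-closest one. In a real deployment the LiveKit SDKs perform the reconnection itself; this only models which location is chosen.

```python
def fail_over(assignments: dict[str, str], failed: str,
              rtt_ms: dict[str, dict[str, float]]) -> dict[str, str]:
    """Reassign participants on a failed location to their next-closest location.

    assignments maps participant -> current location; rtt_ms maps
    participant -> {location: RTT in ms}. Unaffected participants keep their
    location, so only sessions on the failed server are disturbed.
    """
    updated = dict(assignments)
    for participant, location in assignments.items():
        if location != failed:
            continue
        fallbacks = {loc: rtt for loc, rtt in rtt_ms[participant].items() if loc != failed}
        if not fallbacks:
            raise RuntimeError(f"no fallback location for {participant}")
        updated[participant] = min(fallbacks, key=fallbacks.__getitem__)
    return updated


if __name__ == "__main__":
    current = {"alice": "us-east", "bob": "eu-west"}
    rtts = {
        "alice": {"us-east": 20.0, "us-central": 45.0, "eu-west": 90.0},
        "bob": {"eu-west": 15.0, "us-east": 100.0},
    }
    print(fail_over(current, "us-east", rtts))
```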

Adaptive streaming capabilities automatically adjust stream parameters based on network conditions. The system monitors connection quality in real time and modifies resolution, bitrate, and other parameters to maintain smooth playback as participants' network conditions change.
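One common way an SFU adapts to network conditions is simulcast: the publisher encodes several quality layers and the server forwards whichever layer fits each subscriber's bandwidth. The sketch below shows that selection step only. The layer ladder, bitrates, and 80% headroom factor are illustrative assumptions, not LiveKit's actual encoding settings or algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    width: int
    height: int
    kbps: int


# Illustrative simulcast ladder, ordered best-first; real encodings vary by client.
LADDER = (
    Layer("high", 1280, 720, 1700),
    Layer("medium", 640, 360, 450),
    Layer("low", 320, 180, 150),
)


def choose_layer(estimated_kbps: float, headroom: float = 0.8) -> Layer:
    """Pick the best layer that fits within a fraction of the estimated bandwidth.

    Keeping some headroom absorbs estimation error; if nothing fits, fall back
    to the lowest layer rather than pausing video entirely.
    """
    budget = estimated_kbps * headroom
    for layer in LADDER:
        if layer.kbps <= budget:
            return layer
    return LADDER[-1]


if __name__ == "__main__":
    for bw in (3000, 2000, 1000, 100):
        layer = choose_layer(bw)
        print(f"{bw} kbps estimate -> {layer.name} ({layer.width}x{layer.height})")
```

Because the choice is made per subscriber, a participant on a weak mobile link can receive the low layer while others in the same room keep receiving high quality.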

Azumo partnered with a telehealth platform to integrate LiveKit Cloud, enabling smooth video consultations across multiple regions. The distributed mesh architecture allowed the platform to provide low-latency connections even in more remote locations, and LiveKit's built-in redundancy kept consultations running when regional outages occurred.

Note: All examples described in this article are based on real engineering implementations delivered by Azumo’s development team, adapted for clarity and confidentiality.

AI-Native Features and Agent Framework

LiveKit's recent focus on AI integration culminated in the 1.0 release of its Agents framework, designed specifically for building voice and video AI agents. This framework addresses the specific challenges of real-time AI applications, including streaming audio through STT-LLM-TTS pipelines, handling interruptions, and managing conversational state.
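The shape of a single pipeline turn, including interruption handling, can be sketched with asyncio. The stt, llm, and tts_stream functions are hypothetical placeholders for real providers, and user_is_speaking stands in for a VAD signal; none of these are LiveKit Agents APIs. The sketch records only the words that were actually played in the conversation history, which is one reasonable way to keep conversational state consistent after the user barges in.

```python
import asyncio
from typing import AsyncIterator, Callable


async def stt(audio: bytes) -> str:
    """Placeholder speech-to-text: treats the 'audio' as UTF-8 text."""
    await asyncio.sleep(0)  # yield control, as a real network call would
    return audio.decode("utf-8")


async def llm(history: list[dict[str, str]]) -> str:
    """Placeholder LLM: acknowledges the latest user message."""
    await asyncio.sleep(0)
    return f"Sure, noting that you said {history[-1]['content']!r}."


async def tts_stream(text: str) -> AsyncIterator[str]:
    """Placeholder streaming TTS: yields one 'audio chunk' (a word) at a time."""
    for word in text.split():
        await asyncio.sleep(0)
        yield word


async def run_turn(audio: bytes, history: list[dict[str, str]],
                   user_is_speaking: Callable[[], bool]) -> list[str]:
    """One turn: STT -> LLM -> streamed TTS, stopping early on barge-in."""
    history.append({"role": "user", "content": await stt(audio)})
    reply = await llm(history)
    played: list[str] = []
    async for chunk in tts_stream(reply):
        if user_is_speaking():
            break  # the user started talking: stop playback immediately
        played.append(chunk)
    # Store only what the user actually heard so later turns see accurate context.
    history.append({"role": "assistant", "content": " ".join(played)})
    return played


if __name__ == "__main__":
    history: list[dict[str, str]] = []
    # Simulated VAD readings: the user cuts in before the third chunk plays.
    vad_readings = iter([False, False, True])
    played = asyncio.run(run_turn(b"table for four", history,
                                  lambda: next(vad_readings, True)))
    print(played)
    print(history)
```

In this run playback stops after two chunks, and the stored assistant message is just "Sure, noting" rather than the full reply the LLM generated.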

The Agents framework supports two primary approaches for voice AI applications. A pipeline approach chains separate Speech-to-Text, Large Language Model, and Text-to-Speech providers, offering granular control over each component. A multimodal approach connects directly to speech-to-speech models such as those behind OpenAI's Realtime API, producing more natural-sounding speech similar to OpenAI's Advanced Voice Mode. Earlier releases exposed these as VoicePipelineAgent and MultimodalAgent; in 1.0, both approaches are configured through a single AgentSession.

A notable innovation is LiveKit's custom turn detection model, which addresses a common challenge in voice AI applications. Traditional Voice Activity Detection (VAD) relies solely on silence to decide when a user has stopped talking, whereas LiveKit's model also takes the conversational context of what was said into account. LiveKit reports an 85% true positive rate in avoiding premature interruptions and 97% accuracy in determining when users have finished speaking.
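The difference between silence-only VAD and context-aware turn detection can be illustrated with a toy decision rule. Here end_of_turn_probability is a hypothetical keyword heuristic standing in for a trained model, and the 300/800/2000 ms thresholds are arbitrary. The point is only that the required silence depends on whether the words so far look like a complete utterance.

```python
def end_of_turn_probability(transcript: str) -> float:
    """Hypothetical stand-in for a trained, context-aware end-of-turn model."""
    text = transcript.strip().lower()
    if not text:
        return 0.0
    if text.endswith(("?", ".", "!")):
        return 0.9  # reads like a complete sentence
    last_word = text.split()[-1]
    if last_word in {"and", "but", "so", "because", "um", "uh", "the", "my"}:
        return 0.05  # clearly mid-sentence
    return 0.5  # ambiguous


def user_finished(silence_ms: int, transcript: str) -> bool:
    """Decide whether the agent may start replying.

    Silence-only VAD would compare silence_ms against one fixed threshold;
    here the required silence depends on how complete the words look.
    """
    p = end_of_turn_probability(transcript)
    if p >= 0.8:
        required_ms = 300
    elif p <= 0.1:
        required_ms = 2000
    else:
        required_ms = 800
    return silence_ms >= required_ms


if __name__ == "__main__":
    for silence, words in [(500, "What time do you open?"),
                           (500, "I want to order and"),
                           (900, "I want to order")]:
        print(f"{silence} ms after {words!r}: finished={user_finished(silence, words)}")
```

With a fixed 500 ms silence threshold, "I want to order and" would trigger a premature reply; the context-aware rule waits longer because the sentence is clearly unfinished, yet still responds quickly to a complete question.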

The framework includes comprehensive integrations with major AI providers, supporting flexible combinations of STT, LLM, and TTS services. This modularity allows developers to optimize for specific use cases, whether prioritizing cost, latency, or audio quality.

Production Deployment and Integration

LiveKit provides multiple SDK options covering web, iOS, Android, Flutter, React Native, and server-side environments. These SDKs maintain consistent APIs across platforms, allowing developers to implement real-time features with little platform-specific adaptation.

The platform includes robust security features, including end-to-end encryption, JWT-based authentication, and compliance with SOC 2, GDPR, and HIPAA requirements. Role-based access control and moderation APIs provide granular control over participant permissions and content oversight.
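LiveKit access tokens are JWTs signed with your API secret. The sketch below builds one using only the Python standard library to show what a token carries. The claim layout (iss, sub, nbf, exp, and a video grant with room and roomJoin) reflects our understanding of LiveKit's token format and should be checked against the current documentation; in production, use the access-token helpers in an official LiveKit server SDK rather than hand-rolling JWTs. The credentials in the example are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    """Unpadded base64url encoding, as required for JWT segments."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_join_token(api_key: str, api_secret: str, identity: str, room: str,
                    ttl_seconds: int = 600, now: Optional[int] = None) -> str:
    """Build an HS256-signed JWT granting `identity` permission to join `room`.

    Claim names are our reading of LiveKit's token format (an assumption).
    """
    issued_at = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "iss": api_key,                  # API key that identifies the signing secret
        "sub": identity,                 # participant identity inside the room
        "nbf": issued_at,                # not valid before issuance
        "exp": issued_at + ttl_seconds,  # short-lived: limits damage if leaked
        "video": {"room": room, "roomJoin": True},  # grant scoped to one room
    }
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(api_secret.encode("utf-8"), signing_input.encode("ascii"),
                         hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


if __name__ == "__main__":
    # Hypothetical credentials; real ones come from your LiveKit project settings.
    print(make_join_token("APIexampleKey", "example-secret", "dr-lee", "consult-42"))
```

Tokens like this should be minted on your backend, after your own authorization check, and handed to the client; the API secret never leaves the server.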

Recent platform updates have focused on optimizing performance and expanding telephony capabilities. The latest releases include improvements to WHIP ingress processing, enhanced SIP service permissions, and better handling of IPv6 SDP fragments. These updates reflect LiveKit's commitment to addressing real-world deployment challenges identified by its user base.

Integration capabilities extend beyond basic media streaming. The platform supports webhook notifications, REST APIs for management functions, and CLI tools for automated operations.
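Webhook requests should be authenticated before they are acted on. As we understand LiveKit's scheme, each webhook carries a JWT in its Authorization header, signed with the API secret and including a sha256 claim that holds the base64-encoded SHA-256 hash of the request body. The stdlib-only sketch below checks the signature, issuer, expiry, and body hash; treat these details as assumptions to confirm against the docs, and prefer the webhook receiver in an official server SDK where one is available.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    """Decode unpadded base64url, as used in JWT segments."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def verify_webhook(body: bytes, auth_header: str, api_key: str, api_secret: str,
                   now: Optional[float] = None) -> dict:
    """Return the parsed webhook event if authentic; raise ValueError otherwise."""
    token = auth_header.strip().removeprefix("Bearer ").strip()  # tolerate a Bearer prefix
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected signing algorithm")
    # Recompute the HMAC over "header.payload" and compare in constant time.
    expected = hmac.new(api_secret.encode(), f"{header_b64}.{payload_b64}".encode(),
                        hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    if claims.get("iss") != api_key:
        raise ValueError("token was not issued for this API key")
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    # The body hash binds the signed token to this exact payload.
    body_hash = base64.b64encode(hashlib.sha256(body).digest())
    if not hmac.compare_digest(body_hash, str(claims.get("sha256", "")).encode()):
        raise ValueError("body hash mismatch")
    return json.loads(body)
```

In a web handler, the raw request bytes and the Authorization header value would be passed in before the event is trusted; parsing the body first and re-serializing it would break the hash check.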

At Azumo, we've taken full advantage of these updates to integrate LiveKit’s powerful features into our clients' applications.

For instance, we implemented SIP for voice agents on a customer service platform, supporting both inbound and outbound calls.

With LiveKit's extended integration options, we’ve automated various processes and streamlined media management using REST APIs, webhooks, and CLI tools. This has allowed us to create efficient, scalable real-time applications that fit our clients' specific needs.

Practical Implementation Considerations

Organizations evaluating LiveKit should consider both technical and business factors. The open-source nature eliminates licensing fees but requires infrastructure expertise for self-hosted deployments. LiveKit Cloud provides managed infrastructure with predictable per-minute pricing, though costs can vary significantly based on usage patterns.
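Because managed-service costs scale with usage, a rough estimate is worth running before choosing a deployment model. The sketch below assumes billing on participant-minutes with an included monthly allowance plus a per-minute overage rate. The rates are placeholders, not LiveKit's published prices, and real plans may meter other dimensions such as bandwidth.

```python
def monthly_participant_minutes(sessions_per_day: float, participants_per_session: float,
                                avg_session_minutes: float, days: int = 30) -> float:
    """Total participant-minutes in a month: every participant in a session counts."""
    return sessions_per_day * participants_per_session * avg_session_minutes * days


def estimate_monthly_cost(minutes: float, included_minutes: float,
                          rate_per_minute: float, base_fee: float = 0.0) -> float:
    """Base fee plus overage for minutes beyond the included allowance."""
    overage = max(0.0, minutes - included_minutes)
    return base_fee + overage * rate_per_minute


if __name__ == "__main__":
    # Placeholder plan: $50/month, 50,000 minutes included, $0.001/minute after.
    plan = dict(included_minutes=50_000, rate_per_minute=0.001, base_fee=50.0)
    short_calls = monthly_participant_minutes(200, 2, 6)   # 72,000 minutes
    long_calls = monthly_participant_minutes(200, 2, 15)   # 180,000 minutes
    print(f"short calls: ${estimate_monthly_cost(short_calls, **plan):.2f}")
    print(f"long calls:  ${estimate_monthly_cost(long_calls, **plan):.2f}")
```

Under these placeholder rates, lengthening the average call from 6 to 15 minutes moves the bill from $72 to $180 with the same number of sessions, which is the kind of usage-pattern sensitivity worth modeling before committing.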

The platform's strength in handling concurrent users makes it particularly suitable for applications expecting variable or unpredictable traffic. LiveKit Cloud supports up to 100,000 simultaneous participants per session, with automatic load distribution that functions similarly to content delivery networks.

Development complexity varies considerably based on implementation scope. Basic video conferencing features can be implemented relatively quickly using existing SDKs and sample applications. AI-powered voice agents require additional integration work with external providers and careful consideration of conversation flow design.

Testing and optimization become crucial for production deployments. LiveKit provides comprehensive analytics and telemetry data, enabling organizations to monitor connection quality, identify bottlenecks, and optimize performance based on actual usage patterns.

Business Value and Use Cases

Real-world implementations demonstrate LiveKit's versatility across industries. Companies use the platform for everything from orchestrating voice-related functionality in AI platforms to enabling low-latency real-time translation services. The common thread is the need for reliable, scalable real-time communication that integrates seamlessly into existing applications.

For example, we’ve worked with healthcare organizations to integrate the platform for telehealth consultations, ensuring HIPAA compliance and end-to-end encryption for secure patient interactions.

In education, we’ve helped institutions set up virtual classrooms with features like screen sharing and recording, enhancing the learning experience.

For customer service teams, we’ve deployed AI-powered voice agents that handle routine inquiries and seamlessly escalate more complex issues to human reps when needed.

The economic advantages extend beyond reduced licensing costs. The ability to self-host provides control over data residency and security policies, which can be crucial for regulated industries. The consistent API across deployment models allows organizations to start with managed services and transition to self-hosted infrastructure as requirements evolve.

Looking Forward

LiveKit's recent $45 million Series B funding round demonstrates continued investment in expanding the platform's AI capabilities. The company's roadmap focuses on creating an all-in-one platform for AI agents that can see, hear, and speak naturally.

The broader industry trend toward voice-first interfaces aligns with LiveKit's technical capabilities. As voice AI becomes more prevalent, the infrastructure requirements for handling real-time audio processing at scale become increasingly important. Organizations building voice-enabled applications today are positioning themselves for a future where natural language interaction becomes the primary interface modality.

For development teams evaluating real-time communication solutions, LiveKit offers a compelling combination of open-source flexibility, proven scalability, and comprehensive feature sets. The platform's evolution from basic WebRTC infrastructure to AI-native capabilities reflects both technical innovation and market demand for more sophisticated real-time interaction paradigms.

Success with LiveKit requires thoughtful architecture planning, appropriate infrastructure allocation, and careful consideration of specific use case requirements. However, the platform's track record of supporting applications from small-scale implementations to services handling billions of calls annually suggests it can accommodate a wide range of organizational needs and growth trajectories.

Thinking of building real-time applications with AI capabilities? Azumo’s expert developers have hands-on experience working with LiveKit and similar platforms to deliver scalable, secure applications in industries like healthcare, customer service, and education. Let’s chat about how we can help you design and scale your next real-time solution.
