AI agents are revolutionizing business automation by enabling systems to make smart decisions, automate complex workflows, and adapt through learning. But building effective AI agents is not just about having powerful models; it requires a thoughtful, well-structured AI agent architecture (also called AI agents architecture or agentic AI architecture) that integrates sensing, reasoning, memory, and action into a seamless, scalable system.

At Azumo, we have developed proven best practices to design, build, and deploy AI agents customized to meet specific business needs.

In this guide, we share detailed insights into the core components, engineering challenges, and real-world applications of AI agent architecture to help you understand our practices for creating intelligent, reliable, and scalable AI agents.

What Is AI Agent Architecture?

AI agent architecture refers to the overall structural design that allows software agents to autonomously perceive their environment, interpret inputs, reason about goals, and take appropriate actions. This architecture is the blueprint that connects the key components, such as input processing, understanding layers, memory, planning, and output, to ensure the agent works consistently and scales as demands grow.

This framework is essential because it directly affects agent performance, adaptability, and maintainability. The term agentic AI architecture emphasizes that these agents act independently, pursue defined goals, and make their own decisions rather than following scripted tasks.

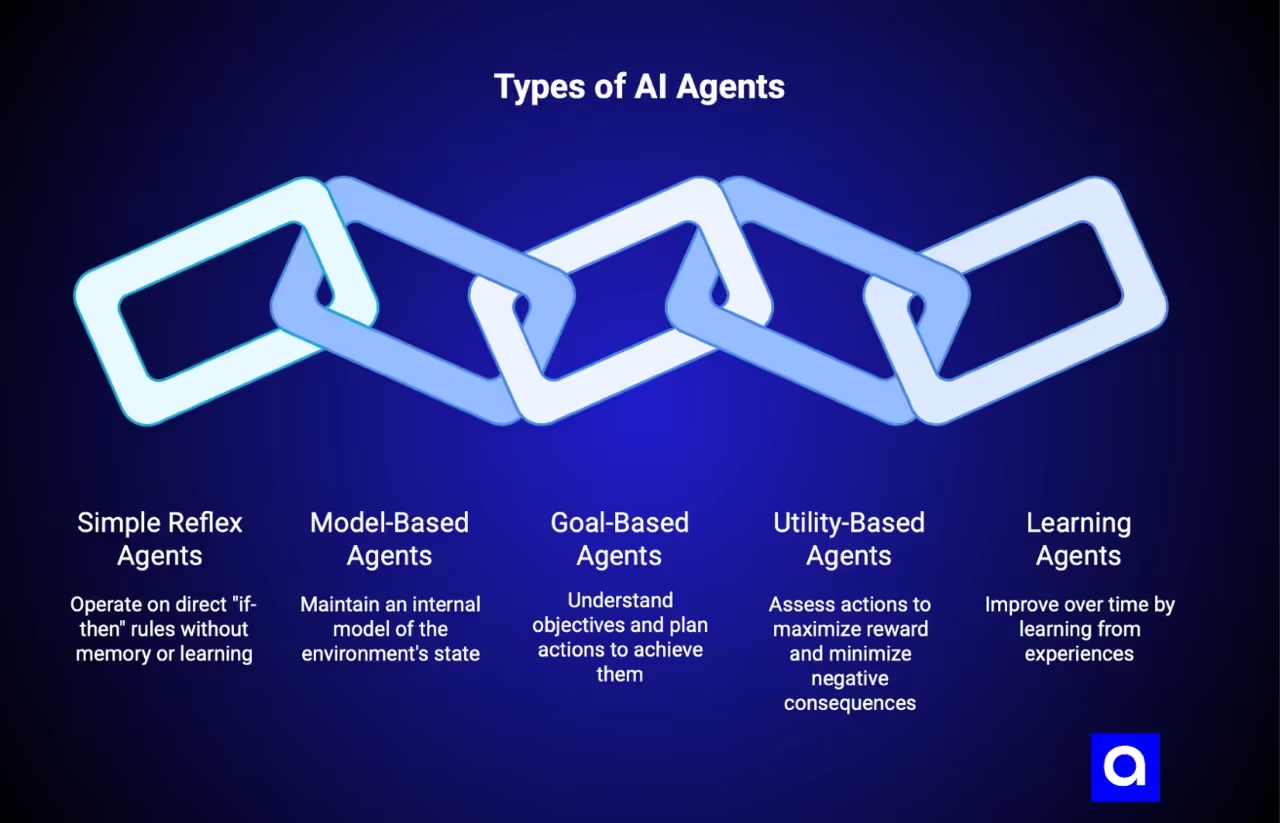

What are the Different Types of AI Agents?

When building AI agents, we consider their level of intelligence and purpose. At Azumo, we develop a variety of agent types, often combining their capabilities for practical use:

- Simple Reflex Agents

These operate on direct "if-then" rules, reacting immediately to events without memory or learning. Think of an Excel spreadsheet cell that recalculates instantly when a value changes.

- Model-Based Agents

These maintain an internal model of the environment's state. For example, an IP security camera detects motion by comparing current and previous infrared frames to decide when to record.

- Goal-Based Agents

These agents understand objectives and plan sequences of actions to reach them, such as a Roomba navigating to clean a room efficiently and constantly evaluating its progress.

- Utility-Based Agents

Utility-based agents assess candidate actions to maximize reward and minimize negative consequences. For example, a robot navigating a maze weighs distances and costs to determine the best route.

- Learning Agents

These agents improve over time by learning from experiences using neural networks or large language models. They adapt their behavior based on feedback from their environment.

In practice, many AI agents do not fit neatly into just one category. Instead, they often blend features from several types to better tackle the complexities of real-world problems.

For example, an AI agent might use reflex-like responses for simple tasks that require immediate action, while also maintaining an internal model of its environment to make more informed decisions over time.

It could have goal-based planning to map out longer-term objectives and apply utility-based reasoning to weigh different options based on costs and benefits. On top of that, it may continually learn from experience to improve its performance.
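As a rough illustration of how these agent types can blend, the sketch below combines a reflex rule table with a utility-based fallback. The event names, the `Option` fields, and the "benefit minus cost" utility are all hypothetical choices made for this example, not a description of any production agent.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Option:
    name: str
    benefit: float
    cost: float


def reflex_rule(event: str) -> Optional[str]:
    """Simple reflex: an immediate if-then response with no memory or planning."""
    rules = {"overheat": "shut_down", "door_open": "pause"}  # hypothetical rules
    return rules.get(event)


def utility(option: Option) -> float:
    """Utility-based scoring: here assumed to be benefit minus cost."""
    return option.benefit - option.cost


def choose_action(event: str, options: List[Option]) -> str:
    """Use the reflex response when a rule matches, otherwise pick the best-scoring option."""
    reflex = reflex_rule(event)
    if reflex is not None:
        return reflex
    return max(options, key=utility).name
```

Urgent events short-circuit to a fixed response, while everything else is decided by weighing the available options.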

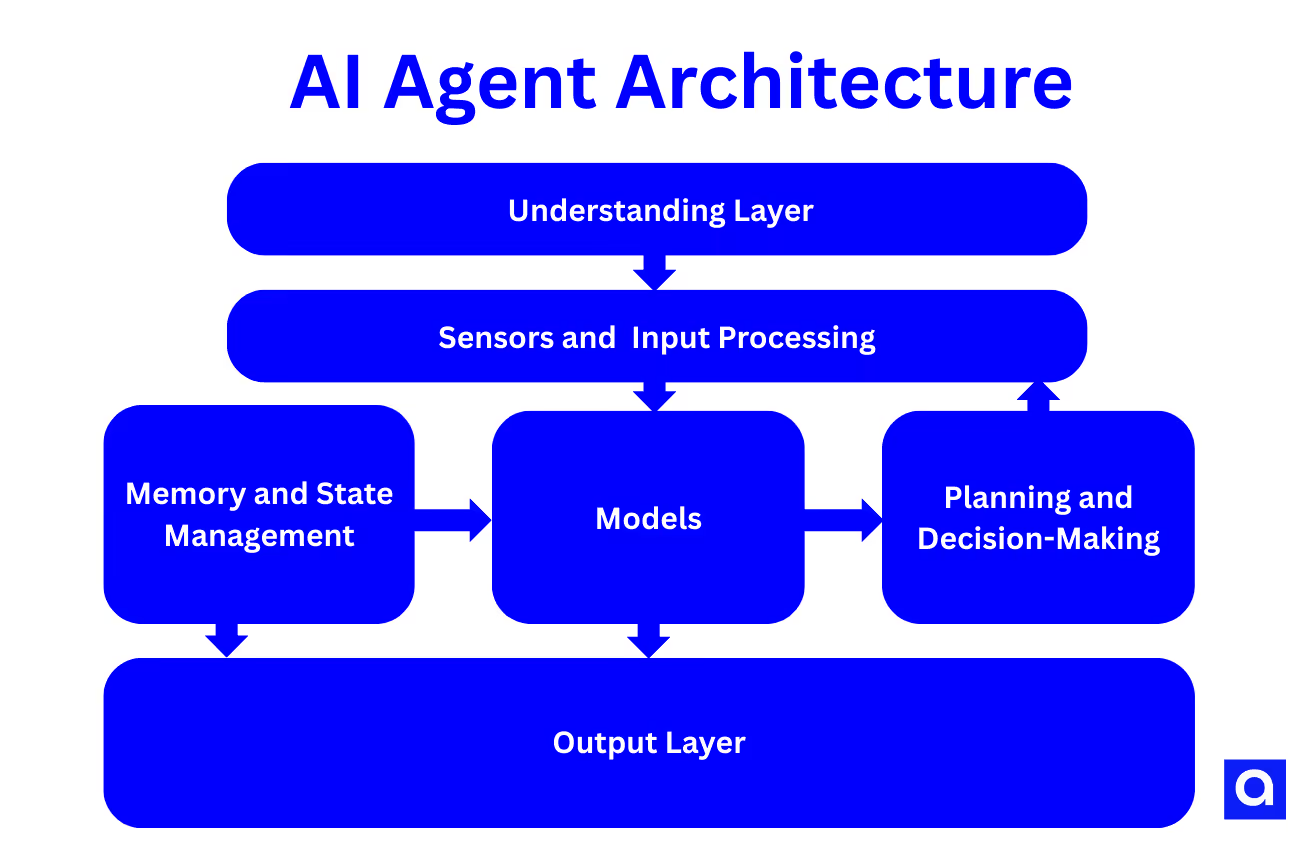

Core Components of AI Agent Architecture

Designing an effective agentic AI architecture requires integrating several key building blocks that work seamlessly together:

- Sensors and Input Processing

AI agents first need to “sense” the world around them, and they do this through different kinds of inputs:

- Sounds and speech are captured and converted into text using speech-to-text tools.

- Images are interpreted by captioning models that describe what’s happening in the scene: for example, “a girl kicking a soccer ball.”

- Text inputs are analyzed with natural language processing pipelines or large language models to understand the meaning, identify key entities, and grasp the overall context.

This layer turns all that raw, messy data into clear and structured information that the agent can reason about.

- Understanding Layer

Once the data is processed, advanced language models and NLP tools step in to interpret it further. They pull out the intent behind words, relevant details, and the context needed for the agent to make thoughtful, nuanced decisions.

- Memory and State Management

Good AI agents remember. They manage two kinds of memory to stay effective:

- Short-term memory, which is like a quick-access workspace (think Redis), keeps track of immediate conversation flow or recent data so interactions feel smooth and coherent.

- Long-term knowledge lives in large vector databases, letting the agent search through a vast pool of information quickly, often helped by neural network indexing for even faster retrieval.

At Azumo, we strike a balance between these memory types to keep agents both responsive in the moment and deeply informed over time.

- Models

Models are basically the brain of the AI agent. They hold knowledge about the environment, goals, and how to evaluate different choices. We build or integrate a variety of models, from simple rule-based ones to complex neural networks, often exposing them as handy tools the main AI engine can call on.

For example, when working with psychometric tests, a specialized model might score answers, while a large language model helps reason through the results to provide deeper insights.

- Planning and Decision-Making

How an agent decides what to do depends on its type. Some might respond immediately with simple reflexes, while others plan out multiple steps ahead.

For those powered by large language models, techniques like chain-of-thought reasoning help break down complex problems into clear, actionable steps. These agents can even call on external tools or APIs as part of their plan.

- Output Layer

After processing information and making decisions, AI agents need to share their responses. Whether through text, voice, images, or APIs, Azumo equips agents to communicate seamlessly across different platforms for smooth and effective interactions.
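The components above can be sketched as a single loop: input processing, understanding, memory, planning, and output. This is a minimal sketch under stated assumptions: keyword matching stands in for a language model, and every name here (`Observation`, `process_input`, `understand`, `Agent`) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Observation:
    modality: str  # "text", "audio", or "image"
    text: str      # text form produced by input processing


def process_input(modality: str, raw: str) -> Observation:
    # Placeholder: a real system would call speech-to-text or image captioning here.
    return Observation(modality=modality, text=raw.strip().lower())


def understand(obs: Observation) -> Dict[str, str]:
    # Placeholder intent detection; stands in for an LLM or NLP pipeline.
    if "hello" in obs.text:
        intent = "greeting"
    elif obs.text.endswith("?"):
        intent = "question"
    else:
        intent = "statement"
    return {"intent": intent, "text": obs.text}


@dataclass
class Agent:
    history: List[Dict[str, str]] = field(default_factory=list)  # short-term memory

    def plan(self, parsed: Dict[str, str]) -> str:
        # Map the understood intent to an action name.
        return {"greeting": "greet", "question": "answer"}.get(parsed["intent"], "acknowledge")

    def act(self, action: str) -> str:
        # Output layer: text only in this sketch.
        responses = {
            "greet": "Hi! How can I help?",
            "answer": "Let me look into that.",
            "acknowledge": "Noted.",
        }
        return responses[action]

    def step(self, modality: str, raw: str) -> str:
        parsed = understand(process_input(modality, raw))
        self.history.append(parsed)
        return self.act(self.plan(parsed))
```

Because each stage is a separate function or method, any one of them can be swapped for a real model or service without touching the others.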

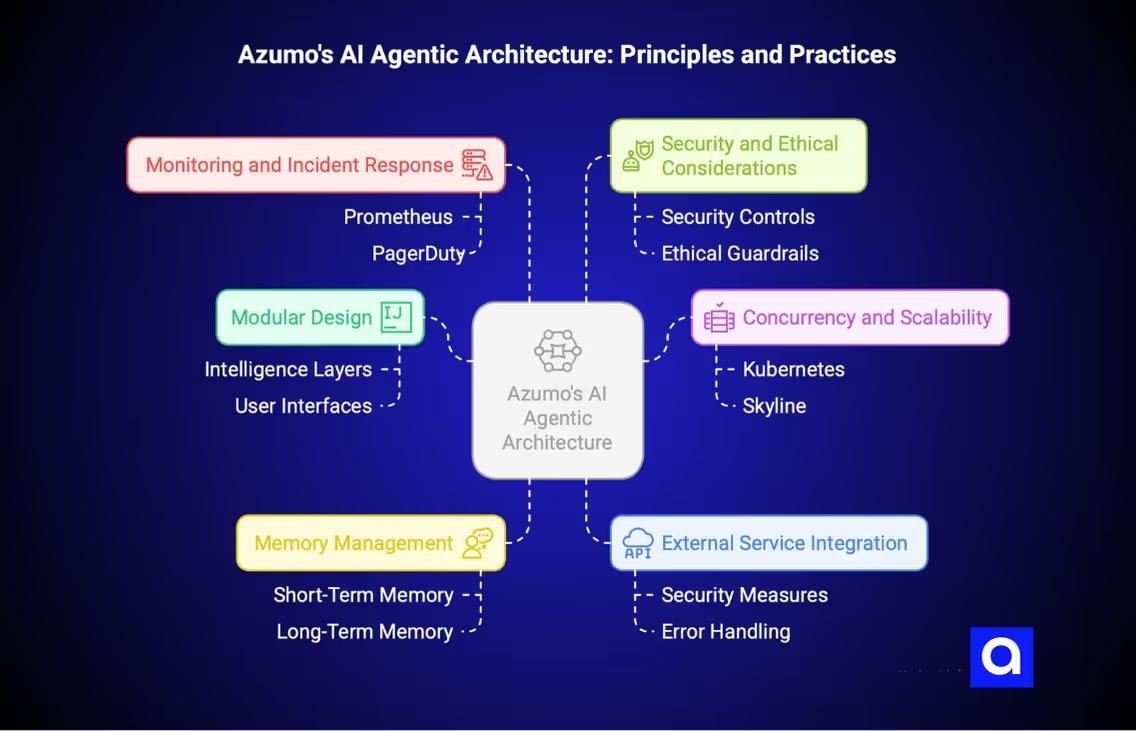

Engineering Realities: Azumo’s Approach to Scalable Agentic AI Architecture

Modular Design: Separating Intelligence from Interfaces

We separate intelligence layers from user interfaces. For example, an accounting chatbot uses a spreadsheet parser module for calculations that the LLM calls as a tool to answer questions. This modularity eases maintenance, testing, and future upgrades.
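A minimal sketch of this pattern, assuming a hypothetical tool registry and a stubbed model: `fake_llm` stands in for a real LLM that emits a structured tool call, and `sum_column` stands in for the spreadsheet parser module. None of these names come from an actual library.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping tool names to plain Python functions.
TOOLS: Dict[str, Callable[..., float]] = {}


def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("sum_column")
def sum_column(rows: List[dict], column: str) -> float:
    """Deterministic calculation the model delegates instead of doing arithmetic itself."""
    return sum(float(row[column]) for row in rows)


def fake_llm(question: str) -> dict:
    # Stand-in for a model response; a real LLM would choose the tool and arguments.
    return {"tool": "sum_column", "args": {"column": "amount"}}


def answer(question: str, rows: List[dict]) -> str:
    """Interface-agnostic entry point: any UI can call this and render the string."""
    call = fake_llm(question)
    result = TOOLS[call["tool"]](rows, **call["args"])
    return f"Total {call['args']['column']}: {result:.2f}"
```

The calculation logic can be unit-tested on its own, and the chat interface never needs to know how the number was produced.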

One of our most illustrative examples of agentic AI architecture in action is Valkyrie, an internal platform we built to streamline AI tool access across enterprises. Designed with a fully modular backend, Valkyrie integrates LLMs, diffusion models, and AI-powered assistants via a unified CLI and API.

It enables instant scalability across AWS, RunPod, and Hetzner using SkyPilot, while maintaining security, observability, and zero setup overhead. This architecture reflects our core design philosophy: separating intelligence from delivery layers while providing developers with flexible, high-performance AI infrastructure.

Our architecture closely mirrors what recent research defines as compound AI, where agents are tracked in an agent registry, data sources are mapped in a data registry, and a centralized planner or orchestrator translates high-level workflows into executable steps.

This emerging framework supports scalable, modular agent design at the enterprise level and validates the approach we've taken with internal platforms like Valkyrie.
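To make the registry-and-planner idea concrete, here is a small sketch. It assumes the simplest possible shapes: both registries are dictionaries, and the "planner" only validates and orders steps. The registry contents and function names are hypothetical and are not taken from Valkyrie or from any specific research system.

```python
from typing import Callable, Dict, List


def filter_negative(values: List[float]) -> List[float]:
    return [v for v in values if v >= 0]


def normalize(values: List[float]) -> List[float]:
    peak = max(values) if values else 0
    return [v / peak for v in values] if peak else values


# Agent registry: capability name -> callable agent (hypothetical entries).
AGENT_REGISTRY: Dict[str, Callable[[List[float]], List[float]]] = {
    "filter_negative": filter_negative,
    "normalize": normalize,
}

# Data registry: logical source name -> loader (hypothetical entry).
DATA_REGISTRY: Dict[str, Callable[[], List[float]]] = {
    "sensor_readings": lambda: [4.0, -1.0, 2.0, 8.0],
}


def plan(workflow: dict) -> List[str]:
    """Translate a high-level workflow into executable steps, rejecting unknown agents."""
    unknown = [step for step in workflow["steps"] if step not in AGENT_REGISTRY]
    if unknown:
        raise KeyError(f"No registered agent for: {unknown}")
    return list(workflow["steps"])


def run(workflow: dict) -> List[float]:
    """Load data from the registry, then pass it through each planned agent in order."""
    data = DATA_REGISTRY[workflow["source"]]()
    for step in plan(workflow):
        data = AGENT_REGISTRY[step](data)
    return data
```

A real orchestrator would also handle branching, retries, and access control, which this sketch leaves out.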

Concurrency and Scalability

By designing our AI agents modularly, we also ensure that scaling to support many users happens smoothly and efficiently. We leverage Kubernetes for zero-downtime rolling deployments and use tools like SkyPilot to dynamically provision GPU-backed inference servers during peak demand, scaling down when idle to reduce costs. Keeping a minimum number of GPU instances “warm” avoids long cold-start delays, which is crucial for live chat responsiveness.
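The scale-up, scale-down, and keep-warm policy described above can be reduced to one small decision function. This is only an illustration of the policy: it does not call Kubernetes or any provisioning tool, and the parameter names and default limits are assumptions.

```python
import math


def desired_replicas(
    queued_requests: int,
    per_replica_capacity: int,
    min_warm: int = 1,      # assumed floor that avoids cold starts
    max_replicas: int = 8,  # assumed ceiling that caps GPU spend
) -> int:
    """Return how many inference replicas should be running for the current load."""
    if per_replica_capacity <= 0:
        raise ValueError("per_replica_capacity must be positive")
    needed = math.ceil(queued_requests / per_replica_capacity)
    # Clamp between the warm floor and the cost ceiling.
    return max(min_warm, min(max_replicas, needed))
```

With these defaults, an idle system keeps one warm replica, and a burst of traffic is capped at eight.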

Managing Memory and Context

Short-term memory stored in fast caches ensures conversational coherence. Long-term knowledge lives in vector databases with neural indexing to facilitate rapid and relevant retrieval during response generation.
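A minimal in-process sketch of the two memory tiers follows. It assumes a bounded deque in place of a cache such as Redis and a brute-force cosine-similarity scan in place of an indexed vector database; the class names and the two-dimensional toy embeddings are hypothetical.

```python
import math
from collections import deque
from typing import List, Tuple


class ShortTermMemory:
    """Keeps only the most recent turns, like a small conversation cache."""

    def __init__(self, max_turns: int = 3):
        self.turns = deque(maxlen=max_turns)

    def add(self, turn: str) -> None:
        self.turns.append(turn)

    def context(self) -> List[str]:
        return list(self.turns)


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class LongTermMemory:
    """Stores (text, embedding) pairs and retrieves the closest matches by cosine similarity."""

    def __init__(self):
        self.items: List[Tuple[str, List[float]]] = []

    def add(self, text: str, embedding: List[float]) -> None:
        self.items.append((text, embedding))

    def search(self, query: List[float], k: int = 1) -> List[str]:
        # Brute-force scan; a real vector database would use an index instead.
        ranked = sorted(self.items, key=lambda item: cosine(query, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

At response time, an agent would typically combine `ShortTermMemory.context()` with the top results from `LongTermMemory.search()` when building its prompt.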

Connecting to External Services and Tool Safety

AI agents also need to connect safely to external APIs and services. We achieve this by defining these integrations as standard software tools, applying strict security measures, and adding robust error handling that isolates failures and keeps the system stable.

A strong example of this approach is our collaboration with a midstream Oil & Gas company, where we developed an AI-driven Alarms Management Platform that automatically filtered false positives, sent SMS notifications, and integrated with field operations through inbound and outbound alerts.

The platform uses anomaly detection models like Isolation Forest and Autoencoders, powered by real-world sensor data, and supports user feedback to continuously retrain and improve accuracy.
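The failure-isolation idea from this section can be sketched as a wrapper around every external tool call. This is a simplified illustration: the `ToolResult` type, the retry count, and the wrapper name are assumptions, and a production version would add timeouts, logging, and narrower exception handling.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ToolResult:
    ok: bool
    value: Any = None
    error: Optional[str] = None


def call_tool_safely(fn: Callable[..., Any], *args: Any, retries: int = 1, **kwargs: Any) -> ToolResult:
    """Run an external tool so that its failure becomes data instead of crashing the agent."""
    last_error = ""
    for _ in range(retries + 1):
        try:
            return ToolResult(ok=True, value=fn(*args, **kwargs))
        except Exception as exc:  # broad on purpose: isolate any tool failure
            last_error = f"{type(exc).__name__}: {exc}"
    return ToolResult(ok=False, error=last_error)
```

The agent can then branch on `result.ok`, for example by falling back to another notification channel when an SMS gateway is unreachable.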

Monitoring and Incident Response

To keep everything running smoothly, we maintain comprehensive observability through tools like Prometheus, paired with alerting systems such as PagerDuty. This setup provides real-time health checks and notifications, backed by on-call rotations so that issues are resolved quickly.

Security and Ethical Considerations

Security and ethical responsibility are foundational to our architecture. Security controls are enforced at both software and infrastructure levels. Ethical guardrails are introduced primarily during model training and fine-tuning phases. We favor open-source models like Falcon that offer transparency and traceability, allowing us to apply ethical constraints more effectively than with proprietary black-box models.

This approach helps us build AI agents that not only perform well but also align with responsible and transparent AI principles.

The Heart of Success: Data Sets

A fundamental truth from Azumo’s experience: 90% of AI agent success depends on data quality. No architecture or modeling sophistication can compensate for poor or insufficient data.

To ensure high-quality data, we rely on collaborative labeling tools like Label Studio. This allows domain experts and stakeholders to build, curate, and continuously improve gold-standard datasets together. It is an ongoing, iterative process involving scoring model outputs, adding new examples, and retraining. This careful preparation lays the foundation for launching AI agents that are truly reliable and effective.

A clear example of this is our work with Stovell, where we built backend AI agents that help global asset managers and energy companies make smarter pricing and investment decisions.

By training the agents on highly specialized datasets, ranging from historical market behavior to competitor pricing signals, we enabled a predictive platform that supports multi-billion-dollar decisions with greater accuracy and speed.

This illustrates how high-quality data paired with the right AI agent architecture can directly translate into strategic business value.

Seamless Model Updates and Deployment

At Azumo, we ensure model updates happen smoothly without interrupting live services by using Kubernetes rolling deployments. This approach lets new model versions run alongside the existing ones before traffic is fully shifted to the updated model. Older instances continue handling in-flight requests until they complete, which makes it easy and safe to roll back if needed.

Measuring Success: Key Metrics

To ensure our AI agents perform at their best, we closely monitor several key factors:

- Latency and cost: These are monitored continuously through logs and dashboards to keep performance efficient and affordable.

- Accuracy: We evaluate accuracy by comparing results against reserved test datasets, which underscores the need for well-curated data.

- User satisfaction: Feedback is collected through surveys and analysis of user interactions, keeping in mind that satisfaction is subjective and survey response rates vary.
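Two of these metrics are easy to compute directly from logs and a held-out test set. The sketch below assumes latencies are logged in milliseconds and uses the nearest-rank percentile method; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def accuracy(predictions: List[str], labels: List[str]) -> float:
    """Share of predictions that match the reserved test labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)
```

Tracking a high percentile rather than the average latency helps surface the slow responses that users actually notice.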

Lessons Learned and Best Practices

From experience, the biggest lesson is to start your dataset early and keep it well-curated. Nailing the data from the start prevents headaches later. Rather than endlessly tweaking one model, testing several on the same dataset helps find the best fit faster.

When planning your infrastructure, consider how to scale efficiently without breaking the bank, especially when it comes to expensive GPU resources.

Also, keeping your AI’s core intelligence separate from the user interfaces makes it much easier to update and improve parts of the system without everything falling apart.
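The advice to test several models on the same dataset, rather than endlessly tweaking one, can be sketched as a small comparison harness. The toy classifiers and the example dataset below are hypothetical placeholders for real candidate models and a curated gold-standard set.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]  # (input text, expected label)


def evaluate(model: Callable[[str], str], dataset: List[Example]) -> float:
    """Accuracy of one candidate model on the shared dataset."""
    correct = sum(model(text) == label for text, label in dataset)
    return correct / len(dataset)


def pick_best(models: Dict[str, Callable[[str], str]], dataset: List[Example]) -> Tuple[str, float]:
    """Score every candidate on the same data and return the top performer."""
    scores = {name: evaluate(model, dataset) for name, model in models.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]
```

Because every candidate sees identical examples, differences in score reflect the models rather than the data.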

Where AI Agent Architecture Makes an Impact

We’ve designed and deployed AI agents across a wide range of industries, each with its own challenges, systems, and goals. Below are a few examples of how our agentic AI architecture comes to life in real-world scenarios:

- Finance & Asset Management

In fast-moving financial markets, timing and accuracy are everything. We built AI agents that help analysts make smarter portfolio decisions by monitoring market conditions, picking up on competitor moves, and generating predictive insights that go beyond human intuition.

- Healthcare & Clinical Operations

For a large hospital system, we developed AI agents to assist with triage. These agents analyze incoming patient data in real time, flag high-risk cases, and help route them to the right medical teams, freeing up clinical staff to focus on care, not coordination.

- SaaS & Customer Experience

Supporting a growing SaaS platform, we trained agents to act as always-on product experts. They handle user questions inside the app, troubleshoot technical issues, and surface helpful resources, all without needing human intervention unless truly necessary.

- Energy & Field Operations

In the energy sector, uptime is critical. We deployed AI agents that scan live sensor data from pipelines and machinery, detect anomalies, and send real-time alerts to field teams, reducing noise, avoiding false alarms, and keeping the operation running smoothly.

- Logistics & Supply Chain

In logistics, delays cost money. We created AI agents that track shipments, predict disruptions, and suggest alternate routing options based on weather, traffic, and warehouse availability, keeping deliveries on time and teams in sync.

Across every project, we adapt the architecture to fit the industry, the workflow, and the people using it. That’s the power of well-designed AI agents: they’re not just smart, they’re purpose-built.

The Future of AI Agent Architecture

At Azumo, we see several exciting trends shaping the future of AI agents:

- Smaller, ethically transparent large language models will make advanced AI more accessible to a wider range of users and organizations.

- AI agents will increasingly be built from multiple specialized neural models, each expertly handling specific subtasks for greater efficiency.

- Frameworks will continue to mature, yet there will still be a strong demand for custom, lightweight solutions tailored to unique business needs.

- Serverless and cloud-native technologies will make scaling AI agents more cost-effective and agile, allowing systems to respond quickly to changing demands.

Final Thoughts

Building a robust AI agent architecture demands modular design, strong data foundations, scalable infrastructure, and embedded ethical guardrails.

Azumo’s extensive experience delivering custom AI agents proves that focusing on data quality and engineering flexibility produces smarter, faster, and more reliable agents that are perfectly customized to your business needs.

If you’re ready to transform your workflows with AI agents, contact us! Azumo’s expert team is ready to help you design, develop, and deploy scalable intelligent solutions.
