Retrieval-Augmented Generation (RAG) has quickly evolved from an AI experiment into a foundational architecture for enterprise-scale intelligence. Traditional large language models (LLMs) struggle with up-to-date data, compliance, and explainability. These are critical factors for organizations that depend on accuracy and control.

A well-designed enterprise RAG system bridges this gap. It augments generative models with verified, real-time knowledge from internal sources, creating systems that can reason, validate, and respond with context-aware precision.

In this comprehensive guide, we’ll walk through how to build a RAG system for enterprise environments, which covers architecture, components, guardrails, and the lessons learned from real-world RAG deployments.

Why Enterprises Need RAG Systems

Before building a system, it’s important to understand the why. LLMs alone are powerful but limited. They can’t access live enterprise data or verify factual accuracy. For most organizations, that’s a dealbreaker.

RAG solves these by connecting LLMs to knowledge warehouses through retrieval mechanisms. It retrieves relevant documents, injects them into the model’s context, and generates responses grounded in verified information.

Enterprise RAG Benefits

- Factual accuracy: Each output is backed by traceable sources.

- Compliance: Restricts data retrieval to authorized knowledge bases.

- Adaptability: New data updates automatically without retraining models.

- Scalability: Handles large, domain-specific datasets efficiently.

- Explainability: Every answer links to evidence, improving transparency.

RAG shifts enterprise AI from “best-guess” to “source-backed” intelligence by combining retrieval and reasoning.

What Are The Common RAG Failure Points?

Even the most carefully engineered RAG systems can trip over hidden obstacles. You can have the most innovative retriever and the fastest embeddings, yet still find yourself wondering why the model missed that obvious answer. Galileo Labs’ research highlights seven recurring failure points that quietly ruin reliability in even mature systems.

If you’re building or scaling a RAG for production, these are the blind spots you need to anticipate early. They’re not just technical glitches, but patterns that emerge when retrieval pipelines, ranking logic, or generation prompts don’t quite align. And when they do show up, they can cause your outputs to sound off, skip details, or miss the mark entirely. Isn’t this something no enterprise team wants to explain to stakeholders?

So, where do these cracks appear in the system? Let’s walk through the seven most common RAG failure points you’ll want to guard against:

- Missing content (FP1): No relevant data available for the query.

- Missed top-ranked documents (FP2): The right answer exists but isn’t ranked high enough.

- Not in context (FP3): Retrieved docs never make it into the final context window.

- Not extracted (FP4): LLM fails to identify or extract the relevant information.

- Wrong format (FP5): Output ignores format instructions (e.g., lists, tables).

- Incorrect specificity (FP6): Answers are too general or overly detailed.

- Incomplete (FP7): Responses lack full detail, even when data is available.

A strong enterprise RAG architecture reduces these with intelligent retrieval tuning, query rewriting, and validation layers. We’ll dive into these topics below. Now let’s talk about them individually.

FP1. Missing Content

This happens if a request is submitted to the system for which there is no relevant information in the stored documents. Instead of safely responding with “I don’t know,” some RAG implementations attempt to generate a speculative answer. This undermines trust and can create hallucinated outputs.

Best Practice: Implement backup detection and confidence scoring so the system can gracefully decline when knowledge coverage is missing.

FP2. Missed Top-Ranked Documents

Sometimes, the correct answer is in the database, but it doesn’t make into the top K retrieved results. This usually occurs due to embedding mismatches or ranking thresholds.

Best Practice: Use dynamic top-K tuning, hybrid (vector + keyword) retrieval, and reranking models to surface the most relevant content even when the signal is weak.

FP3. Not in Context

Here, relevant documents are retrieved but fail to make it into the final context window sent to the LLM. When too many results are returned, consolidation algorithms may omit the critical information.

Best Practice: Before sending content to the generator, give context minimization and summary top priority. Important information can be preserved by "small-to-big" retrieval techniques or recursive retrieval.

FP4. Not Extracted

In some cases, the answer is present in the context, but the model doesn’t extract it correctly. This can stem from noisy or conflicting data, or from prompts that don’t guide the LLM toward the desired section of text.

Best Practice: Use structured prompting or chain-of-thought patterns to direct extraction. Pair this with validation agents that verify extracted facts against retrieved documents.

FP5. Wrong Format

If a query requests a particular output format (such as a table, list, or structured JSON), the model may ignore such instructions. For enterprise applications, this breaks downstream automation.

Best Practice: Define output schemas using function calling or JSON-based templates. Reinforce formatting rules with post-processing validators that enforce schema compliance.

FP6. Incorrect Specificity

Answers can be too broad or overly detailed and, therefore, not serve the user’s intent. This often arises when queries are confusing or when retrieval brings in documents at the wrong granularity.

Best Practice: Add a query rewriter that clarifies vague questions and applies context-aware refinement. Adjust chunking size to better align retrieved content with user specificity.

FP7. Incomplete Answers

Sometimes, the model gives a correct but partial response, even though full information was available. This happens when context windows shorten related information or when summary drops important details.

Best Practice: You can apply multi-step answering, breaking the question into subqueries and merging their results. Evaluate completeness using automated scoring metrics or user feedback loops.

What Are The Core Components of a RAG System?

Every enterprise RAG setup involves a clear goal: retrieve, reason, validate, and respond. To achieve this reliably, it must be built around several tightly integrated components.

Key Architectural Layers

Before exploring technical components, let’s align on the objective, which is to ensure data relevance, model accuracy, and low-latency reasoning.

- Retriever: Searches across vector stores or hybrid indexes to find semantically similar chunks of text.

- Generator: The LLM (e.g., GPT-4, Claude, Llama 3) that composes contextually grounded answers.

- Knowledge Store: Databases, APIs, or content repositories holding enterprise data.

- Query Router: Routes complex queries to the right retrievers or indexes.

- Validation Layer: Re-ranks, filters, and checks factual consistency before final output.

These components work together as a pipeline and turn raw documents into intelligent, grounded responses.

What Is an Enterprise RAG Architecture?

The complexity of enterprise RAG lies not in the individual parts but in how they connect. So what does the typical enterprise RAG architecture look like?

Most companies over-engineer their RAG stack or fail to integrate security and observability early on. A layered approach ensures scalability without chaos. Let’s unpack the major layers.

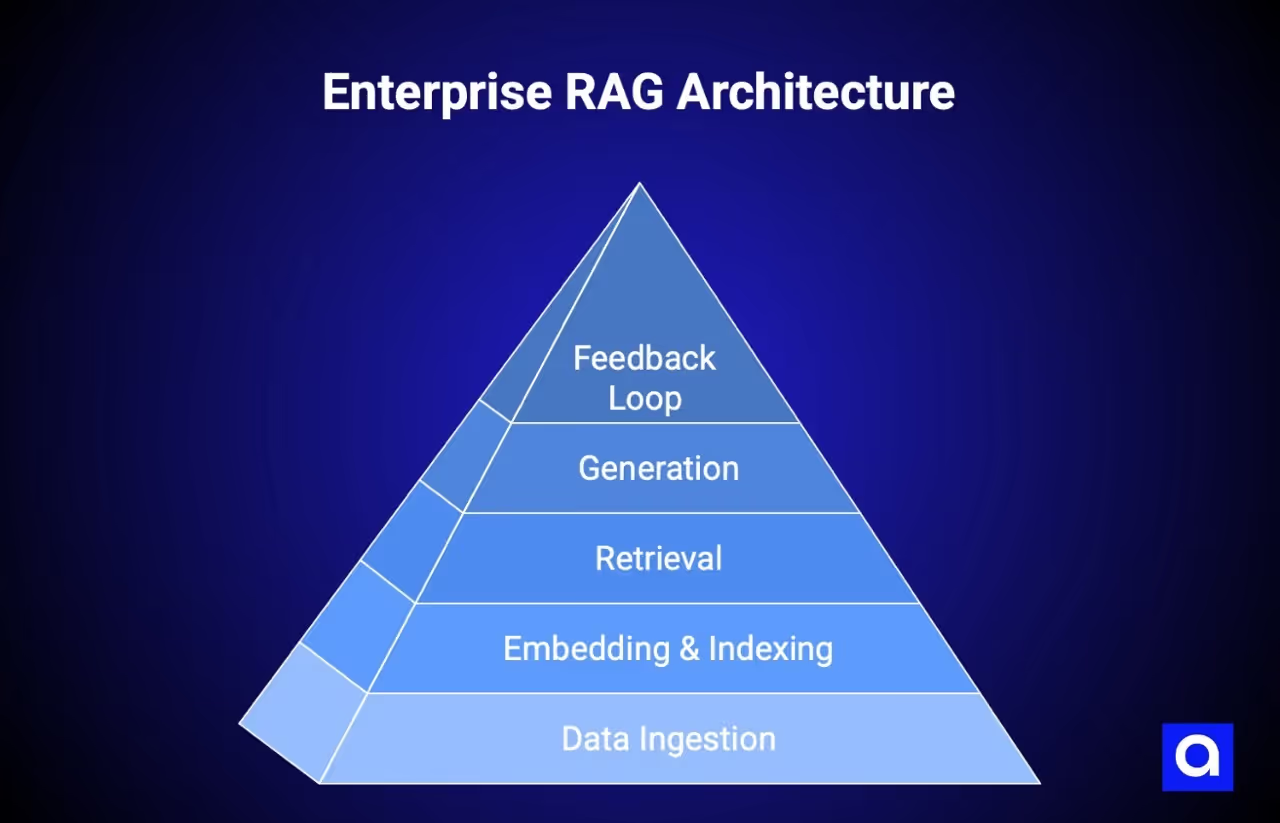

Core Layers

- Data Ingestion & Cleaning: Gathers structured and unstructured enterprise data.

- Embedding & Indexing: Transforms text into embeddings (numerical vectors) and stores them in a vector database.

- Retrieval: Uses hybrid search (vector + keyword) for precision.

- Generation: Combines retrieved snippets with LLM reasoning.

- Feedback Loop: Collects usage and accuracy data for continuous improvement.

Technologies like LangChain, LlamaIndex, and DSPy control these pipelines, whereas vector stores like Pinecone, Milvus, and Weaviate manage embeddings efficiently.

Why Are Input Guardrails and Query Handling So Important?

What’s important is that in enterprise settings, guardrails are non-negotiable. They protect systems from malicious inputs, data leaks, or model misuse.

This means without input controls, RAG systems can be jailbroken or manipulated to expose sensitive information.

Common Guardrail Mechanisms

- Anonymization: Redact personally identifiable information (PII).

- Topic Restriction: Block queries on restricted or non-business topics.

- Injection Detection: Prevent malicious SQL or prompt injections.

- Language Filtering: Ensure only supported languages are processed.

- Token Limits: Cap input length to prevent denial-of-service attacks.

- Toxicity Detection: Block abusive or non-compliant inputs.

Tools like Llama Guard (Meta) or AWS Comprehend can detect harmful prompts and sanitize input before retrieval.

How Can Query Rewriting Improve Context and Precision?

It’s no secret that users often submit vague or multi-step queries. Query rewriting improves search quality by turning inputs into more precise or deconstructed subqueries.

Poorly phrased queries lead to irrelevant document search and inaccurate responses.

Techniques

- Context-based Rewriting: Use prior conversation history to maintain context continuity.

- Subquery Decomposition: Break complex requests into smaller, answerable parts.

- Synonym & Similar Query Expansion: Generate related terms to improve recall.

Such frameworks like LlamaIndex’s SubQuestion Query Engine and LangChain’s ReAct are automating this process and enhancing both recall and relevance.

How Should You Select and Evaluate Text Encoders?

Encoders convert text into embeddings, the backbone of retrieval. Choosing the right one determines system accuracy, latency, and cost.

Enterprises often default to popular APIs without testing how well embeddings perform on their specific domain data.

How to Choose an Encoder

- Benchmarking: Start with MTEB (Massive Text Embedding Benchmark) for general comparison.

- Custom Evaluation: Measure retrieval precision using domain datasets.

- Latency and Cost: Balance model size against speed (smaller = faster, cheaper).

- Multilingual Support: For global enterprises, use multilingual embeddings or translation pipelines.

In sensitive industries like finance or healthcare, you can consider self-hosted encoders, to make sure you get compliance and data privacy.

How Should You Design a Document Ingestion Pipeline?

The ingestion pipeline transforms raw enterprise data into structured, searchable content. This is because unclean data leads to poor retrieval accuracy and high latency during searches.

Core Stages

- Parsing: Handle various formats (PDF, Word, HTML) with OCR and NLP extraction.

- Table Recognition: Preserve tabular data using models like Table Transformer.

- Metadata Extraction: Store attributes (author, date, tags) for filtering.

- Chunking: Split documents intelligently. If too small and context is lost, too large and noise increases.

Chunking strategies can be domain-aware (e.g., “function-based” for code or “paragraph-level” for text). Summarization and redundancy removal improve embedding efficiency.

How Do You Optimize Vector Databases for Retrieval?

Vector databases feed the retrieval layer, but let’s not forget, that each has trade-offs in terms of cost, latency, and recall. Inaccurate vector store configurations result in slow searches, missing documents, or costly expenses.

Optimization Strategies

- Index Type: Use HNSW for speed or Flat for accuracy.

- Hybrid Search: Combine vector (semantic) and keyword (lexical) search for better recall.

- Filtering: Use metadata filtering (by author, date, department) for enterprise precision.

- Rerankers: Add cross-encoders (e.g., BGE-Large, RankGPT) to reorder results.

- MMR (Maximal Marginal Relevance): Diversify results to reduce redundancy.

Advanced retrieval setups may also use Recursive Retrieval (small-to-large context) or Sentence Window Retrieval for contextual relevance.

How Should You Guard the Generator’s Outputs?

The way context is incorporated into natural language responses is decided by the generator. RAG systems run the risk of sensitive data leaks or hallucinations if output validation is not used.

Best Practices

- Hallucination Detection: Use log-probability scoring or validation models.

- Format Control: Enforce response schema (JSON, table, paragraph).

- Content Filtering: Detect and block harmful or non-compliant outputs.

- Brand and Ethics Filters: Ensure generated text aligns with company guidelines.

Output guardrails complement input filters, completing the security loop for enterprise-grade reliability.

How Can You Control Cost and Latency with Caching?

RAG systems can become expensive at scale. Smart caching strategies minimize repeated computation. Cost and latency are quickly increased by making frequent API calls to vector storage or LLMs.

Techniques

- Prompt Caching: Save embeddings and responses for repeated queries.

- Response Reuse: Serve cached results for identical or similar inputs.

- Monitoring Metrics: Track hit ratio, recall, and latency using tools like GPTCache.

Caching also speeds up development cycles, enabling faster iteration without unnecessary inference costs.

How Do You Monitor and Maintain RAG Quality Over Time?

RAG systems must be continuously monitored to create reliability and quality in the production. Even high-performing RAGs degrade over time due to data drift or unmonitored hallucinations.

Key Metrics:

- Groundedness: Is the output supported by retrieved documents?

- Latency: Response and retrieval times.

- Factuality & Toxicity: Accuracy and ethical compliance of generated text.

- User Feedback: Thumbs-up/down or ratings to fine-tune retrieval logic.

Platforms like Galileo or Arize AI offer observability dashboards for LLM-powered systems, enabling proactive corrections before user complaints arise.

What Are The Best Practices for Enterprise RAG Implementation?

Integrating all these moving parts into one cohesive system requires alignment across data, AI, and engineering teams. RAG projects often fail not due to technology, but due to lack of governance, iteration, and data hygiene.

Here’s what to pay attention to:

- Use domain-tuned embeddings for higher retrieval accuracy.

- Fine-tune chunking for your document structure.

- Add guardrails and observability early, not post-launch.

- Keep human-in-the-loop feedback active.

- Document everything: from retrieval configs to ranking logic.

When built with discipline, an enterprise RAG becomes a continuously improving intelligence engine.

Leading Enterprise RAG Development Partners

Not every organization needs to start from scratch. Many enterprises partner with specialized developers who already understand multi-agent pipelines, routing, and enterprise-grade RAG deployment.

Azumo

Azumo builds custom enterprise RAG systems using nearshore collaboration and adaptive architecture. Their solutions are designed to handle complex data flows while ensuring scalability and maintainability.

Key Capabilities:

- Multi-agent RAG pipelines with retrieval, reasoning, and validation

- Integration with CRMs, databases, and APIs

- Proven scalability for high-volume data systems

- Differentiator: Combines deep AI engineering with nearshore efficiency for fast delivery.

- Perfect For: Enterprises seeking secure, end-to-end RAG and Agentic RAG builds.

Vectara

Vectara provides an enterprise-grade RAG platform built from the ground up to prevent hallucinations and support high-performance scaling. Their end-to-end RAG service can be deployed on-premises, in VPC, or as SaaS.

Key Capabilities:

- Citation-backed responses with advanced explainability

- Built-in hallucination prevention and accuracy scoring

- Multi-LLM integration with enterprise security controls

- Differentiator: Prioritizes truth and relevance with verifiable, citation-backed AI responses.

- Perfect For: Enterprises requiring trusted, governed conversational AI with extraordinary accuracy.

Glean

Glean’s AI platform connects directly to enterprise data sources and provides RAG-powered search. Their approach focuses on natural language. This is to make enterprise knowledge easily available.

Key Capabilities:

- Enterprise-wide knowledge graph integration

- Real-time retrieval across CRMs, databases, and internal systems

- Personalized AI experience with secure, role-based access

- Differentiator: It combines private search with RAG for comprehensive enterprise knowledge automation.

- Perfect For: Organizations that enhance knowledge management and employee productivity at scale.

SoluLab

SoluLab specializes in Web3, blockchain, and generative AI development services. They offer custom RAG implementations tailored to enterprise needs. They concentrate on connecting cutting-edge technologies with useful business uses.

Key Capabilities:

- Custom RAG application development and integration

- Cross-industry AI solutions for healthcare, finance, and retail

- Blockchain-integrated AI systems for enhanced security

- Differentiator: Combines Web3 expertise with enterprise AI for next-generation solutions.

- Perfect For: Enterprises exploring innovative RAG applications with blockchain and decentralized technologies.

Contextual AI

Contextual AI launched its enterprise RAG 2.0 platform with Grounded Language Models (GLM) to ensure factual outputs. Their instruction-following reranker provides natural-language control over document relevance and formatting.

Key Capabilities:

- They got advanced reranking with natural language preference controls

- Grounded Language Models for factual accuracy

- Flexible deployment options with enterprise security

- Differentiator: Next-generation RAG architecture with instruction-aware relevance tuning.

- Perfect For: Teams requiring precise control over retrieval relevance and output formats.

These partners reduce complexity, helping enterprises move from prototype to production RAG systems faster.

Future of Enterprise RAG Architecture

What you should always remember is that RAG isn’t static, it’s evolving toward agentic and autonomous architectures. Future systems will feature multi-agent reasoning, self-validation, and real-time orchestration between retrievers, planners, and verifiers.

The next phase of Enterprise Agentic RAG will:

- Combine RAG with workflow automation and decision agents.

- Integrate structured and unstructured reasoning.

- Deliver continuously learning, self-correcting AI systems.

Businesses that effectively handle this shift can use AI for autonomous insight and decision-making.

Wrapping Up On Enterprise RAG

Building an enterprise RAG system is both an engineering and a strategic challenge. It requires thoughtful design, secure pipelines, and continuous improvement. But the payoff is big. It is a reliable AI that understands, retrieves, and reasons with your organization’s real knowledge.

Whether you’re developing in-house or partnering with experts like Azumo, the key is to build RAG not as a chatbot, but as a layer of enterprise intelligence. It should be precise, adaptive, and grounded in truth.

.avif)