Artificial Intelligence (AI) is rapidly changing the way we live and work, and it's crucial to understand the programming languages used in the development of AI systems. AI is a complex field that requires specialized knowledge and skills to create sophisticated algorithms and models.

At Azumo, we’ve developed AI solutions for companies across various industries. With hands-on experience using Python, Java, C++, and other languages, we’ve seen firsthand how these languages perform in real-world applications. This gives us the practical knowledge needed to evaluate and recommend the best programming languages for different AI projects. We’re not just theorizing; we’re working with these tools every day to build real, scalable AI solutions.

In this blog post, we will explore the most commonly used AI programming languages, their benefits, and the factors to consider when choosing a programming language for an AI project.

What Are AI Coding Languages?

Python

Python is the most widely used language for AI, largely because of its simplicity and readability. It also provides a wealth of libraries and frameworks that make it easy to develop AI applications, such as TensorFlow, PyTorch, and scikit-learn.

Key Libraries: TensorFlow, PyTorch, scikit-learn, Keras, OpenCV

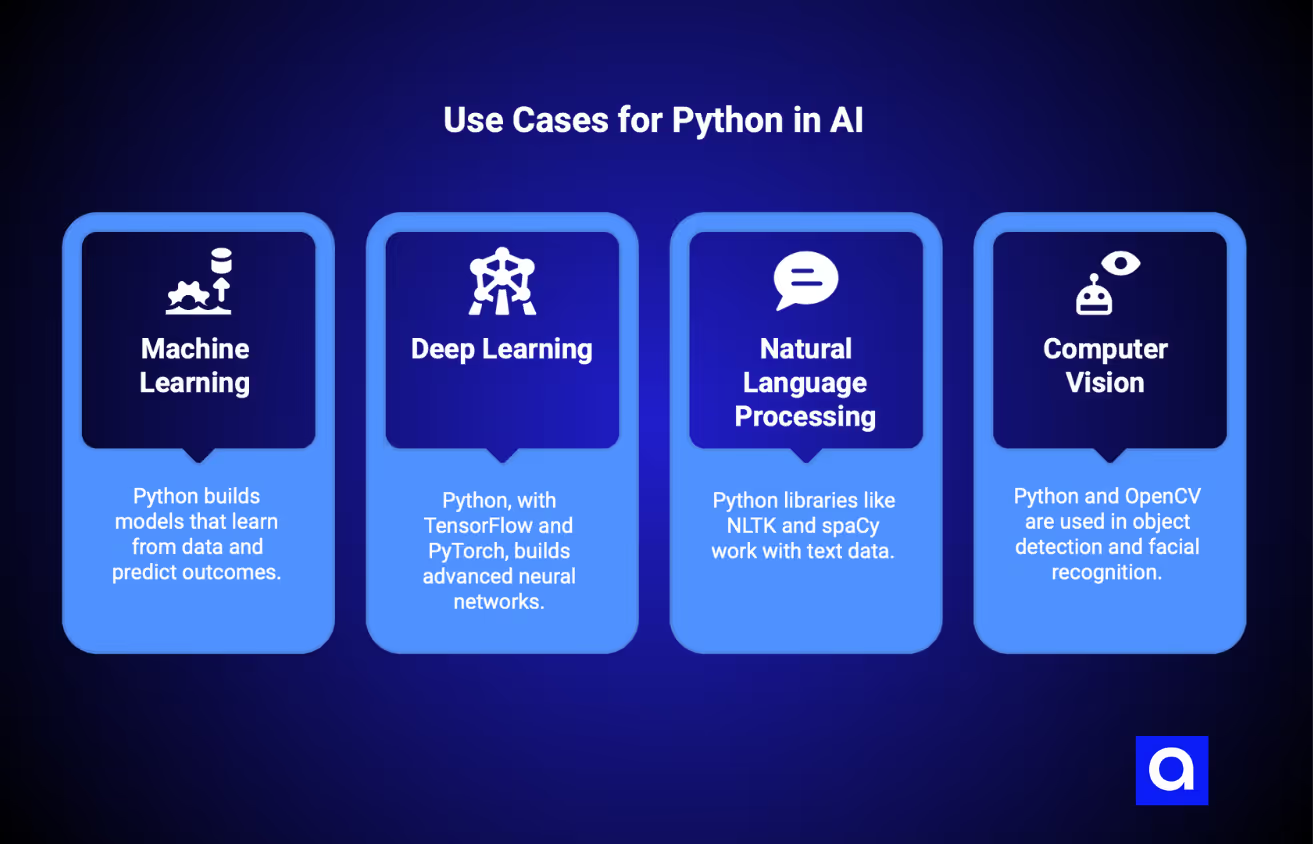

Use Cases for Python in AI:

- Machine Learning: Python is widely used to build models that can learn from data and make predictions.

- Deep Learning: With libraries like TensorFlow and PyTorch, Python is essential for building advanced neural networks.

- Natural Language Processing (NLP): Libraries like NLTK and spaCy are perfect for working with text-based data.

- Computer Vision: Python, along with OpenCV, is used in various applications such as object detection and facial recognition.
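The fit/predict workflow behind the machine learning use case can be sketched without any third-party packages. This is a minimal illustration, assuming a single numeric feature and ordinary least squares; the `LinearModel` class and the toy data are hypothetical. In practice you would reach for scikit-learn's `LinearRegression`, whose `fit`/`predict` naming this sketch mirrors.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    """Hypothetical one-feature linear model fitted by ordinary least squares."""

    slope: float = 0.0
    intercept: float = 0.0

    def fit(self, xs: list, ys: list) -> "LinearModel":
        # OLS for a single feature: slope = cov(x, y) / var(x)
        n = len(xs)
        mean_x = sum(xs) / n
        mean_y = sum(ys) / n
        cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        var = sum((x - mean_x) ** 2 for x in xs)
        self.slope = cov / var
        self.intercept = mean_y - self.slope * mean_x
        return self

    def predict(self, xs: list) -> list:
        return [self.slope * x + self.intercept for x in xs]


# Toy training data that follows y = 2x + 1 exactly
model = LinearModel().fit([1, 2, 3, 4], [3, 5, 7, 9])
print(model.slope, model.intercept)  # 2.0 1.0
print(model.predict([5]))            # [11.0]
```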

AI Jobs Using Python

- Machine Learning Engineers: Rely on Python to create and run machine learning models using TensorFlow and PyTorch.

- Data Scientists: Use Python for data manipulation and analysis with libraries like Pandas, scikit-learn, and Matplotlib.

- NLP Engineers: Use Python libraries like NLTK and spaCy to create text-based AI systems.

- Computer Vision Engineers: Use Python's OpenCV to create systems for image and video processing.

Java and Java Libraries for AI

Java is another popular AI programming language, and it provides a robust platform for building AI applications. Java has several libraries and frameworks that make it easy to develop AI systems, such as Weka, Deeplearning4j, and Java-ML.

Key Libraries: Weka, Deeplearning4j, Java-ML, Apache OpenNLP

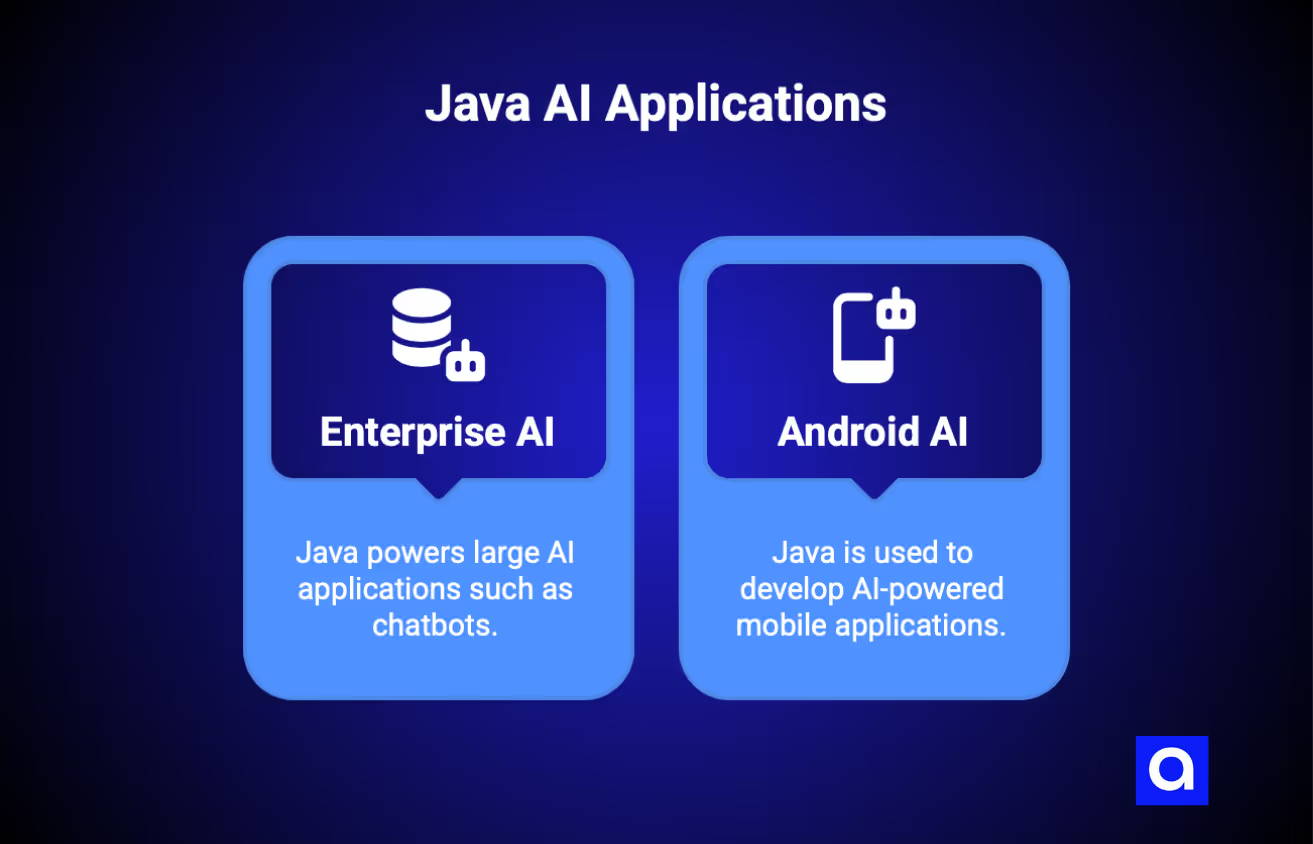

Use Cases for Java in AI:

- Enterprise AI Solutions: Used in large-scale AI applications like chatbots and predictive maintenance.

- Android AI Development: Java, alongside Kotlin, is a core language for building AI-powered Android applications.

AI Jobs That Use Java

- Enterprise AI Engineers: Develop large-scale AI systems, often for businesses, with Java and libraries like Deeplearning4j and Weka.

- Android Developers: Develop Android apps with AI features using Java.

- Big Data Engineers: Develop AI-driven big data implementations with Apache Hadoop and Apache Spark using Java.

C++

C++ is a fast and efficient AI programming language that is often used for developing AI systems that require high performance. C++ is used in the development of several popular AI frameworks, such as TensorFlow and PyTorch.

Key Libraries: TensorFlow C++ API, OpenCV, Dlib

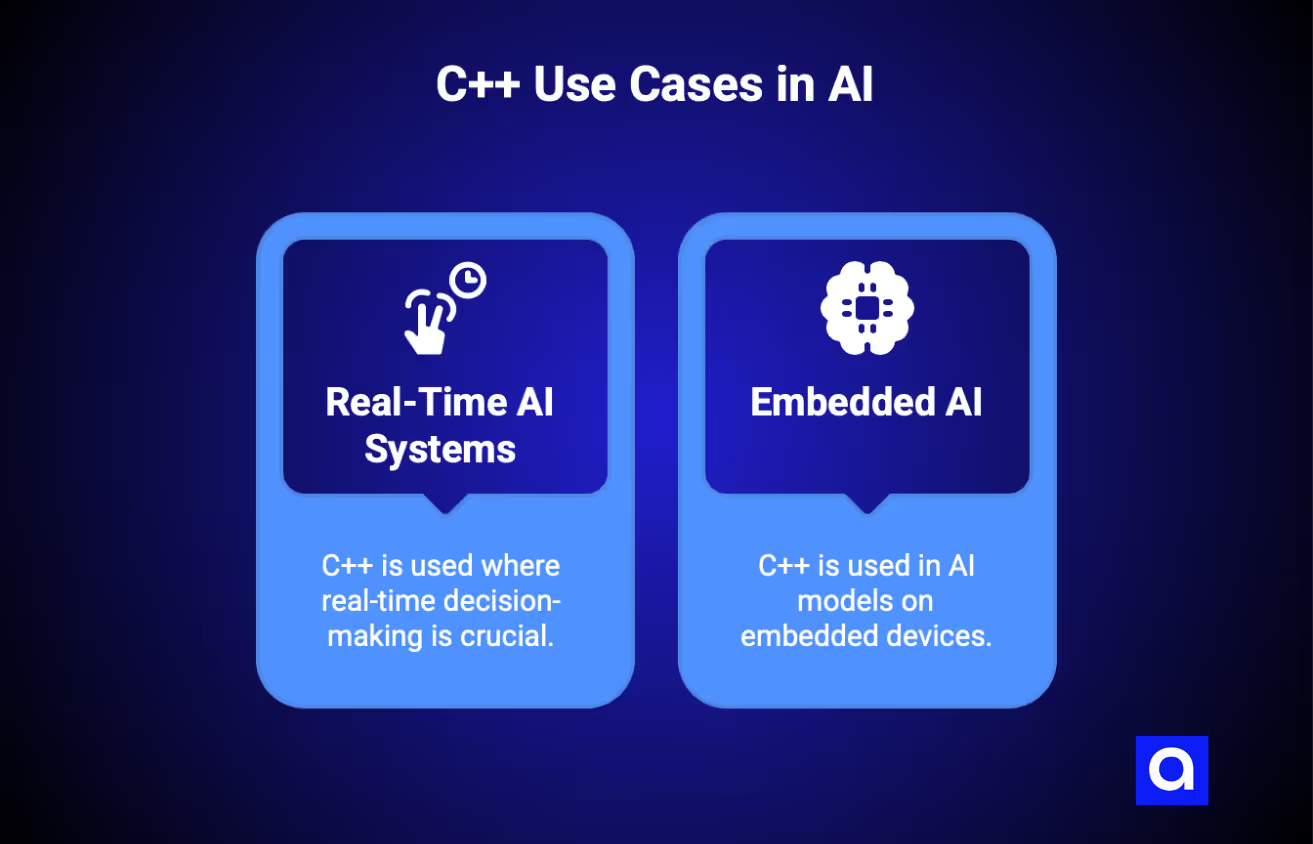

Use Cases for C++ in AI:

- Real-Time AI Systems: C++ is widely used in applications where real-time decision-making is crucial.

- Embedded AI: Used to run AI models on embedded devices such as mobile phones or cameras.

AI Jobs That Use C++

- Robotics Engineers: Develop AI systems that handle real-time decision-making in robots and other autonomous systems.

- Game AI Engineers: Design game AI behaviors using C++.

- Computer Vision Engineers: Use C++ with OpenCV for tasks such as object detection and facial recognition.

Julia

Julia is a relatively young programming language (first released in 2012) that is gaining popularity in the AI community. Designed for numerical and scientific computing, it is known for its speed and efficiency, making it well suited to AI systems that require high performance.

Key Libraries: Flux.jl, MLJ.jl, DifferentialEquations.jl

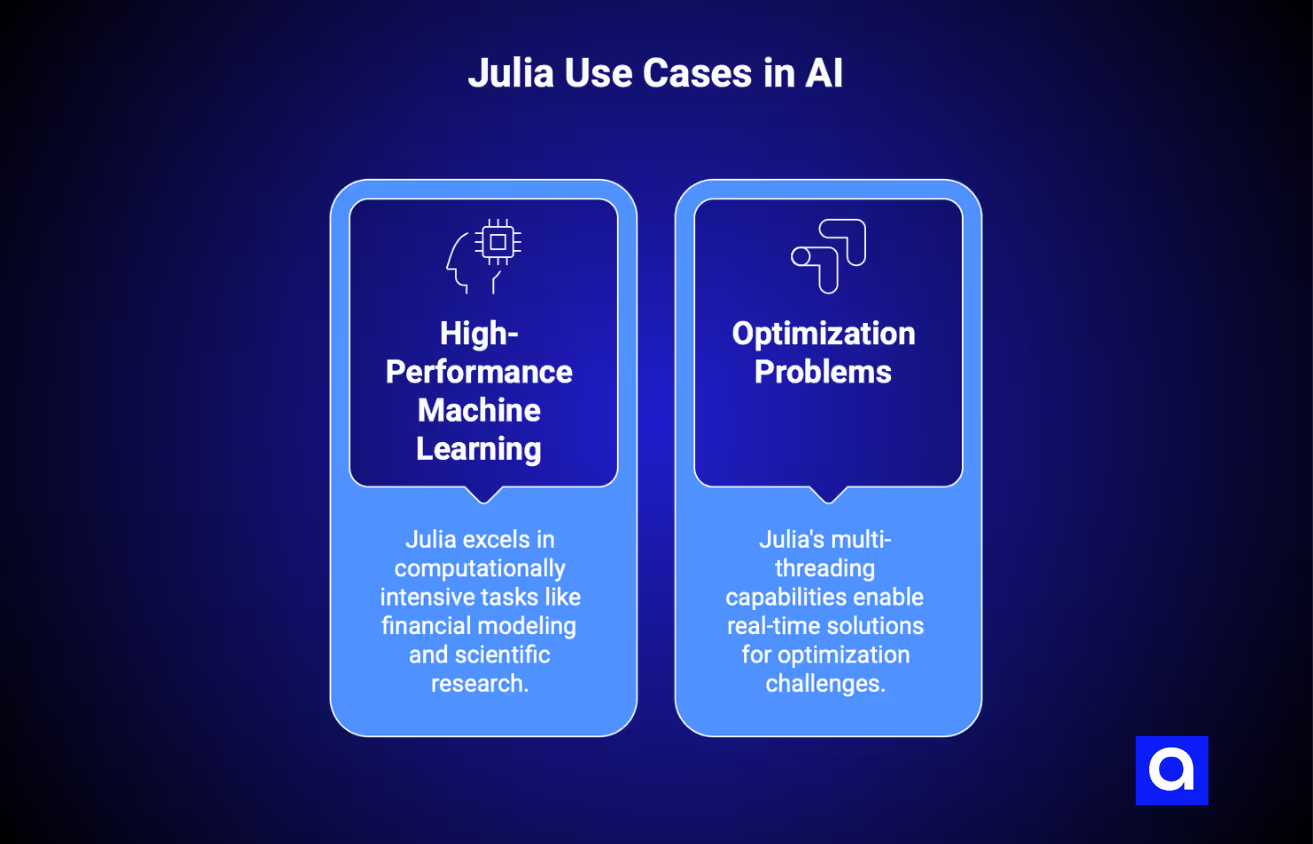

Use Cases for Julia in AI:

- High-Performance Machine Learning: Julia is well-suited for applications that require significant computation power, such as financial modeling and scientific research.

- Optimization Problems: Its multi-threading capabilities make it ideal for solving optimization problems in real-time.

AI Roles That Use Julia

- AI Researchers: Use Julia for high-performance machine learning models as well as computational research.

- High-Performance Computing Data Scientists: Use Julia for optimization and real-time data analysis.

- Optimization Engineers: Use Julia's multi-threading capabilities to solve challenging optimization problems.

R

R is a popular programming language that is used for data analysis and statistical computing. R has several libraries and frameworks that make it easy to develop AI applications, such as caret and mlr.

Key Libraries: caret, mlr, xgboost, ggplot2

Use Cases for R in AI:

- Statistical Analysis: Used to analyze large datasets and find hidden patterns.

- Machine Learning: Especially useful for classification, regression, and clustering tasks.

- Data Visualization: Helps to create meaningful visual representations of data, making it easier to interpret and analyze.

AI Roles That Use R

- Data Scientists: Use R for data manipulation, statistical modeling, and machine learning.

- Statisticians: Use R for predictive modeling in health and finance applications.

- AI Researchers: Use R packages like caret and xgboost to try out algorithms and models.

Prolog

Prolog is a logic-based programming language that is often used for developing AI systems that require reasoning and decision-making capabilities.

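Key Libraries and Tools: SWI-Prolog (a widely used Prolog implementation), ProbLog (a probabilistic extension of Prolog), CLIPS (a separate rule-based expert-system tool often used for similar problems)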

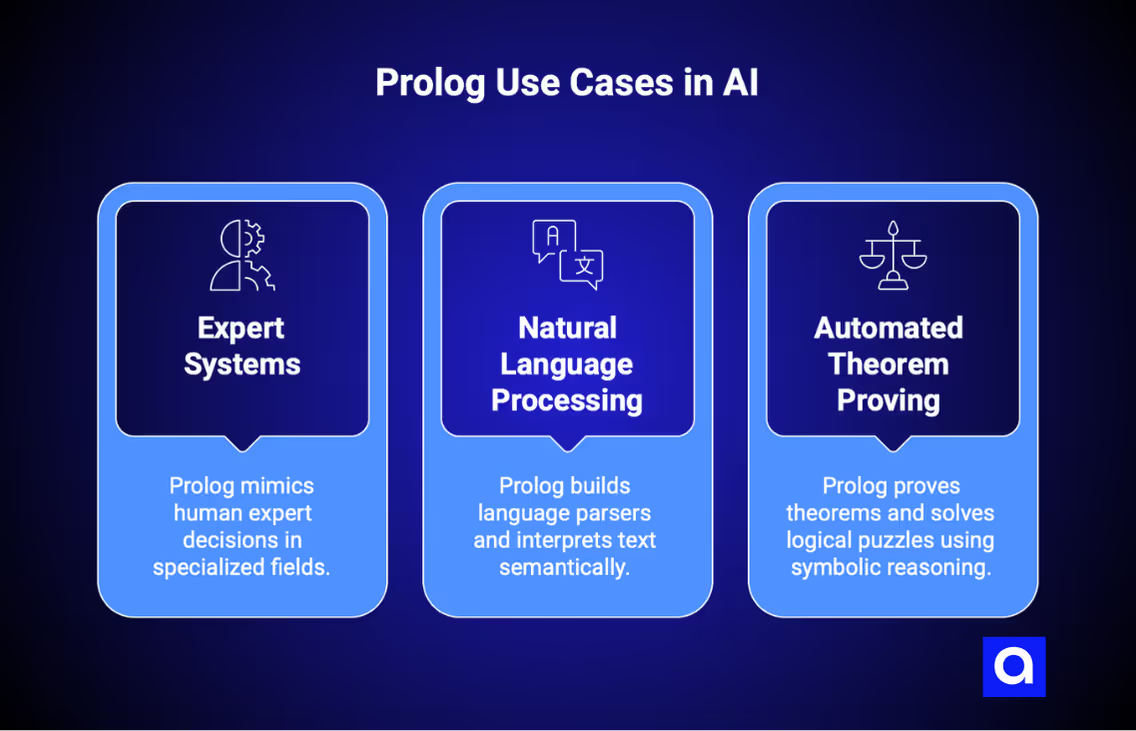

Use Cases for Prolog in AI:

- Expert Systems: Prolog is often used in expert systems where a computer mimics the decision-making ability of a human expert in specialized fields like medical diagnosis or legal reasoning.

- Natural Language Processing (NLP): Prolog’s logic-based approach makes it suitable for building language parsers and systems that require semantic interpretation of text.

- Automated Theorem Proving: Prolog can be used for proving theorems or solving logical puzzles, thanks to its ability to handle symbolic reasoning.
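To keep this article's examples in a single language, the sketch below uses Python rather than Prolog to illustrate the idea behind Prolog-style reasoning: proving a goal by working backward through rules until it reaches known facts. It is a simplified toy built on stated assumptions (propositional rules only, no variables or unification, plus a cycle guard that standard Prolog does not have), and the rule names are hypothetical. The equivalent Prolog clauses are shown in the comments.

```python
# Each goal maps to alternative rule bodies; a body is a list of subgoals that
# must all be proved (a Horn clause). An empty body marks a fact.
RULES = {
    "has_fever": [[]],                            # has_fever.
    "has_cough": [[]],                            # has_cough.
    "has_flu": [["has_fever", "has_cough"]],      # has_flu :- has_fever, has_cough.
    "needs_rest": [["has_flu"], ["has_injury"]],  # needs_rest :- has_flu. needs_rest :- has_injury.
}


def prove(goal: str, rules: dict, visiting: frozenset = frozenset()) -> bool:
    """Depth-first backward chaining: try each rule body for `goal` in order."""
    if goal in visiting:  # cycle guard (not part of standard Prolog semantics)
        return False
    for body in rules.get(goal, []):
        # all() over an empty body is True, so facts succeed immediately
        if all(prove(sub, rules, visiting | {goal}) for sub in body):
            return True
    return False  # no rule succeeds, so the goal fails (closed-world assumption)


print(prove("needs_rest", RULES))  # True
print(prove("has_injury", RULES))  # False
```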

AI Professions Utilizing Prolog

- Expert Systems Engineers: Use Prolog to implement AI systems that mimic human decision-making within a given field, e.g., health or law.

- NLP Engineers: Leverage Prolog's logic programming paradigm for parsing and understanding text-based data.

- Theorem Proving Engineers: Use Prolog to design systems that prove the validity of mathematical or logical expressions.

Rust

Rust is a systems programming language that is often used for developing AI systems that require high performance and low latency.

Key Libraries: RustLearn, Tch-rs, ndarray

Use Cases for Rust in AI:

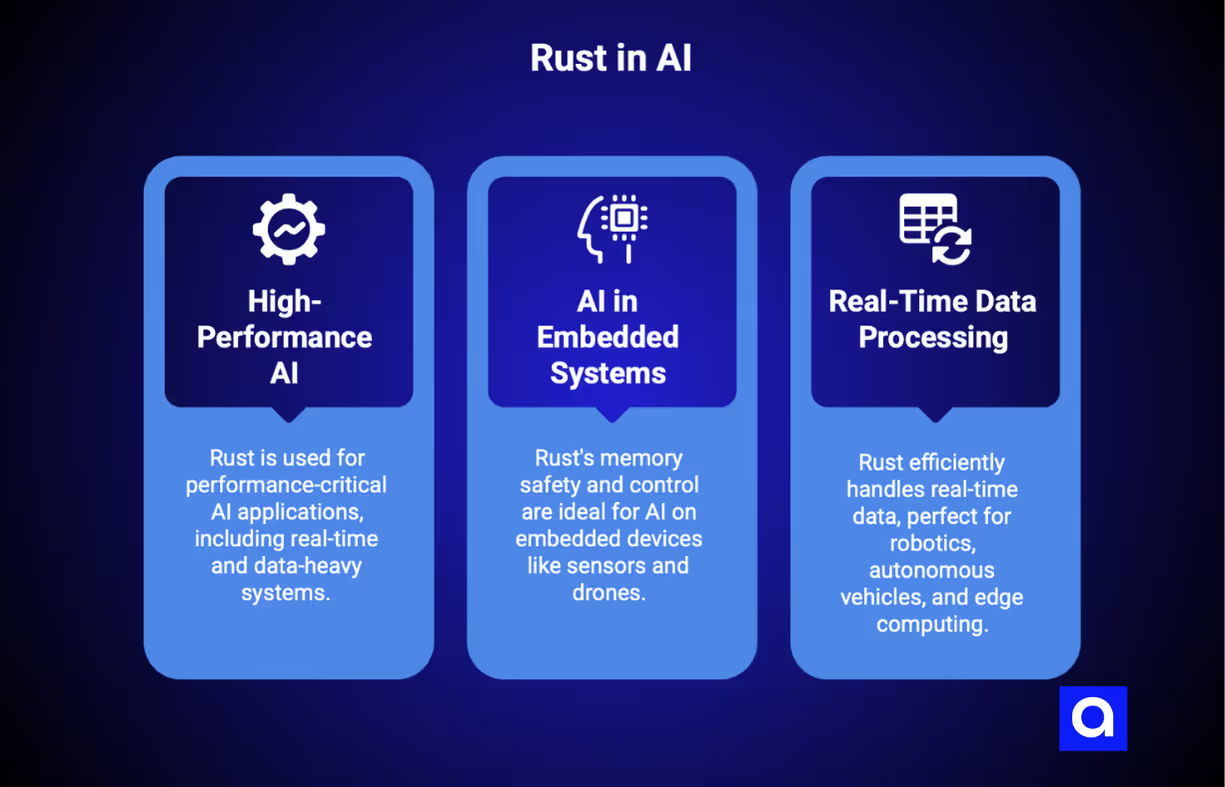

- High-Performance AI Models: Rust is increasingly being adopted for performance-critical AI applications, including real-time AI systems and data-heavy applications.

- AI in Embedded Systems: Rust’s memory safety and low-level control make it ideal for AI applications running on embedded devices like sensors, drones, and autonomous systems.

- Real-Time Data Processing: Rust’s ability to handle real-time data efficiently makes it perfect for applications in robotics, autonomous vehicles, and edge computing.

AI Roles That Utilize Rust

- Systems Engineers: Build high-performance AI systems using Rust.

- Embedded AI Developers: Use Rust for AI applications that run on low-power hardware like drones and sensors.

- Real-Time AI Engineers: Develop AI applications for robotics, autonomous systems, and edge computing where real-time computation of data is critical.

Scala

Scala is a multi-paradigm language that combines functional and object-oriented programming on the Java Virtual Machine (JVM). It is often used for developing AI systems that require high scalability and parallel processing.

Key Libraries: Spark MLlib, Breeze, Deeplearning4j

Use Cases for Scala in AI:

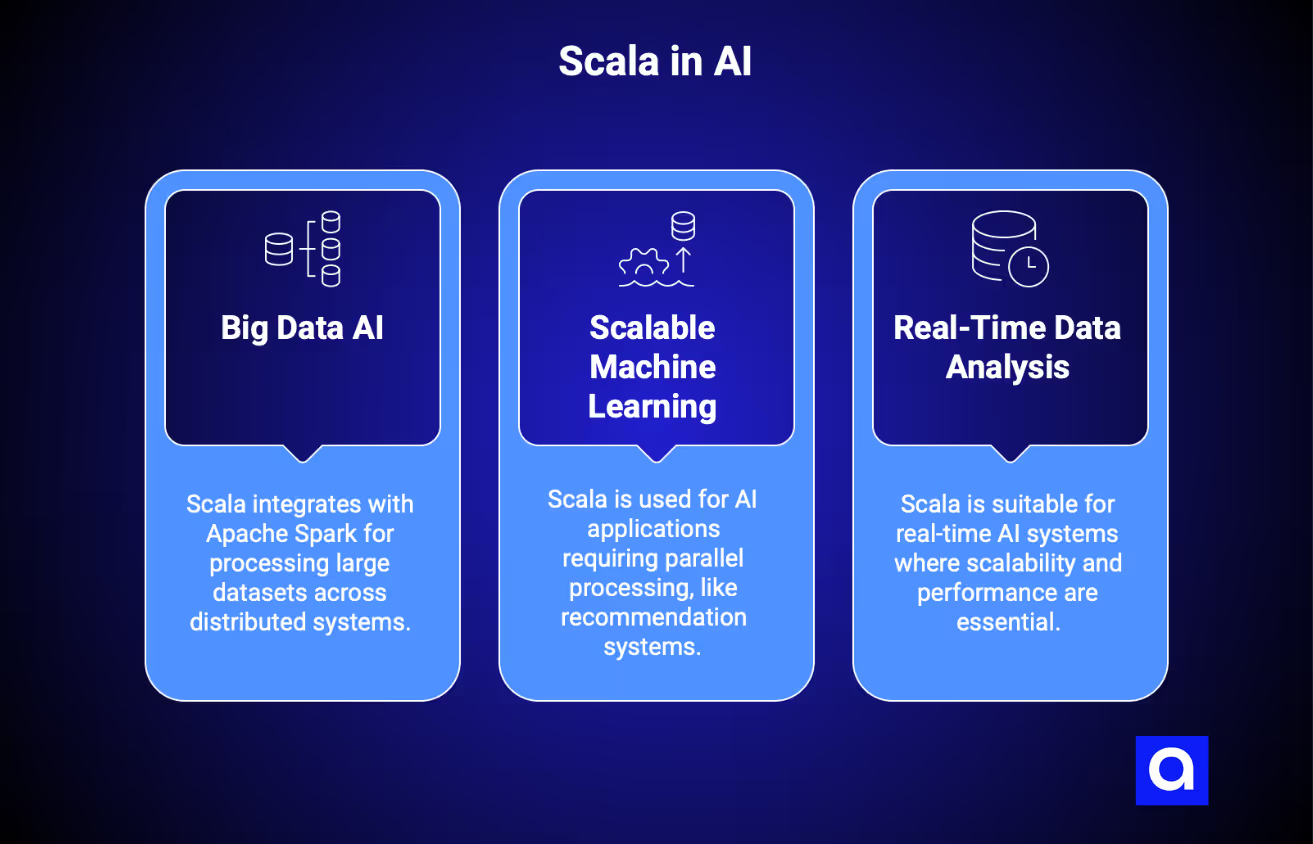

- Big Data AI: Scala’s integration with Apache Spark makes it perfect for processing and analyzing large datasets across distributed systems.

- Scalable Machine Learning: Scala is used for AI applications that require parallel processing, such as recommendation systems, data mining, and fraud detection.

- Real-Time Data Analysis: It’s also suitable for real-time AI systems, where scalability and performance are essential.

AI Professions Utilizing Scala

- Big Data Engineers: Leverage Scala capabilities with Apache Spark to manage and analyze large-scale data for AI use.

- Machine Learning Engineers: Use Scala to create scalable machine learning models, especially in big data environments.

- Real-Time Data Analysts: Create AI systems that require high-speed, real-time data analysis, such as recommendation systems or fraud detection.

JavaScript

JavaScript is a popular programming language used in web development, and it's increasingly being used for developing AI systems. JavaScript has several libraries and frameworks that make it easy to develop AI applications, such as TensorFlow.js and Brain.js.

Key Libraries: TensorFlow.js, Brain.js, Synaptic.js

Use Cases for JavaScript in AI:

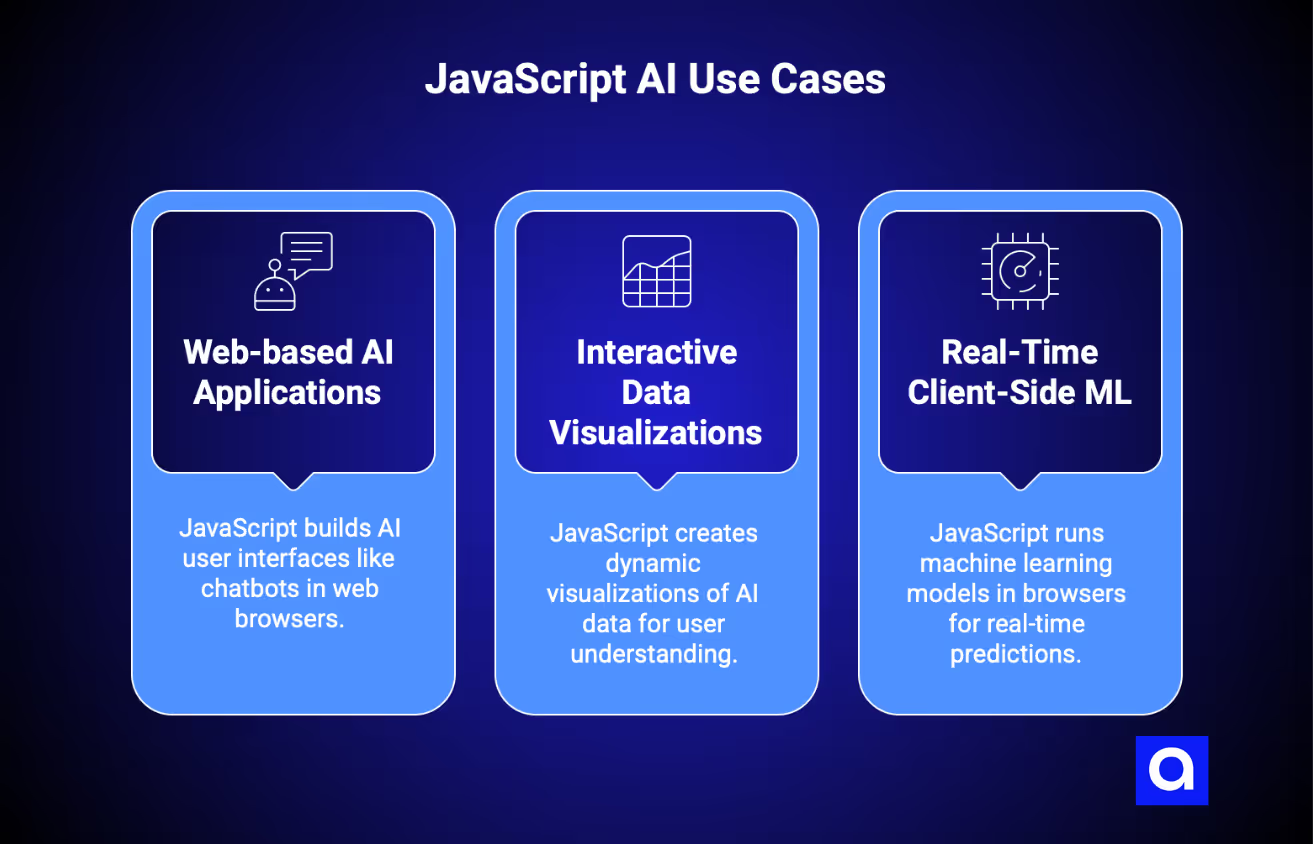

- Web-based AI Applications: JavaScript enables developers to build AI-driven user interfaces (e.g., chatbots, voice recognition, real-time translations) that run directly in web browsers.

- Interactive Data Visualizations: JavaScript is used to create dynamic, interactive visualizations of AI-generated data, making it easier for users to understand complex AI models and results.

- Real-Time Client-Side Machine Learning: With TensorFlow.js, JavaScript can run machine learning models directly in the browser, allowing for real-time predictions and inference without needing to send data to a server.

AI Jobs That Use JavaScript

- Web Developers: Create AI-driven user interfaces with TensorFlow.js and Brain.js.

- Front-End Developers: Integrate machine learning models directly into the browser for real-time inference.

- Data Visualization Experts: Build interactive data visualizations using D3.js to display AI output.

Top 9 AI Programming Languages in 2024: A Detailed Comparison

What Makes a Programming Language Ideal for AI Development?

When choosing a programming language for AI, here are some factors to keep in mind:

- Ease of Use: The language should have a syntax that’s easy to understand, especially for beginners.

- Extensive Libraries & Frameworks: A rich collection of tools and pre-built models is crucial for accelerating development.

- Performance: For computationally intensive tasks, languages that execute faster are preferable.

- Community & Support: A large, active community can help you overcome challenges with tutorials, forums, and documentation.

How to Choose an AI Programming Language for a Project

When choosing a programming language for an AI project, there are several factors to consider, including:

What type of application is it?

The type of AI application you are building will influence the choice of programming language. For example, if you're building a machine learning model, Python is often the preferred choice.

What platform will it run on?

The platform where the AI application will run will also influence the choice of programming language. For example, if the AI application will run on the web, JavaScript may be the preferred choice.

What are the maintenance issues?

Maintenance considerations also influence the choice. For example, a language with a large developer community makes it easier to find solutions to problems and keep the application up to date.

Current state: Python’s early dominance meets enterprise realities

Python currently dominates with 51% developer adoption and powers 80% of AI agent implementations, a position unlikely to change dramatically despite its performance limitations. While some vendors predict radical shifts, organizations are instead adopting pragmatic multi-language strategies that leverage Python’s unmatched AI ecosystem while incorporating systems languages like Rust and Go for performance-critical components. As the agentic AI market grows from $13.8 billion in 2024 and agentic AI spreads toward an expected 33% of enterprise software by 2028, success will come from orchestrating language diversity rather than betting on single-language revolutions.

The programming language landscape for agentic AI underwent seismic shifts in 2024-2025, with Python leading the way while simultaneously revealing critical limitations. According to GitHub’s Octoverse 2024 report, Python overtook JavaScript as the platform’s most popular language for the first time in over a decade, driven by a 98% surge in AI project contributions and 92% increase in Jupyter Notebook usage. Stack Overflow’s 2024 Developer Survey confirms this trend, with 76% of developers now using or planning to use AI tools, up from 70% in 2023.

Python’s ecosystem advantage appears insurmountable at first glance. The language boasts over 300,000 AI/ML packages, comprehensive framework support through LangChain, AutoGen, CrewAI, and LangGraph, and native integration with every major LLM provider. Microsoft’s Azure AI Foundry, Google’s Vertex AI, Amazon Bedrock, and OpenAI’s agent platforms all prioritize Python as their primary development language. Real-world implementations demonstrate this dominance - BlueOceanAI achieved 97% operational improvement building their multi-agent marketing platform primarily in Python, processing 1.2 billion tokens monthly using Amazon Bedrock with Claude models.

Yet enterprise deployments are exposing Python’s performance trade-offs. Performance comparisons between languages are highly workload-dependent, and architectural choices matter more than raw language speed. While synthetic benchmarks show Python can run 60x slower than Rust for certain CPU-intensive loops, real-world AI workloads tell a different story. Most AI operations are dominated by GPU-accelerated tensor operations where language overhead becomes negligible: when the work is dominated by large CUDA kernels, a PyTorch model performs nearly identically whether it is called from Python or C++. (PyTorch C++/CUDA background). The actual bottlenecks appear in areas like web service handling, where Python’s FastAPI manages 12,000 requests per second compared to 183,000 RPS for Rust’s Actix, though even here proper caching and load balancing often matter more than language choice. (TechEmpower Framework Benchmarks). Memory overhead presents more legitimate concerns, with Python requiring 150-400MB of baseline memory versus 15-50MB for Rust, creating genuine challenges for edge computing and serverless deployments.
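The gap between interpreted loops and native code is easy to see in plain Python. In this sketch, the built-in `sum()` runs its loop in C, which is loosely analogous to how PyTorch or NumPy hand whole tensor operations to compiled kernels. Absolute timings depend on the machine and Python version, so none are claimed here.

```python
import timeit

data = list(range(200_000))


def python_loop_sum(values: list) -> int:
    # Each iteration is dispatched by the interpreter, one bytecode at a time
    total = 0
    for v in values:
        total += v
    return total


# Both produce the same answer; only where the loop runs differs
assert python_loop_sum(data) == sum(data)

loop_time = timeit.timeit(lambda: python_loop_sum(data), number=10)
builtin_time = timeit.timeit(lambda: sum(data), number=10)
print(f"interpreted loop: {loop_time:.3f}s, built-in sum: {builtin_time:.3f}s")
```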

Language selection shapes organizational capabilities profoundly

The technical architecture of programming languages directly impacts an organization’s ability to build, deploy, and scale AI chatbots across three critical dimensions: development velocity, production performance, and enterprise integration capability. For AI chatbots, a robust architecture keeps natural language processing running smoothly, which supports better user interactions and more accurate responses.

Development velocity varies by language choice, though the differences are often overstated. Python enables faster prototyping through its extensive libraries and forgiving syntax, with teams reporting 3-5x faster initial development for proof-of-concepts. A financial services firm modernizing 400 legacy systems leveraged this advantage, using Python for AI agent development while maintaining Java for enterprise system compatibility, achieving over 50% reduction in modernization time. However, the velocity advantage narrows in production development where type safety and testing become critical. The real bottleneck is rarely language speed but rather architectural decisions - poor database design or inefficient API calls will dwarf any language-level performance differences.
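Inefficient API calls are often cheaper to fix with caching than with a language switch. The sketch below assumes a hypothetical `fetch_embedding` function standing in for a slow remote call, and uses the standard library's `functools.lru_cache` so repeated queries skip the simulated network round trip.

```python
import functools
import time

remote_calls = 0


@functools.lru_cache(maxsize=1024)
def fetch_embedding(text: str) -> tuple:
    """Hypothetical stand-in for a slow remote embedding API call."""
    global remote_calls
    remote_calls += 1
    time.sleep(0.05)  # simulated network latency
    # Dummy vector; returning a tuple keeps cached values immutable
    return tuple(float(ord(c)) for c in text[:4])


for query in ["refund policy", "refund policy", "shipping", "refund policy"]:
    fetch_embedding(query)

print(remote_calls)                  # 2
print(fetch_embedding.cache_info())  # CacheInfo(hits=2, misses=2, maxsize=1024, currsize=2)
```

An in-process cache like this only helps a single worker; once a service scales horizontally, a shared cache is typically the next step.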

Production performance requirements increasingly favor systems languages for specific components rather than complete replacements. Rust delivers excellent performance with memory safety guarantees, but this advantage primarily matters for infrastructure components like model serving engines or high-frequency data ingestion pipelines. The often-cited “2x speed advantage over Go” comes from microbenchmarks that don’t reflect full system performance. C++ maintains relevance primarily for lowest-level optimizations: integrated circuit manufacturers running customized Llama models, for example, choose C++ not for general development but for specific inference optimization kernels. (See TensorRT and PyTorch C++/TensorFlow C++ backends). Go emerges as a practical choice for service orchestration, with its lightweight goroutines enabling efficient concurrent agent coordination, though the performance gains depend heavily on whether workloads are I/O-bound or CPU-bound.

Enterprise integration capabilities determine real-world deployment success. Java’s resurgence stems from unparalleled enterprise system compatibility and mature tooling ecosystems. Azul Systems’ 2025 State of Java Survey, which may be biased given Azul’s focus on Java, found that 50% of participants building AI functionality use Java, with the language benefiting from Project Panama and Project Babylon for improved GPU performance. JavaScript and TypeScript dominate web-integrated agents, with 38.5% developer adoption and native support for real-time streaming interfaces critical for customer-facing AI applications.

Industry adoption reveals clear patterns and strategic choices

Analysis of enterprise implementations reveals distinct patterns in language selection driven by use case requirements and organizational contexts. Major technology platforms demonstrate this strategic alignment clearly.

Microsoft’s Azure AI Foundry exemplifies the multi-language approach, using C# for enterprise Windows/Office ecosystem integration, Python for AI/ML development, and TypeScript for cross-platform web interfaces. Their 100,000+ enterprise clients, including Standard Bank and Thomson Reuters, benefit from this architectural flexibility. Google takes a similar approach with Python for AI research, JavaScript/TypeScript for Workspace integration, and Go for backend infrastructure, leveraging their TPU optimization for model inference. Snap achieved 2.5x engagement increases using Google’s Gemini models through this multi-language architecture.

Startups and AI-first companies show different patterns. OpenAI initially released its Agents SDK for Python only, prioritizing ecosystem compatibility and developer familiarity. Shopify’s e-commerce AI implementation uses LLaVA (a Llama-based vision model) with Python for ML development and JavaScript for platform integration, avoiding per-token inference costs while improving SEO performance. These choices reflect the trade-off between development speed and long-term scalability that every organization must navigate.

Open-source frameworks reinforce language-specific strengths. LangChain and LangGraph dominate the Python ecosystem with modular architectures supporting 600+ integrations. CrewAI reports that its role-based multi-agent framework runs 5.76x faster than LangGraph in certain benchmarks, suggesting that framework choice within a language ecosystem can significantly impact performance. JavaScript’s LangChain.js and Vercel AI SDK 3.1 bring modern TypeScript support with React hooks and streaming optimization, while Rust’s Candle and Burn frameworks target performance-critical deployments with WASM compilation for edge computing.

Today five languages compete for Agentic AI applications

- Python maintains its position as the essential foundation for agentic AI development despite acknowledged limitations. Its comprehensive ecosystem, with frameworks like LangChain supporting chains, agents, and RAG systems, provides unmatched rapid prototyping capabilities. The language excels at orchestration and coordination, with native LLM provider support and extensive vector database integrations through Pinecone, Chroma, and Weaviate. However, the Global Interpreter Lock (GIL) prevents threads from executing Python bytecode in parallel, which severely restricts CPU-bound parallelism and forces complex multiprocessing workarounds that add 50-100ms overhead per task. (Python 3.13 introduced an optional, experimental free-threaded build without the GIL.)

- JavaScript and TypeScript capture the web-native agent market with explosive growth from 12% adoption in 2017 to 35% in 2024. The ecosystem provides full-stack development capability with the Node.js runtime, excellent serverless support through AWS Lambda and Vercel, and native browser integration for client-side AI applications. LangChain.js brings enterprise-grade agent orchestration to JavaScript, while the Vercel AI SDK provides modern streaming interfaces optimized for real-time user interactions. Yet the single-threaded event loop limits CPU-bound parallelism for complex agent workloads (worker threads help but add complexity), and the ecosystem lacks the AI-specific tooling depth of Python.

- Rust emerges as the performance and safety leader, climbing from 15th to 11th in Pluralsight’s language rankings within three years. The language delivers zero-cost abstractions with compile-time memory management, preventing data races and memory-safety bugs such as use-after-free, which is critical for long-running agent processes (safe Rust can still leak memory, for example through reference cycles). With deployment footprints of 5-20MB, Rust excels at edge computing and embedded AI agents. The steep learning curve remains a barrier - Python developers require 3-6 months to achieve Rust proficiency, and the limited AI ecosystem requires bindings to Python or C++ implementations.

- Go provides cloud-native scalability with built-in concurrency through goroutines ideal for agent orchestration. Single binary deployments with 20-60MB footprints excel in containerized environments, while superior I/O performance handles thousands of concurrent connections. Major cloud infrastructure built with Go (Kubernetes and Docker) provides natural integration advantages. However, limited native AI/ML libraries require external API calls or Python interop, and minimal GPU acceleration capabilities restrict compute-intensive applications.

- C++ retains critical importance for high-performance infrastructure, delivering maximum performance with manual memory management and SIMD optimizations. Native CUDA support and established ML framework backends (TensorFlow C++ API, PyTorch C++) enable hardware acceleration crucial for inference serving. Predictable sub-millisecond latency serves robotics and autonomous vehicle applications. Yet development complexity increases 5-10x compared to Python, with limited high-level agent orchestration tools and significant maintenance burden for security vulnerability management.
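Much agent work is I/O-bound (waiting on LLM APIs, databases, or tools), and for that workload Python's `asyncio` provides concurrency on a single thread, so the GIL is not the limiting factor; CPU-bound work still needs processes or native code. The sketch below assumes four hypothetical tool calls, with `asyncio.sleep` standing in for network latency. It plays a role similar to the goroutine-based orchestration described for Go.

```python
import asyncio
import time


async def call_tool(name: str, latency: float) -> str:
    """Hypothetical agent tool call; asyncio.sleep stands in for network I/O."""
    await asyncio.sleep(latency)
    return f"{name}: done"


async def run_agent_step() -> list:
    tools = [("search", 0.2), ("crm_lookup", 0.2), ("calendar", 0.2), ("weather", 0.2)]
    # gather() runs the coroutines concurrently on one thread: while one awaits
    # I/O, the event loop advances the others. Results keep the input order.
    return await asyncio.gather(*(call_tool(n, t) for n, t in tools))


start = time.perf_counter()
results = asyncio.run(run_agent_step())
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f}s")  # close to 0.2s, versus about 0.8s if awaited one by one
```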

Ecosystem maturity determines practical deployment success

The strength of language ecosystems directly correlates with successful agentic AI deployment, with framework availability, cloud platform support, and community resources creating compound advantages for established languages.

Python’s ecosystem maturity remains unmatched. Beyond raw package count, Python offers enterprise-grade features across its framework landscape. LangGraph provides stateful graph execution with persistent memory and custom breakpoints for complex workflows. AutoGen enables multi-agent conversations with mixed LLM support. CrewAI simplifies production deployment with YAML configuration and built-in memory management using ChromaDB and SQLite. Cloud platforms provide universal first-class Python support - AWS Bedrock, Azure AI Foundry, and Google Vertex AI all prioritize Python integration with comprehensive documentation and enterprise features.
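Stateful graph execution of the kind LangGraph provides can be illustrated with a toy runner: nodes transform a shared state, and routing functions pick the next node from that state. The `ToyGraph` class below is hypothetical and is not LangGraph's API; it keeps state only in memory and omits persistence, checkpoints, and breakpoints.

```python
from typing import Callable, Dict, Optional

State = dict
Router = Callable[[State], Optional[str]]


class ToyGraph:
    """Hypothetical minimal state-graph runner (not the LangGraph API)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_router(self, src: str, router: Router) -> None:
        # The router reads the state after `src` runs and names the next node,
        # or returns None to stop.
        self.routers[src] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        node: Optional[str] = start
        for _ in range(max_steps):  # step limit guards against endless loops
            if node is None:
                break
            state = self.nodes[node](state)
            state["trace"] = state.get("trace", []) + [node]
            router = self.routers.get(node)
            node = router(state) if router else None
        return state


def draft(state: State) -> State:
    attempt = state.get("attempt", 0) + 1
    return {**state, "attempt": attempt, "draft": f"answer v{attempt}"}


def review(state: State) -> State:
    # Toy approval rule: accept the second draft
    return {**state, "approved": state["attempt"] >= 2}


graph = ToyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_router("draft", lambda s: "review")
graph.add_router("review", lambda s: None if s["approved"] else "draft")

final = graph.run("draft", {})
print(final["draft"], final["trace"])  # answer v2 ['draft', 'review', 'draft', 'review']
```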

JavaScript’s ecosystem shows the fastest growth trajectory. LangGraph.js, used in production by LinkedIn, Uber, and Klarna, brings graph-based orchestration to JavaScript with excellent streaming support. The npm registry’s 2.5 million packages provide extensive integration options, while modern frameworks emphasize TypeScript-first development for improved type safety. Cloud platforms increasingly support JavaScript through serverless functions and edge computing capabilities, with AWS Lambda and Cloudflare Workers providing sub-second cold starts.

Systems languages face ecosystem challenges despite technical advantages. Rust frameworks like Candle provide GPU support and WASM deployment but lack high-level abstractions for agent development. Go’s LangChainGo offers basic LLM integration but limited features compared to Python equivalents. C++ provides excellent inference optimization through ONNX Runtime and TensorRT but minimal agent orchestration capabilities. These limitations force organizations into multi-language architectures, increasing complexity but enabling optimal performance for specific components.
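At the language boundary, Python typically calls compiled code through a foreign-function interface. Production projects usually rely on purpose-built binding tools such as PyO3 for Rust or pybind11 for C++; the minimal sketch below instead uses the standard library's `ctypes` to call the C math library's `sqrt`, assuming a POSIX system (Linux or macOS) where that library can be located.

```python
import ctypes
import ctypes.util

# Locate the C math library (e.g. libm.so.6 on Linux). If the lookup fails,
# fall back to symbols already loaded into the Python process.
libm_path = ctypes.util.find_library("m")
libm = ctypes.CDLL(libm_path) if libm_path else ctypes.CDLL(None)

# Declare the C signature, double sqrt(double), so ctypes converts types correctly
libm.sqrt.argtypes = [ctypes.c_double]
libm.sqrt.restype = ctypes.c_double

print(libm.sqrt(2.0))  # 1.4142135623730951
```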

Future predictions reveal gradual evolution, not revolution

While some industry voices predict dramatic shifts in language dominance, the reality suggests a more nuanced evolution. Simon Ritter, deputy CTO at Azul Systems (a company specializing in Java runtime optimization), claims that “2025 is the last year of Python dominance in AI,” predicting Java will overtake Python within 18 months. This prediction appears highly unlikely given Python’s entrenched ecosystem advantages - its network effects through libraries like NumPy, Pandas, PyTorch, and TensorFlow, combined with millions of trained developers and vast educational resources, create barriers to displacement that typically take decades, not months, to overcome. (Coverage).

More realistic market dynamics point to Python maintaining dominance while other languages carve out specialized niches. Python’s ecosystem moat continues to deepen - every major AI breakthrough from transformers to diffusion models launches first in Python, reinforcing its position. The talent market does show interesting patterns, with Rust developers commanding salary premiums ($150,000-$210,000 versus Python’s $130,000-$180,000), but this reflects scarcity rather than mainstream adoption. WebAssembly emerges as an important complementary technology, with Matt Butcher of Fermyon predicting “edge computing is the keystone of WASM’s success” in 2025, enabling Python models to run efficiently at network edges through compilation rather than replacement.

Other languages enter the competition with specialized advantages. Julia gains traction for high-performance scientific computing with Python-like syntax but C-like speed. Domain-specific languages like MoonBit and Wing target edge computing with WebAssembly compilation, emphasizing ultra-fast startup for resource-constrained environments. Government backing for memory-safe languages, such as the White House’s push for adopting languages like Rust in critical infrastructure, accelerates this diversification.

The practical reality is that Python’s position remains secure for AI orchestration and development, while performance pressures create opportunities for complementary languages rather than replacements. McKinsey’s analysis reveals that unlike traditional IT systems where annual run costs are 10-20% of build costs, AI solutions can have recurring costs exceeding initial investment, making language efficiency important but not decisive enough to overcome ecosystem advantages. Organizations are solving this through selective optimization - keeping Python for AI logic while implementing performance-critical paths in Rust or Go, a pattern that preserves development velocity while addressing scalability concerns.

Organizations face complex trade-offs without simple solutions

The selection of programming languages for Agentic AI involves navigating multiple dimensions of trade-offs, each with significant implications for organizational success.

- Performance versus development speed represents a nuanced trade-off that varies by use case. Python enables rapid prototyping with 3-5x faster initial development, crucial for experimentation. The oft-cited performance gaps (like “Python handles 12,000 RPS while Rust manages 183,000”) are misleading without context - most AI applications are limited by model inference time, database queries, or network latency rather than language overhead. Smart architectural choices like async programming, caching layers, and horizontal scaling often provide better returns than language switching. Organizations increasingly adopt targeted optimization, keeping Python for orchestration while implementing only genuine bottlenecks in systems languages - accepting some architectural complexity for measurable performance gains where they actually matter.

- Ecosystem maturity versus innovation potential creates strategic dilemmas. Established languages offer proven reliability - Python’s 300,000+ packages and extensive documentation reduce development risk. Emerging languages promise competitive advantages - Rust’s memory safety eliminates entire categories of production failures while reducing deployment footprints by 90%. The choice depends on risk tolerance and competitive positioning, with startups often embracing newer technologies while enterprises prioritize stability.

- Talent availability versus technical advantages forces practical compromises. Python’s massive developer community ensures easy hiring and knowledge transfer. Rust’s superior technical characteristics come with a limited talent pool and 3-6 month learning curves for experienced developers. The average Rust developer salary premium of $20,000-30,000 over Python reflects this scarcity. Organizations must balance immediate staffing needs against long-term technical advantages.

- Vendor lock-in versus portability gains importance as AI platforms proliferate. Cloud-native languages like Go provide natural portability across providers. Proprietary frameworks risk dependency on specific vendors - Salesforce’s Apex locks organizations into their ecosystem. Open standards like Model Context Protocol and WebAssembly compilation offer escape routes, but require additional development effort. The trade-off between convenience and flexibility becomes critical as Agentic AI systems become business-critical infrastructure.
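Model Context Protocol messages are JSON-RPC 2.0, which is part of why it can serve as a portability layer: any language that can emit JSON can take part. The sketch below builds a `tools/call` request; the tool name `lookup_order` and its arguments are hypothetical, and the transport (stdio or HTTP) and initialization handshake are omitted.

```python
import json


def make_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request in the shape MCP uses for `tools/call`."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments
request = make_tool_call(1, "lookup_order", {"order_id": "A-1001"})
print(request)
```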

The path forward requires strategic multi-language architectures

The evidence strongly suggests that successful enterprise agentic AI deployment requires moving beyond single-language strategies to carefully orchestrated multi-language architectures. Leading organizations are already implementing this approach, and clear patterns are emerging.

The recommended architecture allocates languages based on their strengths: Python for orchestration and AI logic (50-60% of codebase), providing rapid development and ecosystem access; Rust or Go for performance-critical components (20-30%), handling high-throughput processing and resource-constrained deployments; JavaScript/TypeScript for user interfaces and web integration (15-20%), enabling responsive user experiences; and Java or C# for enterprise system integration (5-10%), maintaining compatibility with existing infrastructure.

Implementation should follow a phased approach. Phase 1 focuses on rapid prototyping in Python, leveraging existing frameworks and tools to validate concepts quickly. Phase 2 identifies performance bottlenecks through comprehensive benchmarking and profiling. Phase 3 selectively replaces critical components with optimized implementations in systems languages. Phase 4 establishes governance frameworks for multi-language maintenance and evolution.
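Phase 2 does not require special tooling to get started: Python's built-in `cProfile` and `pstats` modules show where time is spent. In this sketch, the pipeline and its deliberately slow per-character tokenizer are hypothetical; the point is that the profile report, sorted by cumulative time, surfaces the hotspot that would be a candidate for Phase 3 replacement.

```python
import cProfile
import io
import pstats


def slow_tokenize(text: str) -> list:
    # Deliberately inefficient: lowercases one character at a time
    tokens = []
    for word in text.split():
        buf = ""
        for ch in word:
            buf += ch.lower()
        tokens.append(buf)
    return tokens


def count_unique(tokens: list) -> int:
    return len(set(tokens))


def pipeline(text: str) -> int:
    return count_unique(slow_tokenize(text))


profiler = cProfile.Profile()
profiler.enable()
pipeline("Agents Call Tools And Tools Return Results " * 2_000)
profiler.disable()

# Sort by cumulative time and print the top entries; slow_tokenize should
# account for most of the time, far more than count_unique
report = io.StringIO()
stats = pstats.Stats(profiler, stream=report).sort_stats("cumulative")
stats.print_stats(5)
print(report.getvalue())
```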

Critical success factors include investing in robust integration layers between language boundaries, establishing clear ownership and expertise requirements for each language domain, implementing comprehensive testing across language interfaces, and maintaining flexibility to adopt emerging languages as the ecosystem evolves. Organizations should create cross-functional “transformation squads” combining domain expertise with polyglot programming capabilities.
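One way to test across language interfaces is a contract test against a shared "golden" payload that both sides must produce and accept. The sketch below shows only the Python side, assuming a hypothetical `ScoreRequest` message sent to a Rust or Go scoring service as JSON; the other team would run an equivalent check against the same golden string.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ScoreRequest:
    """Hypothetical message a Python orchestrator sends to a scoring service."""

    request_id: str
    features: list
    model_version: str = "v1"


def encode(req: ScoreRequest) -> str:
    # sort_keys gives a stable field order, which keeps golden comparisons simple
    return json.dumps(asdict(req), sort_keys=True)


def decode(payload: str) -> ScoreRequest:
    data = json.loads(payload)
    missing = {"request_id", "features"} - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return ScoreRequest(**data)


# Golden payload both teams agree on (for example, committed to a shared repo)
GOLDEN = '{"features": [0.5, 1.5], "model_version": "v1", "request_id": "r-42"}'

req = ScoreRequest("r-42", [0.5, 1.5])
assert encode(req) == GOLDEN  # the Python side emits exactly the agreed format
assert decode(GOLDEN) == req  # and accepts what the other side emits
print("contract ok")
```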

The window for strategic positioning narrows rapidly. Gartner predicts 33% of enterprise software will include agentic AI by 2028, up from less than 1% in 2024. Organizations that optimize their language strategies now will capture competitive advantages, while those clinging to single-language approaches risk being constrained by technical limitations as autonomous systems become essential infrastructure. The winners in the agentic AI era will be those who embrace linguistic diversity as a source of strength, building architectures that leverage each language’s unique capabilities while managing complexity through careful design and governance.

Next Steps: Start Your AI Journey

AI is a rapidly growing field that requires specialized knowledge and skills, and programming languages play a crucial role in building AI systems and applications. When choosing a programming language for an AI project, it's important to consider factors such as the type of application, the platform it will run on, maintenance issues, performance and scalability, and security. By understanding the different programming languages used in AI and the factors to consider when choosing one, you can make informed decisions and develop more effective AI systems.
