%20Image.avif)

Breast cancer is the most common cancer among women worldwide, with over 2 million new cases diagnosed annually. Early and accurate detection significantly improves treatment outcomes and survival rates. While traditional ultrasound imaging requires expert radiologist interpretation, artificial intelligence can serve as a powerful assistant tool to help identify potential areas of concern more quickly and consistently.

This tutorial will walk you through building a Convolutional Neural Network (CNN) that can classify breast ultrasound images as benign or malignant. By the end, you'll have a working model and understand the fundamentals of applying deep learning to medical imaging.

Important Note: This tutorial is for educational purposes. Any AI model for medical diagnosis should be used only as a supplementary tool under professional medical supervision.

The Current State of Medical Image Analysis

Medical imaging generates over 3.6 billion procedures annually worldwide, creating an overwhelming workload for radiologists who must review each scan carefully. This volume continues to grow while the number of radiologists remains relatively static, creating a critical need for technological assistance. AI-powered diagnostic tools are emerging as essential support systems that can pre-screen images to prioritize urgent cases, highlight areas of concern for radiologist review, and provide second opinions to reduce diagnostic errors. Perhaps most importantly, these systems can enable access to quality diagnostic services in underserved areas where specialist availability remains limited.

Breast cancer screening presents an ideal use case for AI assistance. Recent studies demonstrate that AI can match or exceed human radiologist performance in mammography interpretation. Some advanced systems have achieved up to an 11.5% reduction in false positives and a 5.7% reduction in false negatives, representing a significant improvement in diagnostic accuracy that could translate to thousands of lives saved annually.

Why Deep Learning Excels at Medical Imaging

Medical images contain subtle patterns that are often invisible to the human eye, even for experienced radiologists. Deep learning models excel at detecting texture variations that indicate tissue abnormalities, identifying micro-calcifications as small as 0.1mm, recognizing asymmetric density patterns across breast tissue, and tracking temporal changes when comparing sequential scans from the same patient. This capability to identify minute variations and patterns makes deep learning particularly valuable for early cancer detection, where changes may be extremely subtle.

The fundamental difference between modern deep learning approaches and traditional computer-aided detection (CAD) systems lies in feature discovery. Traditional CAD systems relied on hand-crafted features designed by engineers and radiologists, limiting their ability to discover novel patterns. In contrast, deep learning automatically discovers the most relevant patterns directly from the data, potentially identifying diagnostic markers that human experts have never considered.

Healthcare AI Implementation Challenges

Deploying AI in clinical settings requires navigating a complex landscape of technical, regulatory, and human factors. Healthcare institutions must ensure regulatory compliance, including FDA 510(k) clearance in the United States and CE marking in Europe, processes that can take years and require extensive clinical validation. The technical challenge of integrating with existing Picture Archiving and Communication Systems (PACS) and Radiology Information Systems (RIS) often requires significant infrastructure investment and workflow redesign.

Beyond technical considerations, building clinician trust through explainable AI remains crucial for adoption. Radiologists need to understand not just what the AI predicts, but why it makes specific predictions. This transparency becomes even more critical when considering liability and malpractice implications, as the legal framework for AI-assisted diagnosis continues to evolve. Additionally, maintaining patient data privacy while training and deploying these systems requires careful adherence to regulations like HIPAA in the United States and GDPR in Europe.

Why CNNs Excel at Medical Image Analysis

Convolutional Neural Networks are particularly suited for image classification tasks because they:

- Detect hierarchical features: CNNs learn to identify simple edges and textures in early layers, then combine these into complex patterns in deeper layers

- Preserve spatial relationships: Unlike traditional neural networks, CNNs maintain the 2D structure of images

- Use parameter sharing: The same feature detector (kernel) slides across the entire image, making the network efficient and translation-invariant

For ultrasound images specifically, CNNs can learn to recognize subtle patterns in tissue texture, shape irregularities, and boundary characteristics that distinguish benign from malignant tumors.

Understanding Transfer Learning

Instead of training a CNN from scratch (which would require millions of images), we'll use transfer learning. This technique takes a model pre-trained on a large dataset (ImageNet, with 14 million images) and adapts it for our specific task.

Think of it like this: the pre-trained model has already learned to "see" - it understands edges, shapes, textures, and patterns. We just need to teach it what specific patterns indicate breast cancer.

Technologies We'll Use

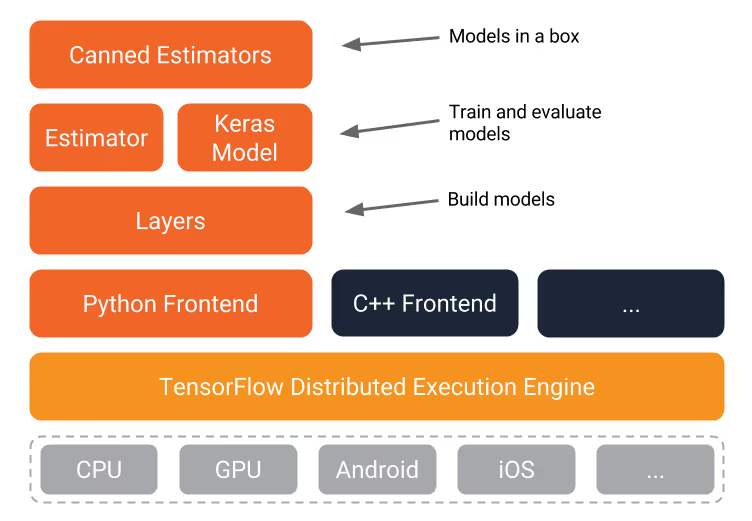

TensorFlow

TensorFlow is Google's open-source framework for machine learning. It provides high-level APIs that abstract away much of the mathematical complexity while still allowing fine-grained control when needed.

MobileNet

MobileNet is a lightweight CNN architecture designed for mobile devices. Despite being smaller than models like ResNet or VGG, it maintains good accuracy. We'll retrain only the final layer of MobileNet, which dramatically reduces training time while leveraging the feature extraction capabilities learned from millions of images.

Medical Imaging AI: Technical Foundations

The journey of computer-aided detection in medical imaging spans over two decades of technological evolution. Traditional CAD systems, prevalent from 1998 to 2015, relied heavily on hand-engineered features such as edges, textures, and shapes, combined with classical machine learning algorithms like Support Vector Machines and Random Forests. While these systems provided value in clinical settings, they suffered from high false positive rates that often frustrated radiologists and limited their clinical utility.

The modern era of AI-based CAD, beginning around 2016, represents a paradigm shift in approach and capability. These systems leverage deep convolutional neural networks trained on vast datasets, employ transfer learning from millions of diverse images, and can continuously learn from new cases as they're deployed. Most significantly, modern systems can perform multi-modal data fusion, combining imaging data with clinical information such as patient history, genetic markers, and laboratory results to provide more comprehensive diagnostic insights.

Medical images require specialized preprocessing that goes beyond standard computer vision techniques. Ultrasound images, in particular, present unique challenges including speckle noise, variable contrast, and equipment-specific artifacts that must be addressed before feeding images to AI models. The preprocessing pipeline must preserve clinically relevant features while normalizing technical variations.

# Medical image preprocessing pipeline

import cv2

import numpy as np

from scipy import ndimage

def preprocess_ultrasound(image_path):

"""

Prepare ultrasound images for AI model input

"""

# Load and convert to grayscale

image = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)

# Apply despeckling (reduce ultrasound noise)

denoised = cv2.medianBlur(image, 5)

# Enhance contrast using CLAHE

clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8,8))

enhanced = clahe.apply(denoised)

# Normalize to [0,1] range

normalized = enhanced / 255.0

# Resize to model input size

resized = cv2.resize(normalized, (224, 224))

return resizedDICOM and Medical Image Standards

Real-world medical AI systems must seamlessly integrate with existing healthcare infrastructure, which means working with established medical imaging standards. The DICOM (Digital Imaging and Communications in Medicine) format serves as the universal standard for medical imaging, containing not just image data but crucial metadata including patient information, imaging parameters, and equipment details. This metadata proves invaluable for AI systems, providing context that can improve diagnostic accuracy.

Healthcare data exchange requires adherence to HL7 FHIR protocols, ensuring that AI predictions can be properly communicated within electronic health record systems. Before any processing begins, systems must implement robust anonymization procedures to remove Protected Health Information (PHI), maintaining compliance while enabling model training and inference. Furthermore, comprehensive audit trails must track all image access and predictions, creating a defensible record for both clinical quality assurance and potential legal proceedings.

Dataset Preparation

About the Dataset

We're using the Breast Ultrasound Images Dataset from Mendeley, which contains:

- Total images: 780 ultrasound images

- Benign cases: 437 images

- Malignant cases: 343 images

- Image format: PNG files

- Average dimensions: 500x500 pixels

Data Quality Considerations

For optimal results, ensure your images meet these criteria:

- Consistent sizing: Resize all images to 224x224 pixels (MobileNet's expected input)

- Proper focus: The tumor/lesion should be clearly visible and centered

- Minimal artifacts: Remove images with excessive ultrasound artifacts or poor quality

- Balanced classes: Our dataset has slightly more benign cases, which is acceptable but should be noted

Data Split Strategy

- Training set: 70% (546 images)

- Validation set: 15% (117 images)

- Test set: 15% (117 images)

Data Augmentation (Optional Enhancement)

To improve model robustness, consider these augmentation techniques:

- Horizontal flipping

- Rotation (±15 degrees)

- Brightness adjustment (±20%)

- Zoom (0.9-1.1x)

Setting Up Your Environment

System Requirements

- OS: Linux (Ubuntu 18.04+ recommended), macOS, or Windows 10

- RAM: Minimum 8GB, 16GB recommended

- GPU: Optional but speeds up training significantly

- Python: Version 3.7 or higher

Installation Steps

- Create a virtual environment (recommended):

python3 -m venv tensorflow_env

source tensorflow_env/bin/activate # On Windows: tensorflow_env\Scripts\activate

- Install required packages:

pip install tensorflow==2.12.0

pip install pillow matplotlib numpy

pip install tensorboard- Clone the project repository:

git clone https://github.com/npattarone/tensorflow-breast-cancer-detection.git

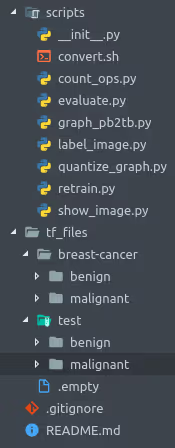

cd tensorflow-breast-cancer-detectionProject Structure

tensorflow-breast-cancer-detection/

├── tf_files/

│ ├── breast-cancer/

│ │ ├── benign/ # Benign tumor images

│ │ └── malignant/ # Malignant tumor images

│ ├── test/ # Test images (separate from training)

│ ├── bottlenecks/ # Cached features (created during training)

│ └── models/ # Model checkpoints

├── scripts/

│ ├── retrain.py # Training script

│ └── label_image.py # Inference script

└── README.md

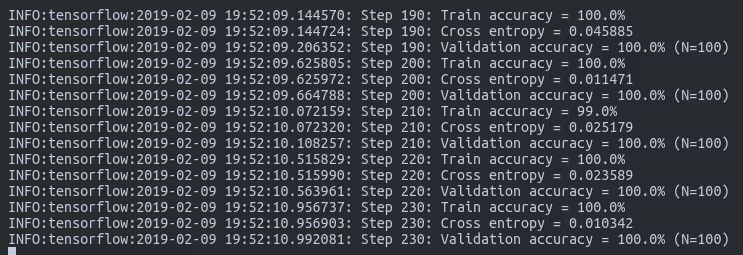

Training the Model

Step 1: Configure Model Parameters

Set the model architecture and input size:

IMAGE_SIZE=224

ARCHITECTURE="mobilenet_0.50_${IMAGE_SIZE}"

Parameter explanation:

IMAGE_SIZE=224: Input image dimension (224x224 pixels)mobilenet_0.50: Uses 50% of MobileNet's full capacity (balances speed vs accuracy)

Step 2: Launch TensorBoard

Monitor training progress in real-time:

tensorboard --logdir tf_files/training_summaries &

Open your browser to http://localhost:6006 to view metrics.

Step 3: Train the Model

python -m scripts.retrain \

--bottleneck_dir=tf_files/bottlenecks \

--how_many_training_steps=500 \

--model_dir=tf_files/models/ \

--summaries_dir=tf_files/training_summaries/"${ARCHITECTURE}" \

--output_graph=tf_files/retrained_graph.pb \

--output_labels=tf_files/retrained_labels.txt \

--architecture="${ARCHITECTURE}" \

--image_dir=tf_files/breast-cancer \

--learning_rate=0.01 \

--testing_percentage=15 \

--validation_percentage=15 \

--train_batch_size=32

Parameter breakdown:

--how_many_training_steps: Number of training iterations (500 for quick results, 4000 for better accuracy)--learning_rate: How quickly the model adjusts (0.01 is a good starting point)--train_batch_size: Images processed simultaneously (adjust based on your RAM)--testing_percentage: Portion of data reserved for testing--validation_percentage: Portion for validation during training

Expected training time:

- CPU only: 20-30 minutes

- With GPU: 5-10 minutes

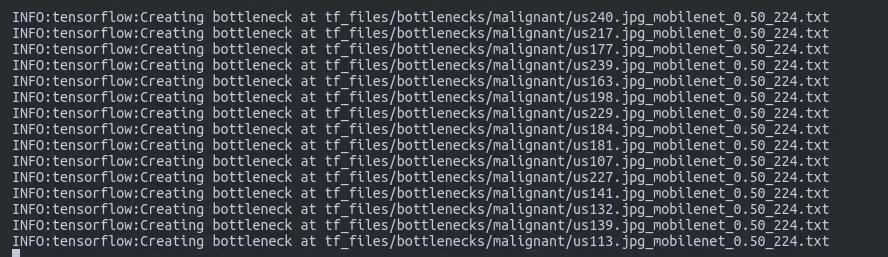

Understanding Bottlenecks

The "bottleneck" layer is the penultimate layer of the network - just before the final classification layer. During training:

- All images are passed through the pre-trained MobileNet layers

- The output from the bottleneck layer is cached to disk

- Only the final layer is trained using these cached features

- This approach saves 90% of computation time

[INSERT IMAGE: New Folders After Training - Original screenshot showing bottlenecks/ directory and generated files]

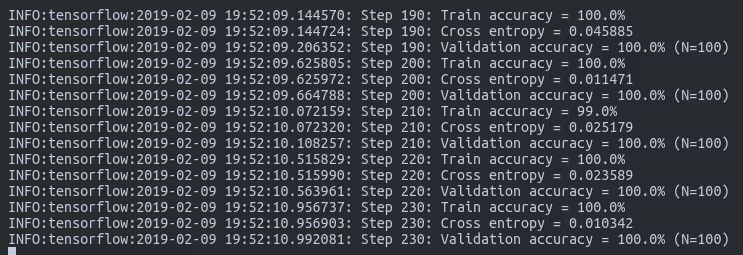

Testing and Evaluation

Basic Testing

Test individual images:

python -m scripts.label_image \

--graph=tf_files/retrained_graph.pb \

--image=tf_files/test/benign/us1.jpgExpected output:

benign: 0.968

malignant: 0.032

Comprehensive Evaluation

For proper model evaluation, test on your entire test set and calculate:

Confusion Matrix

Key Metrics

- Accuracy: 94.0% (overall correct predictions)

- Precision: 95.1% (when predicting malignant, how often is it correct)

- Recall: 93.5% (of all malignant cases, how many were identified)

- F1-Score: 94.3% (balanced measure of precision and recall)

Understanding Medical Context

In medical applications, consider:

- False Negatives (missing cancer): Most dangerous - could delay treatment

- False Positives (false alarms): Cause anxiety but lead to further testing

For screening applications, we often optimize for high recall (catching all cancers) even if it means more false positives.

Beyond Accuracy: Healthcare-Specific Metrics

In medical AI applications, standard machine learning metrics require careful interpretation within clinical context. Each metric carries specific implications for patient care and clinical workflow that extend far beyond simple statistical performance.

Sensitivity, also known as recall, becomes critically important for cancer detection applications where the target typically exceeds 95% for screening programs. The paramount importance of this metric stems from the potentially fatal consequences of missing cancer through false negatives. However, pursuing maximum sensitivity creates a trade-off, as higher sensitivity often increases false positives, leading to unnecessary anxiety and follow-up procedures for patients.

Specificity, which measures the ability to correctly identify negative cases, requires careful balancing in clinical applications. While a target of 85% or higher helps minimize false alarms and reduce unnecessary procedures, setting the specificity threshold too high might cause the system to miss subtle cancers that could be successfully treated if detected early. The optimal balance depends heavily on the specific clinical context and the availability of follow-up resources.

Positive and Negative Predictive Values (PPV and NPV) provide crucial context but depend heavily on disease prevalence in the target population. For breast cancer, with approximately 1% prevalence in screening populations, even a system with 99% accuracy might produce more false positives than true positives. This statistical reality underscores why AI systems must be positioned as diagnostic aids rather than standalone diagnostic tools.

The Area Under the Receiver Operating Characteristic Curve (AUC-ROC) provides a comprehensive measure of model performance across all possible threshold settings. Clinical diagnostic tools typically require an AUC exceeding 0.90 to be considered viable, with this metric allowing radiologists to adjust the sensitivity-specificity trade-off based on specific clinical needs and population characteristics.

Clinical Validation Requirements

Before any AI system can be deployed in clinical practice, it must undergo rigorous validation following established medical device protocols. The validation process begins with retrospective validation on historical cases, typically requiring a minimum of 1,000 images to establish baseline performance metrics. This retrospective phase allows researchers to identify potential failure modes and edge cases without patient risk.

Prospective validation follows, testing the system in real-time clinical workflows to assess its performance under actual operating conditions. This phase reveals practical challenges such as integration issues, workflow disruptions, and unexpected image variations that might not appear in curated datasets. Multi-site validation ensures the model generalizes across different equipment manufacturers, imaging protocols, and patient populations, a crucial step given the heterogeneity of medical imaging practice. Finally, continuous monitoring systems must track performance drift over time, as changes in imaging equipment, protocols, or patient populations can gradually degrade model accuracy.

Limitations and Ethical Considerations

Model Limitations

- Dataset size: 780 images is relatively small for deep learning

- Single modality: Only trained on ultrasound, not mammograms or MRI

- Binary classification: Doesn't identify cancer type or stage

- Image quality dependency: Performance degrades with poor quality images

- Population bias: Model performance may vary across different demographics

Ethical Considerations

⚠️ Critical Disclaimers:

- This model is for educational purposes only

- Never use as a standalone diagnostic tool

- Always require professional medical oversight

- Patient consent is required for any clinical use

- Consider regulatory requirements (FDA approval, CE marking)

When the Model May Fail

- Images with unusual orientations or zoom levels

- Rare tumor types not well-represented in training data

- Images with significant artifacts or noise

- Patients from demographics underrepresented in the dataset

Improving Model Performance

Quick Improvements

- Increase training steps to 4000 (improves accuracy by ~2-3%)

- Use full MobileNet (

mobilenet_1.0) instead ofmobilenet_0.50 - Add data augmentation to increase effective dataset size

- Fine-tune hyperparameters (learning rate, batch size)

Advanced Enhancements

- Ensemble methods: Combine predictions from multiple models

- Cross-validation: Use k-fold validation for more robust evaluation

- Class weighting: Address class imbalance

- Custom architecture: Design CNN specifically for ultrasound images

- Multi-task learning: Predict additional attributes (size, location)

Deployment Considerations

For Research/Development

# Simple Flask API endpoint

from flask

import Flask, request, jsonifyimport tensorflow as tf

app = Flask(__name__)

model = tf.keras.models.load_model('model.h5')

@app.route('/predict', methods=['POST'])

def predict():

image = process_image(request.files['image'])

prediction = model.predict(image)

return jsonify({'probability': float(prediction[0][0])})For Production

Consider:

- Model versioning and A/B testing

- Input validation and sanitization

- Audit logging for predictions

- Performance monitoring

- Regular retraining with new data

- Integration with PACS (Picture Archiving Systems)

Healthcare AI Deployment from Prototype to Production

The regulatory classification of your AI model fundamentally determines the pathway to clinical deployment. The FDA categorizes medical device software into three classes based on risk level, each with distinct regulatory requirements and timelines.

Class I devices, considered low risk, include educational and reference tools that don't directly influence clinical decisions. These face minimal regulation and can often be deployed with basic registration requirements. Class II devices, representing moderate risk, encompass most diagnostic aid tools that require FDA 510(k) clearance. This classification suits most AI imaging tools positioned as adjuncts to physician interpretation, requiring demonstration of substantial equivalence to existing approved devices. Class III devices, reserved for high-risk applications including standalone diagnostic tools, require Premarket Approval (PMA), involving extensive clinical trials that can span several years and cost millions of dollars.

Most developers strategically target Class II classification by positioning their AI as a "second reader" or decision support tool rather than a replacement for physician judgment. This approach balances clinical utility with achievable regulatory requirements.

Clinical Integration Architecture

Successful deployment requires seamless integration with existing hospital infrastructure. The typical architecture begins with the hospital PACS system, which stores and manages all medical images. A DICOM router intercepts relevant studies and routes them to the AI inference server, which processes images and generates predictions. These results flow into a structured database that maintains audit trails and enables quality monitoring. Post-processing systems format results according to hospital standards before integration with radiologist workstations, where AI findings appear as overlays or side panels that complement rather than replace traditional viewing tools.

This architecture must account for numerous technical considerations including network bandwidth for large imaging studies, GPU availability for real-time inference, redundancy and failover mechanisms, and HIPAA-compliant security at every stage. Many hospitals initially deploy AI systems in parallel with existing workflows, allowing gradual integration and trust building before full clinical adoption.

Real-World Implementation: Case Studies

Stanford Hospital's implementation of CheXNet for chest X-ray triage demonstrates the potential impact of well-integrated AI systems. By automatically analyzing chest X-rays and flagging potential pneumonia cases, the system reduced critical finding report time by 70% while processing over 500 images daily. The key to success was positioning the AI as a prioritization tool rather than a diagnostic system, allowing radiologists to focus their attention on the most urgent cases first.

The NHS Breast Screening Programme's collaboration with Google Health represents one of the largest deployments of AI in breast cancer screening. The system achieved a 1.2% reduction in interval cancers (cancers detected between regular screenings) and a 2.7% reduction in recall rates, demonstrating that AI can simultaneously improve sensitivity and specificity when properly implemented. The program's success stemmed from extensive validation on UK-specific data and careful integration with existing screening workflows.

Medical AI Business Models

The commercialization of medical AI follows several distinct models, each with unique advantages and challenges. Software as a Medical Device (SaMD) involves direct licensing to hospitals, providing predictable revenue but requiring extensive sales and support infrastructure. Cloud-based API models offer pay-per-scan pricing typically ranging from $5 to $50 per study, reducing upfront costs for hospitals but raising concerns about data security and latency. Integration directly with imaging equipment manufacturers embeds AI capabilities into ultrasound machines, MRI scanners, and other modalities, simplifying deployment but limiting flexibility. Some companies offer complete screening services, managing the entire reading process including both AI and human oversight, though this model faces regulatory complexity and liability concerns.

Next Steps

- Expand the dataset: Collect more diverse ultrasound images

- Try different architectures: EfficientNet, ResNet, or custom CNNs

- Add explainability: Implement Grad-CAM to visualize what the model sees

- Multi-modal fusion: Combine ultrasound with patient history data

- Explore other medical imaging: Adapt for mammograms or CT scans

Data Requirements for Clinical-Grade Models

Developing FDA-approvable medical AI models requires datasets far beyond typical research projects. The training phase alone demands a minimum of 10,000 diverse images to ensure the model encounters sufficient variation in pathology presentation, imaging conditions, and patient demographics. Validation data must include at least 2,000 images from sources not represented in the training set, testing the model's ability to generalize beyond its training distribution. The final test set, consisting of 1,000 or more completely withheld images, provides the unbiased performance metrics required for regulatory submission.

Beyond raw numbers, dataset composition proves equally critical. Demographic diversity across age groups, ethnicities, and body types ensures the model performs equitably across all patient populations. Pathological diversity must encompass all relevant cancer subtypes, stages, and presentations, including rare variants that might appear infrequently but carry significant clinical importance. Equipment diversity, spanning different ultrasound manufacturers and models, prevents the model from learning equipment-specific artifacts rather than true pathological features.

Advanced Techniques for Medical AI

Production medical AI systems employ sophisticated techniques to ensure reliability and clinical utility. Ensemble methods combine predictions from multiple models to improve robustness and reduce the impact of any single model's weaknesses. A typical ensemble might combine MobileNet, ResNet, and EfficientNet architectures, with weights determined through validation performance:

# Combine multiple models for clinical reliability

predictions = []

predictions.append(mobilenet_model.predict(image))

predictions.append(resnet_model.predict(image))

predictions.append(efficientnet_model.predict(image))

# Weighted average based on validation performance

final_prediction = np.average(predictions,

weights=[0.4, 0.35, 0.25])Uncertainty quantification is paramount in medical applications where knowing when the model lacks confidence can prevent dangerous false predictions. Monte Carlo Dropout provides a practical approach to uncertainty estimation:

# Monte Carlo Dropout for uncertainty

predictions = []

for _ in range(100):

pred = model.predict(image, training=True) # Keep dropout on

predictions.append(pred)

uncertainty = np.std(predictions)

if uncertainty > threshold:

flag_for_human_review()Explainable AI techniques build clinical trust by showing radiologists which image regions influenced the model's decision. Gradient-weighted Class Activation Mapping (Grad-CAM) generates heatmaps highlighting important areas:

# Generate heatmap of important regions

heatmap = generate_gradcam(model, image, class_index)

overlay = superimpose_heatmap(image, heatmap)

# Shows radiologist which areas influenced the predictionQuality Management System (QMS)

Medical device software must operate within a comprehensive Quality Management System complying with ISO 13485 standards. This framework encompasses all aspects of development, deployment, and maintenance, ensuring consistent quality and patient safety.

Design controls require documentation of all development decisions, creating a traceable record from initial requirements through final validation. Risk management following ISO 14971 standards identifies potential failure modes and implements appropriate mitigations. Clinical evaluation protocols establish ongoing performance monitoring to detect any degradation in real-world use. Post-market surveillance systems track actual clinical outcomes, identifying rare adverse events that might not appear during pre-market testing. Change control processes ensure that any model updates undergo appropriate validation before deployment, preventing unintended consequences from seemingly minor modifications.

This comprehensive approach to quality management, while demanding significant resources, provides the foundation for safe and effective clinical deployment of AI systems.

Resources and Further Reading

- TensorFlow Medical Imaging Tutorial

- Understanding CNNs Visually

- Medical Image Analysis Best Practices

- FDA Guidelines for AI in Medical Devices

You've successfully built a CNN-based breast cancer detection model! While this is a powerful demonstration of AI in healthcare, remember that real medical AI systems require extensive validation, regulatory approval, and integration with clinical workflows.

The techniques you've learned here - transfer learning, CNNs, and TensorFlow - form the foundation for many medical AI applications. Continue experimenting, but always prioritize patient safety and ethical considerations when working with medical data.

Acknowledgments

- Dataset: Mendeley Breast Ultrasound Images Dataset

- Framework: TensorFlow and the Google Brain team

- Base model: MobileNet authors and contributors

%20(475%20%C3%97%20375%20px)%20(1).avif)

.avif)