The landscape of large language models in 2025 shows a major shift toward efficiency-driven architectures. Mixture of Experts (MoE) designs are reshaping how models are built and deployed. This analysis highlights differences in size, architecture, and training data across ten leading LLMs, revealing how major developers approach AI design.

Model Size and Parameter Distribution

Parameter Hierarchy

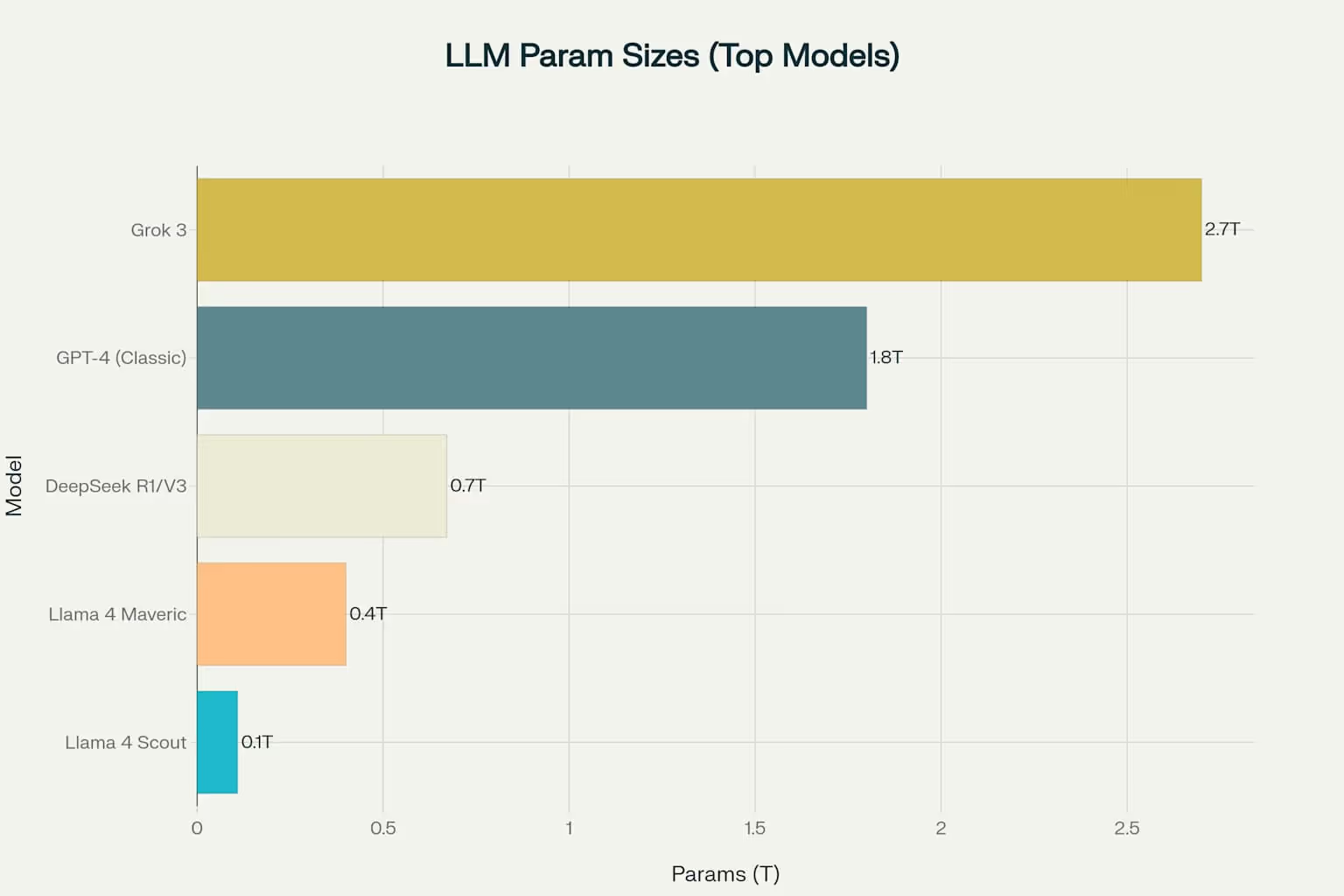

Grok 3 leads with 2.7 trillion parameters, followed by GPT-4 at 1.8 trillion. But modern MoE models only activate part of their parameters during inference. DeepSeek R1/V3 uses 671B total but only 37B active, showing how efficiency is achieved. Llama 4 variants follow a similar structure with 17B active parameters despite large total sizes.

Undisclosed Specifications

Some top models—including Gemini 2.5 Pro, Claude 4, and Mistral Medium 3—do not disclose parameter counts, reflecting competitive pressures in the AI space.

Architectural Innovation

MoE Dominance

Seven out of ten models use MoE designs to scale efficiently by activating only relevant parameter subsets. Grok 3 and the Llama 4 models implement 128-expert configurations, allowing specialization without overloading compute resources.

Reasoning Architectures

Models like Gemini 2.5 Pro and Claude 4 are built for reasoning, capable of switching between fast responses and deeper deliberation. Claude 4 introduces tool use during reasoning, allowing integration with external sources like web search.

Dense Architectures

Mistral Medium 3 is the only top model still using a dense transformer design, achieving competitive results with lower costs—just $0.40 per million tokens.

Training Data and Evolution

Scale and Volume

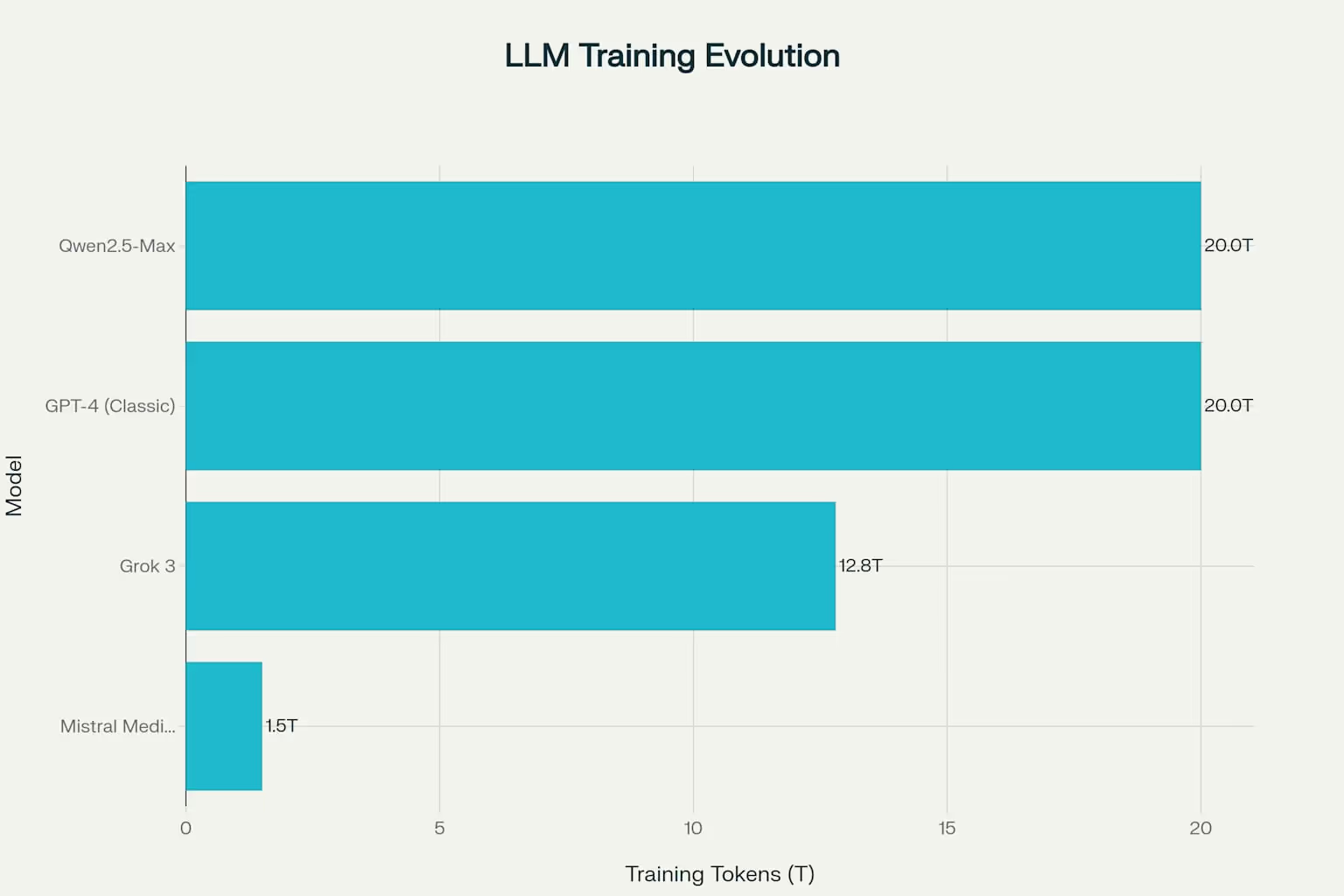

Models vary significantly in training volume. GPT-4 and Qwen2.5-Max trained on ~20T tokens; Grok 3 used 12.8T, while Mistral Medium 3 trained on just 1.5T—showing how data quality can outweigh volume.

Synthetic Data Use

Grok 3 trained on a 50/50 mix of synthetic and real data to increase diversity and robustness. This shift to AI-generated data may impact bias, scalability, and generalization.

Multimodal Training

Llama 4 is trained natively on text, image, and video through early fusion. Qwen2.5-Max focuses on scientific literature, targeting specialized domains with broad capability.

Context Windows and Memory

Scaling Context

Llama 4 Scout leads with a 10M token context window. Gemini 2.5 Pro and Llama 4 Maverick support 1M tokens. Others range between 128K and 200K. These larger windows support long documents, full codebases, and complex inputs.

Dynamic Memory

Grok 3 uses dynamic memory allocation based on task complexity, optimizing compute use and enabling smarter scaling.

Training Methodologies

Advanced Techniques

Training now includes curriculum learning—gradually increasing complexity during training. Grok 3 used a 9-phase curriculum. DeepSeek R1/V3 leveraged FP8 mixed-precision to reduce memory needs while keeping training stable.

Reinforcement Learning

Most top models use RLHF (Reinforcement Learning from Human Feedback). Mistral Medium 3 applies this to enterprise tasks through custom reward models.

Performance and Deployment

Efficiency

MoE models like DeepSeek R1/V3 achieve up to 8× better compute efficiency. They require significantly fewer FLOPs per token than dense models of similar capability.

Deployment Flexibility

Some models (e.g., Mistral Medium 3) can run on just 4 GPUs, making advanced AI more accessible even to teams with limited infrastructure.

Future Directions

Hybrid Models

Claude 4 represents a hybrid trend, combining dense and sparse elements that adapt based on task type. This may become standard for general-purpose, adaptable models.

Scaling Law Adjustments

New research suggests MoE models require fewer training tokens per parameter than dense models, which could influence future resource allocation strategies.

The top LLMs of 2025 showcase remarkable architectural diversity—from Grok 3’s massive 2.7T MoE design to Mistral’s efficient dense model. MoE dominance, synthetic data training, and extreme context scaling mark a new generation of AI. As innovation accelerates, the balance between scale, efficiency, and specialization will define the next wave of enterprise and research-ready AI.

.avif)