Transforming Data Extraction with AI-Powered Automation

Azumo designed and developed Transforming Data Extraction with AI-Powered Automation for Centegix using Python resulting in measurably improving outcomes such as this, the team achieved 100% of MVP deliverables within expecte.

Centegix

CENTEGIX is a leading provider of rapid incident response safety solutions designed to minimize identification, notification, and response times during emergencies. Their Safety Platform™ integrates dynamic digital mapping, real-time location tracking, user-friendly wearable panic buttons, visitor management, and reunification features to enhance preparedness and response across various sectors.

For organizations managing visitor access, processing identification documents quickly and accurately is critical. Manual data extraction from driver's licenses had become a significant bottleneck in their visitor management workflow, creating delays that could impact emergency response effectiveness.

Results:

90%

100%

28%

The Challenge

CENTEGIX needed to automate data extraction from driver's licenses but faced significant obstacles. They encountered two primary challenges: technical complexity and budget constraints.

Technical Complexity

The team had to account for the wide variation in document structures - every state and region uses different formats, fonts, and layouts, making consistency a moving target.

Complicating matters further were image quality issues. Real-world photos of licenses came with all the usual problems: low resolution, poor lighting, and faint text that often blended into the background - all of which made accurate OCR extraction far more difficult.

Adding pressure was the need for real-time processing. The system wasn’t just about accuracy; it had to be fast enough to keep up with live visitor management workflows, striking a careful balance between speed and reliability.

Another challenge lay in the limited training data. With only 600 synthetic images available, the team had to rely heavily on creative data augmentation to build models capable of recognizing a wide variety of license designs.

Budget Feasibility Challenges

CENTEGIX approached multiple AI vendors but encountered a common problem: unpredictable hourly billing models that made project costs difficult to forecast and control. Many providers couldn't offer fixed-cost solutions within their budget parameters.

Azumo differentiated itself by proposing a fixed-cost Proof of Concept approach. This eliminated financial uncertainty while providing a clear path to evaluate technical feasibility before committing to full development.

Their team consistently brings thoughtfulness, professionalism, and ownership, making them a valued extension of our internal team.

.avif)

The Solution

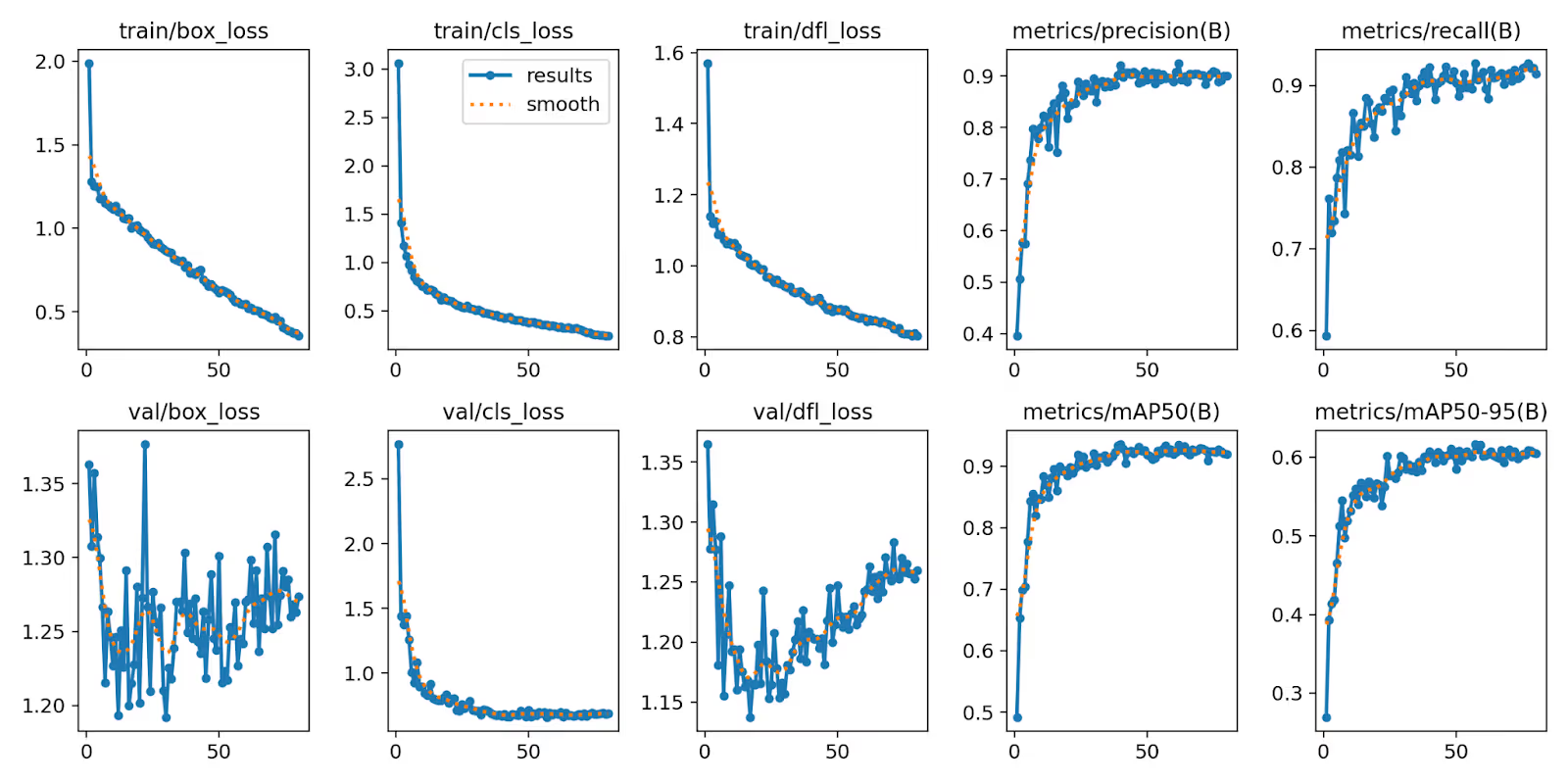

To identify the optimal model for license field detection, multiple YOLO (You Only Look Once) variants were tested, including YOLO8 (nano and small) and YOLO11 (nano and medium). The goal was to find the right balance between detection accuracy and inference speed, critical for real-time document processing.

Training was conducted using a synthetic dataset of 600 driver’s license images sourced from the Roboflow dataset. To simulate real-world variability, the dataset underwent dynamic augmentation, introducing changes in lighting, orientation, and background noise. The data was then divided into three subsets: 70% for training, 20% for validation, and 10% for testing.

Over the course of 80 training epochs, performance was continuously monitored to track improvements and spot potential issues early. Hyperparameters were kept lean to enable faster iteration cycles and focus efforts on observable performance gains across models. This approach helped benchmark each variant under consistent conditions and build confidence in the most effective configurations for deployment.

Performance Visualizations

Two key outputs best represent the model’s performance - one showing classification accuracy across fields, and the other demonstrating how those results translate visually.

This chart highlights how accurately the YOLO11m model classified each license field. Most predictions fall along the diagonal, indicating consistently correct identification across categories like name, address, and ID number. Minor overlap appears between similar fields, such as first and last names - expected due to their structural and positional similarities. Overall, the matrix reflects strong per-class accuracy and low rates of misclassification.

.avif)

In this example, the model accurately places bounding boxes on a driver’s license, identifying key fields with precision. Despite layout variation and synthetic noise, the output demonstrates clear localization of names, dates, and other relevant fields - reinforcing the model’s ability to generalize beyond the training set.

Optical Character Recognition (OCR)

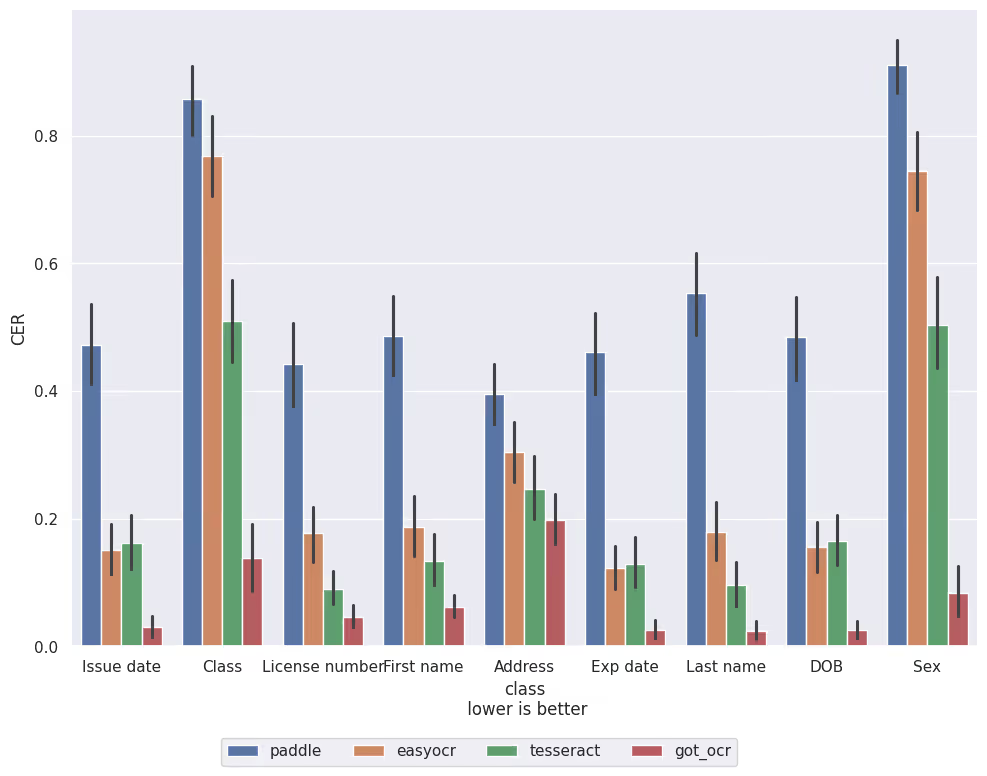

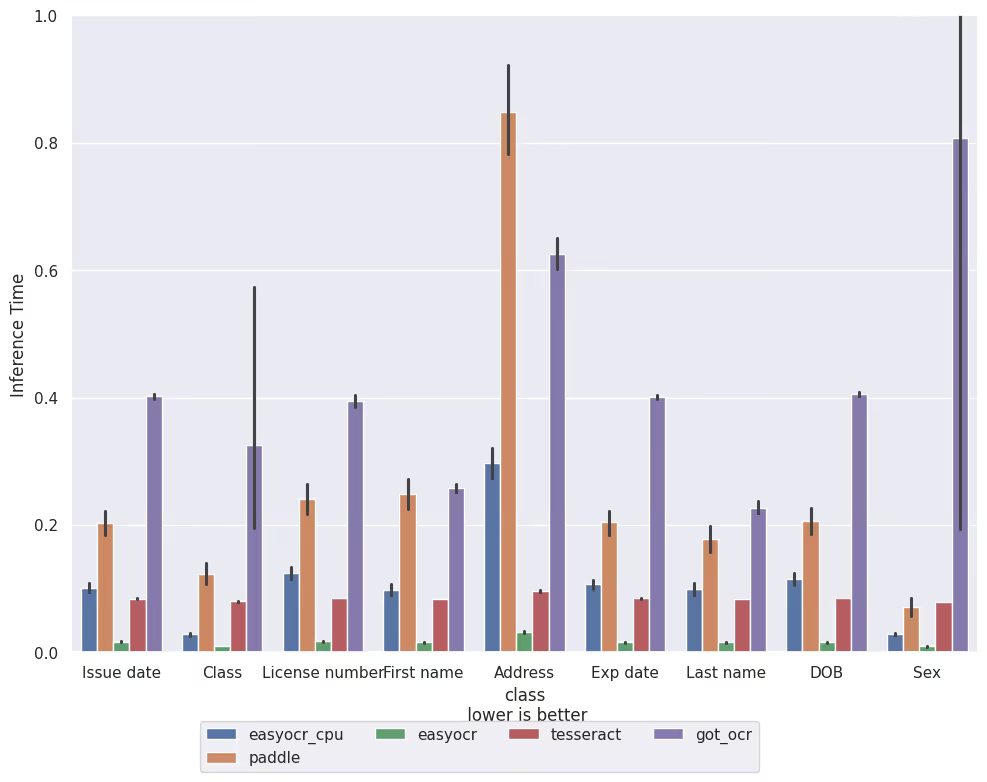

To evaluate text extraction performance, four OCR libraries were tested side by side: Tesseract, EasyOCR, GOT_OCR, and PaddleOCR. Each was assessed based on its accuracy across different license field types and its ability to process data efficiently. The goal was to determine which engine could best handle structured documents with speed and precision.

Alongside raw image testing, preprocessing techniques were introduced - specifically OTSU binarization - to analyze their impact on output quality. In some cases, preprocessing improved accuracy, especially for lower-quality images. However, results also highlighted the need for fine-tuned preprocessing strategies if the solution were to be scaled for production. These insights helped shape recommendations for future implementation and model tuning.

Performance Metrics

To evaluate the effectiveness of each OCR library, multiple dimensions were assessed - including accuracy at the character level and processing speed across document types.

This chart presents the Character Error Rate (CER) for each OCR model across different field types. GOT_OCR consistently delivered the lowest error rates - especially on structured fields like names, ID numbers, and dates - making it the most accurate library tested. In contrast, other engines showed higher error rates in complex or abbreviated fields like class codes.

Levenshtein Ratio Testing was used alongside CER to measure how closely the extracted text matched ground truth. This reinforced GOT_OCR’s lead, with high similarity ratios on most fields. Sequence Ratio Evaluation, which focused on multi-line fields such as addresses, highlighted how formatting complexity affected performance - especially for models not optimized for paragraph-style content.}

Inference time was also critical for real-time use cases. As shown in this chart, EasyOCR (GPU version) outperformed all others in speed, offering the fastest processing times across categories. However, this came with slightly reduced accuracy compared to GOT_OCR.

Together, these results reveal a clear trade-off between speed and precision. GOT_OCR is ideal when accuracy is the priority (e.g., compliance-related fields), while EasyOCR is well-suited for high-throughput, time-sensitive workflows. These insights guided the decision to consider hybrid OCR strategies — using faster engines for initial extraction and slower, more accurate ones for post-validation or critical fields.

Evaluation and Insights

The comprehensive testing process generated detailed performance data that shaped the understanding of each approach’s strengths and limitations. Confusion matrices offered a clear view of detection accuracy across the different YOLO variants, helping identify which models performed best under specific conditions.

OCR performance varied considerably depending on both the type of data field and the quality of the input image. Fields with complex structures or poor visual clarity introduced higher error rates, revealing the limitations of general-purpose OCR engines when applied to structured documents like driver’s licenses.

Recommendations for Future Work

The analysis provided specific, data-driven recommendations for production development:

- Dataset Enhancement: Expand training data with greater diversity in license types, lighting conditions, and text-background contrast variations

- Preprocessing Optimization: Fine-tune image preprocessing techniques for specific field types and quality conditions

- Hybrid OCR Architecture: Consider combining multiple OCR approaches - using fast libraries for initial processing and accurate libraries for quality refinement

Results

The Proof of Concept successfully demonstrated the viability of automated driver’s license data extraction while providing actionable insights for production development.

Detection Accuracy

The YOLO11m model achieved mean average precision (mAP) exceeding 80%, reliably identifying key license fields across diverse formats. This consistent accuracy provided confidence that the solution could scale to real-world variability. Project execution mirrored these results, with 90% planning accuracy achieved across commitments — up from ~85% earlier in the year — ensuring model development stayed on schedule.

OCR Performance

GOT_OCR delivered the highest extraction accuracy, surpassing 80% for critical fields such as names and ID numbers. This validated that automated OCR could meet operational requirements. Parallel to this, the team achieved 100% of MVP deliverables within expected timelines, ensuring OCR testing phases progressed smoothly without delays.

Processing Trade-offs

The evaluation highlighted speed vs. accuracy trade-offs: EasyOCR (GPU) provided the fastest processing, while GOT_OCR balanced accuracy and robustness. This hybrid approach informed production recommendations. Supporting this adaptability, the delivery team absorbed 20–25% unplanned feature scope, proving the process could handle unexpected demands without disruption.

Technical Roadmap

Opportunities were identified for production scaling, including hyperparameter optimization, expanded datasets, preprocessing refinements, and testing under broader conditions. Execution of this roadmap was supported by improved delivery velocity: average cycle time dropped from 18 days to 13 days, and sprint completion rates consistently exceeded 95% within 13 days across six sprints.

Project Validation

The fixed-cost PoC validated both technical feasibility and delivery capability. Robust collaboration between backend and frontend teams reduced blockers by ~70% compared to the prior quarter, while resource adaptability improved by 80%, ensuring reassignments and onboarding did not affect outcomes. The project not only confirmed technical viability but also demonstrated a scalable, resilient delivery framework.

The Proof of Concept with CENTEGIX demonstrated both the technical and operational viability of automating driver’s license data extraction with AI-powered solutions. By combining advanced object detection and OCR techniques, the project achieved over 80% accuracy in both field detection and text extraction, validating that automation could meet the customer’s operational requirements.

Beyond technical performance, the engagement also proved the strength of Azumo’s delivery model. High planning accuracy, rapid sprint completion rates, and effective cross-team collaboration ensured milestones were consistently met without disruption — even when scope changes arose. This reliability gave CENTEGIX confidence that a production-scale deployment would not only meet accuracy and speed requirements but also be supported by a scalable and resilient delivery framework.

Most importantly, the project eliminated uncertainty. With a fixed-cost PoC approach, CENTEGIX was able to evaluate feasibility, validate performance metrics, and identify a clear technical roadmap for production — all within budget and timeline constraints. The result was a trusted partnership and a foundation for advancing toward full-scale implementation of AI-driven automation in their visitor management workflows.

.avif)