The evolution of Large Language Models (LLMs) has enabled a new generation of intelligent systems — Retrieval-Augmented Generation (RAG) assistants. These assistants bridge the gap between generalized reasoning and domain-specific expertise by combining a language model's generative ability with dynamic access to external data and tools.

Building a multi-tool RAG assistant is about designing a full intelligence stack — one that grounds every answer in reliable data, leverages specialized tools for reasoning and computation, and coordinates multiple agents to produce trustworthy, explainable outputs.

1. The Philosophy Behind RAG Assistants

Traditional chatbots rely on pre-trained knowledge, which quickly becomes stale. RAG assistants, on the other hand, are contextually aware systems that:

- Retrieve the most relevant and up-to-date information from dynamic data sources

- Use that retrieved knowledge to guide generation

- Validate or extend their own outputs through external tools (calculators, APIs, evaluators, or even other AI models)

The goal is to reduce hallucinations, improve factual accuracy, and extend capabilities beyond simple conversation.

Why This Matters for Business

Consider a financial analyst assistant. When asked "What was our Q3 revenue compared to industry benchmarks, and should we adjust our pricing strategy?", a traditional chatbot might hallucinate numbers or provide outdated comparisons. A RAG assistant:

- Retrieves internal Q3 financial reports from your knowledge base

- Fetches current industry benchmarks through market data APIs

- Runs pricing analysis calculations

- Validates the comparison methodology

- Generates a grounded recommendation with traceable sources

This transforms AI from a conversational tool into a strategic business asset.

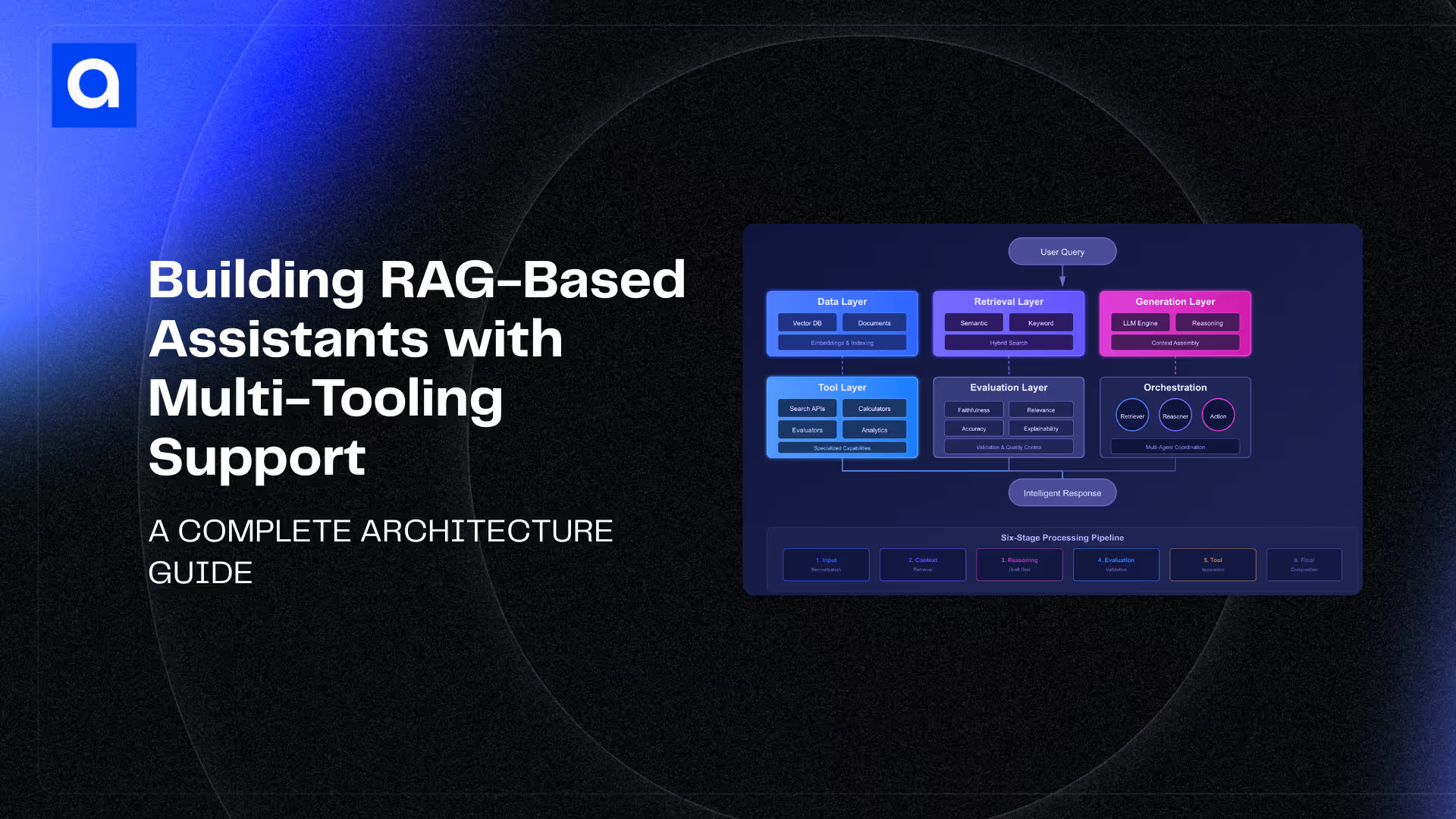

2. Architectural Overview

A well-engineered RAG assistant consists of three main layers, each modular and replaceable:

A. Retrieval Layer: The Factual Backbone

This layer handles how information is stored and found:

Data Ingestion: Your documents, reports, FAQs, and structured data are broken into meaningful sections (typically 500-1500 words each).

Embedding & Indexing: Each section is converted into a mathematical representation and stored in a vector database (like Weaviate, Pinecone, or FAISS).

Contextual Retrieval: When a user asks a question, the system finds the most relevant information using semantic search — understanding meaning, not just matching keywords.

The Key Principle: If someone asks "How does empathy influence decision-making?", the system should surface psychological frameworks, not just generic definitions of empathy. This requires understanding context and relevance, not just keyword matching.

Hybrid Search: Best of Both Worlds

Modern systems combine two search approaches:

Semantic Search: Understands the meaning and context of queries. Great for conceptual questions like "What are best practices for customer retention?"

Keyword Search: Finds exact terms and phrases. Perfect for specific lookups like "Project Phoenix timeline" or "ISO 27001 compliance."

By combining both, the system delivers more accurate results across different query types.

B. Generation Layer: Creating Intelligent Responses

Once relevant information is retrieved, the LLM uses it as grounding context to produce answers. This involves:

Prompt Assembly: Combining the user's question, retrieved context, and instructions about tone and format.

Controlled Reasoning: The LLM generates an answer strictly based on the supplied evidence.

Post-Processing: Validation models check factual accuracy and coherence before presenting the answer.

An important technique here is context compression — summarizing retrieved information to preserve processing capacity for deeper reasoning. Think of it like giving someone a briefing document instead of a 500-page report.

C. Tooling Layer: Where Assistants Become Agents

This is where the assistant becomes truly powerful. Each tool represents a specialized capability:

Search Tools: Query internal databases, external APIs, or specialized sources like medical databases or financial markets.

Computation Tools: Perform calculations, analytics, scoring, or statistical analysis.

Knowledge Tools: Summarize documents, classify information, or extract key insights.

Evaluation Tools: Validate outputs for accuracy, compliance, or alignment with company policies.

Each tool has a clear interface defining what it does, what inputs it needs, and what outputs it provides. When the system identifies a need, it automatically selects and uses the appropriate tool.

Example Tool Definition:

- Name: Sentiment Analysis Tool

- Purpose: Analyzes emotional tone of text

- Input: Text to analyze

- Output: Sentiment (positive/neutral/negative) and confidence score

3. The Multi-Tool Workflow: How It All Works Together

A robust RAG assistant follows a systematic six-stage pipeline:

Stage 1: Input Normalization

The system interprets what you're really asking for. Are you requesting an analysis? A comparison? A recommendation? This stage identifies the intent and determines what capabilities will be needed.

Stage 2: Context Retrieval

The system searches your knowledge base for relevant information using hybrid search (semantic + keyword). Results are ranked by relevance and filtered by any time constraints or domain requirements.

Stage 3: Reasoning and Draft Generation

Using the retrieved information, the LLM constructs a grounded response. If multiple reasoning paths are possible, it may generate alternative hypotheses to evaluate.

Stage 4: Evaluation and Validation

A secondary model checks the reasoning quality and factual grounding. Think of this as an internal quality control step that scores confidence and flags any concerns.

Stage 5: Tool Invocation

The assistant triggers appropriate tools to fill gaps — perhaps running calculations, fetching external data, or performing specialized analysis. Tool results are fed back into the reasoning process.

Stage 6: Final Composition

All pieces come together: the LLM output, tool results, and retrieved facts merge into a cohesive answer complete with source citations and confidence scores.

The modularity ensures easy expansion — new tools can be added without changing the core reasoning logic.

4. Multi-Agent Coordination: Teamwork at Scale

As assistants become more complex, specialized agents can collaborate instead of one model handling everything:

Retriever Agent: Handles searching and filtering information

Reasoner Agent: Performs the main logical reasoning

Evaluator Agent: Validates the reasoning quality and accuracy

Action Agent: Executes tool calls and API interactions

These agents share information through a central memory system, enabling transparent workflows. For example, the Evaluator Agent can challenge the Reasoner's conclusions before presenting them to you, ensuring higher reliability.

Real-World Example: Collaborative Intelligence

A practical example of this kind of multi-agent coordination comes from AWS, which developed a field workforce safety assistant that uses multiple specialized agents to deliver comprehensive site risk assessments.

When a user requests a safety report, an orchestrator agent delegates tasks to domain-specific agents—one fetching live weather data, another checking location-based hazards, and another retrieving emergency protocols. The orchestrator then aggregates and validates the findings, ensuring consistency and reliability before returning the final summary.

This structure closely mirrors a collaborative intelligence workflow for pricing analysis, where agents handle data retrieval, reasoning, and validation in parallel—each contributing a layer of expertise that strengthens the accuracy of the overall response (AWS Industries blog).

5. Making It Production-Ready

To build enterprise-grade assistants, several considerations are essential:

Caching and Performance

Frequently accessed information and common queries are cached to deliver faster responses and reduce costs. Smart caching can cut response times in half.

Knowledge Versioning

Every piece of information carries a timestamp, allowing the system to retrieve "the latest policy update" or "compare 2024 vs. 2025 results." This temporal awareness is crucial for dynamic business environments.

Observability and Transparency

Every retrieval, reasoning step, and tool call is logged. Users can see exactly what data informed an answer, which sources were consulted, and what tools were used. This transparency builds trust and enables debugging.

Human-in-the-Loop Feedback

Subject matter experts can review responses and provide corrections. This feedback continuously improves the system's accuracy and relevance through iterative refinement.

Evaluation Metrics

Faithfulness: Does the answer rely only on retrieved data? (Target: >95%)

Relevance: Are the right documents being retrieved? (Target: >80%)

Latency: Are responses delivered quickly? (Target: <3 seconds for simple queries)

Explainability: Can the system justify its conclusions? (Target: 100% for factual queries)

6. System Architecture at a Glance

7. The Future: Autonomous and Self-Improving Systems

The next evolution includes:

Adaptive Tool Learning: Assistants that automatically learn which tools work best for different situations based on outcome quality.

Self-Improving Retrieval: Systems that dynamically adjust their search strategies based on what works, expanding or contracting scope as needed.

Self-Evaluation Loops: Models that critique their own reasoning and use successful patterns to improve future performance.

Collaborative Intelligence: Multiple specialized assistants (Finance, Legal, Product) working together to solve complex cross-functional challenges.

Ultimately, the goal is creating self-improving, contextually fluent AI systems capable of conducting analysis, running computations, and reasoning collaboratively with humans.

Conclusion

A RAG-based assistant with multi-tooling support is more than a chatbot — it's an adaptive intelligence framework. It combines retrieval, reasoning, and action into a cohesive architecture that evolves with your data, tools, and business goals.

The architecture we've outlined follows modular principles with clean separation between retrieval, reasoning, evaluation, and orchestration. This enables assistants that are:

- Scalable: Grow from prototype to enterprise without rebuilding

- Explainable: Every answer traces back to specific sources

- Adaptable: New tools and data sources integrate seamlessly

- Reliable: Multiple validation layers ensure accuracy

Key Success Factors:

- Robust Retrieval with hybrid search and metadata awareness

- Intelligent Tool Use with proper error handling

- Multi-Agent Coordination through structured communication

- Continuous Evaluation with comprehensive metrics

- Production Readiness including caching, security, and observability

This represents the workflow of enterprise AI — not just answering questions, but becoming a trusted partner in decision-making, analysis, and strategic planning. By grounding every response in verifiable data, leveraging specialized tools, and maintaining complete transparency, RAG assistants are transforming how organizations harness artificial intelligence.

The technology exists today. The question is: how will your organization leverage it to gain competitive advantage? Let’s connect today.

.avif)