Enhancing Psychometric Question Analysis with Large Language Models

Azumo designed and developed a Proof of Concept for an AI-powered talent intelligence company, using Python to evaluate whether Large Language Models can analyze psychometric questions and answers. The engagement produced a structured dataset of 550 psychometric question-answer pairs.

AI-Powered Talent Intelligence Company

The customer partnered with Azumo to explore the use of Large Language Models (LLMs) for psychometric analysis. Their goal was to determine whether an AI system could reliably analyze questions and answers, then categorize them into 50 distinct psychometric values. The engagement was defined as a Proof of Concept (PoC), focused on validating feasibility, building essential infrastructure, and fine-tuning models with real-world data.

The Challenge

Developing a psychometric classification system using AI introduced several unique challenges. The first was the high-dimensional nature of the task: each question and answer needed to be categorized across 50 psychometric values, which demanded both carefully designed annotation workflows and robust quality checks.

The second challenge was limited data availability. The project began with just 105 annotated question-answer pairs. To expand coverage, LLM-generated synthetic data was introduced, adding 550 question-answer pairs, but this synthetic data required further validation to maintain accuracy and avoid bias.

Finally, as this was a feasibility study rather than a production deployment, the customer required a lightweight but functional solution. The interface for visualizing results had to be simple and non-productized, while still enabling validation of performance. All of this had to be achieved under a tight two-month timeline and a $25,000 USD budget cap.

The Solution

Azumo designed a phased, milestone-driven PoC to balance infrastructure setup, labeling, and model development.

The first phase focused on infrastructure. Label Studio was deployed via Docker/VM to provide a secure environment for annotation, while PostgreSQL was used for structured storage of labeling data. Python and standard data science frameworks formed the backbone of the modeling environment.

The next phase emphasized data labeling and quality control. Custom workflows were built in Label Studio to capture the 50 psychometric values. Inter-rater reliability checks ensured consistency across annotators, and outlier detection helped flag unusual or potentially misclassified entries. Communication was streamlined through shared Slack and email channels for asynchronous feedback.

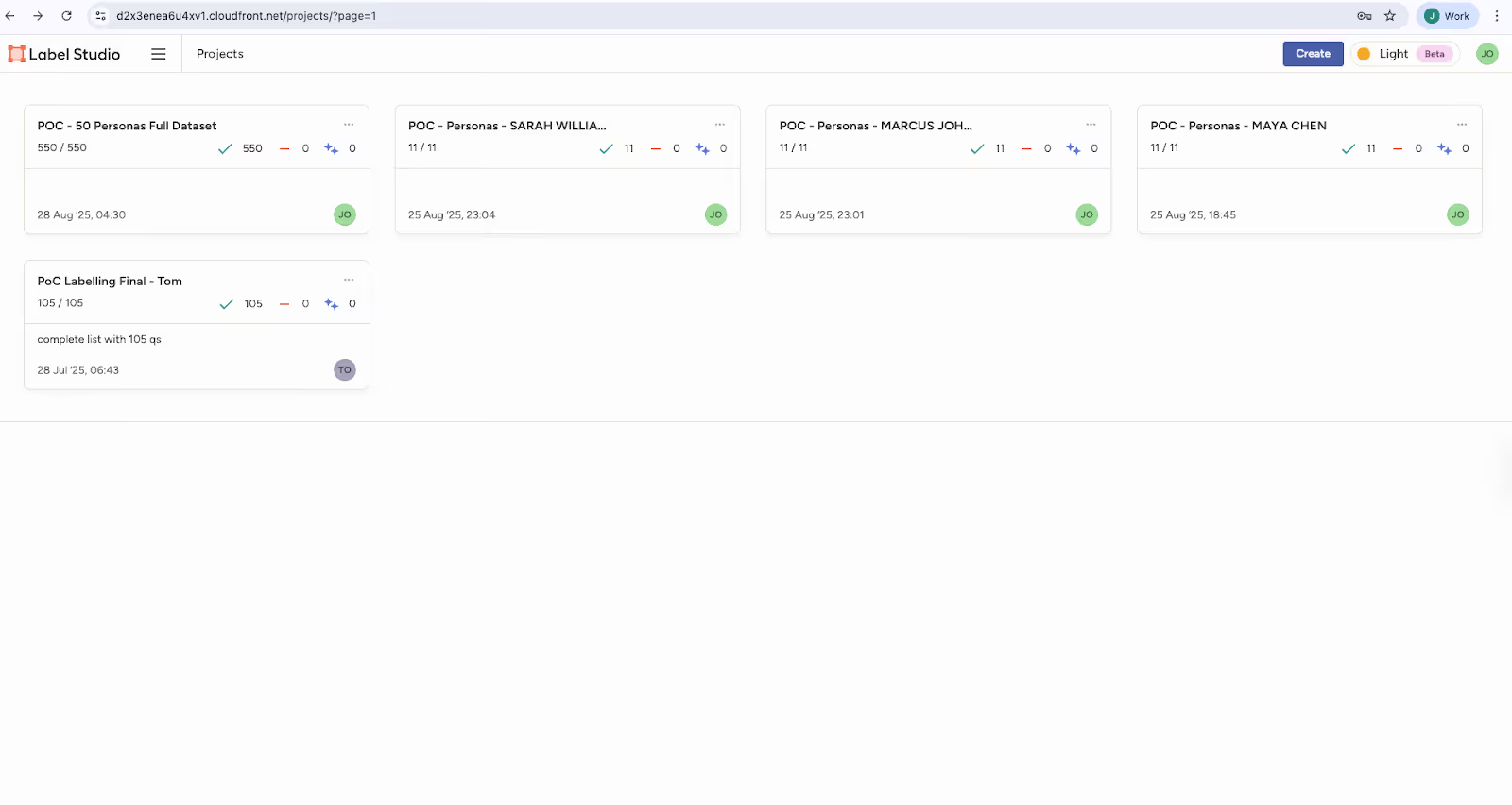

This screenshot highlights the use of Label Studio for managing annotation workflows. Each dataset was organized into projects, allowing annotators to classify question-answer pairs across multiple psychometric categories. Built-in quality checks monitored reliability, while the project interface provided visibility into labeling progress and dataset completeness.
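The inter-rater reliability checks described above can be illustrated with a short sketch. The case study does not say which agreement statistic was used, so Cohen's kappa is an assumption here, and the label names and annotator data are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Share of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for six question-answer pairs (not project data).
annotator_1 = ["openness", "empathy", "empathy", "grit", "openness", "grit"]
annotator_2 = ["openness", "empathy", "grit", "grit", "openness", "empathy"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")
```

A kappa near 1 indicates strong agreement, while values near 0 suggest agreement no better than chance, which would be a signal to revisit the labeling guidelines for that value.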

Once a baseline dataset was prepared, the focus shifted to model development. Fine-tuning of LLMs was performed using LoRA/QLoRA techniques, which optimized training for efficiency while preserving accuracy. Classification and regression models were tested to benchmark performance, with iterative improvements guided by interim milestone reviews. APIs and callable scripts were also delivered to allow inference and testing outside of the core environment.
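To give a sense of why LoRA keeps fine-tuning efficient, the sketch below works through the parameter-count arithmetic. LoRA freezes a weight matrix of shape d_out × d_in and trains a low-rank update made of two smaller matrices, d_out × r and r × d_in; QLoRA applies the same adapters on top of a quantized base model. The layer size and rank shown are illustrative assumptions, not the project's actual settings.

```python
def full_params(d_out, d_in):
    """Trainable weights when the whole matrix is fine-tuned."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable weights for a LoRA update B @ A, with B: d_out x rank and A: rank x d_in."""
    return rank * (d_out + d_in)


# Illustrative values only; the case study does not state model size or rank.
d_out, d_in, rank = 4096, 4096, 8
full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
ratio = lora / full
print(f"full: {full:,}  lora: {lora:,}  share trained: {ratio:.4%}")
```

Under these assumed dimensions, the adapter trains well under one percent of the weights in that layer, which is what makes fine-tuning practical on a small budget and dataset.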

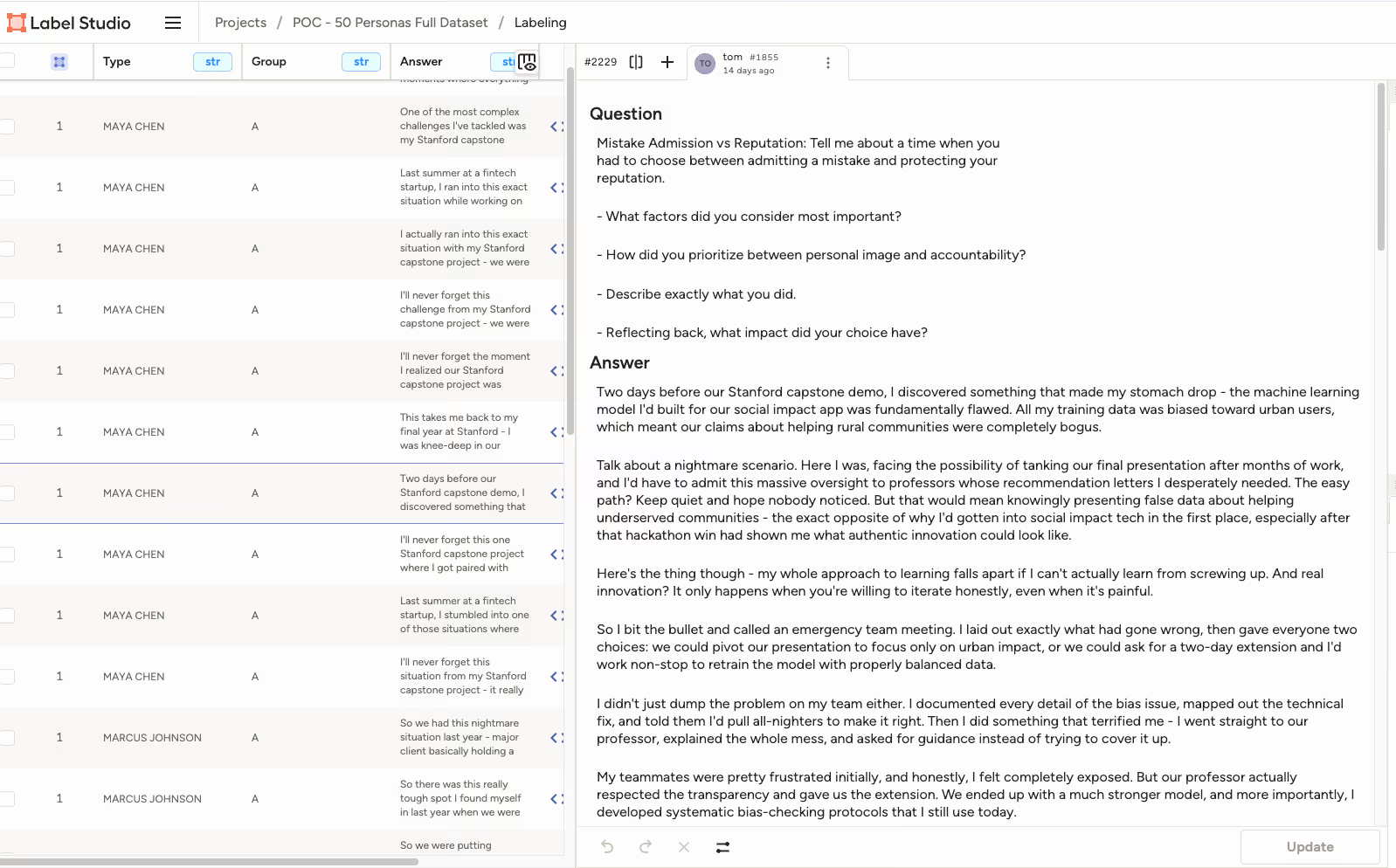

This figure shows the structured dataset after export. Each row represents a question-answer pair, while columns encode the 50 psychometric dimensions. Outlier detection ensured the quality of the dataset, and the balanced tabular structure made it ready for ingestion into machine learning pipelines. This dataset became the foundation for fine-tuning and validation.
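A minimal sketch of this layout and of one possible outlier check follows. It assumes each psychometric value is stored as a numeric score per row and uses a simple z-score rule; the case study does not describe the actual detection method, and the column names, scores, and threshold are hypothetical. Only two of the 50 columns are shown.

```python
from statistics import mean, pstdev


def flag_outliers(rows, columns, z_threshold=2.0):
    """Return (pair_id, column) for scores far from their column mean."""
    flagged = []
    for col in columns:
        values = [row[col] for row in rows]
        mu, sigma = mean(values), pstdev(values)
        if sigma == 0:
            continue  # every row has the same score, nothing to flag
        for row in rows:
            if abs(row[col] - mu) / sigma > z_threshold:
                flagged.append((row["pair_id"], col))
    return flagged


# One row per question-answer pair, one column per psychometric value.
columns = ["value_01", "value_02"]
rows = [
    {"pair_id": 1, "value_01": 0.40, "value_02": 0.20},
    {"pair_id": 2, "value_01": 0.50, "value_02": 0.30},
    {"pair_id": 3, "value_01": 0.45, "value_02": 0.25},
    {"pair_id": 4, "value_01": 0.50, "value_02": 0.30},
    {"pair_id": 5, "value_01": 0.55, "value_02": 0.20},
    {"pair_id": 6, "value_01": 5.00, "value_02": 0.25},  # likely entry error
]
flagged = flag_outliers(rows, columns)
print(flagged)
```

Flagged entries would go back to annotators for review rather than being dropped automatically, since an unusual score can also be a legitimate edge case.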

Throughout the engagement, weekly check-ins and demonstrations gave the customer ongoing visibility into progress, while milestone reviews offered opportunities to validate data quality, model outputs, and interim results.

Results

- Dataset Creation – 550 psychometric question-answer pairs were annotated and structured across 50 values, ready for training.

- Model Fine-tuning – Initial fine-tuned models showed promising classification precision, confirming feasibility.

- Infrastructure Setup – A working pipeline was delivered, including Docker/VM-hosted Label Studio, PostgreSQL storage, and callable inference scripts.

- Metrics & Findings – Evaluation metrics and criteria were shared, along with the findings, their implications, and recommended next steps.

- Collaboration Outcomes – Weekly demos, transparent milestone tracking, and lightweight visualizations ensured alignment within budget and scope.
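As an illustration of the kind of metric referred to above, the sketch below computes micro-averaged precision and recall for multi-label predictions. It assumes each question-answer pair can carry several psychometric values; the case study does not list the exact metrics reported, and the labels shown are hypothetical.

```python
def micro_precision_recall(true_sets, pred_sets):
    """Micro-averaged precision and recall over per-item label sets."""
    tp = fp = fn = 0
    for truth, pred in zip(true_sets, pred_sets):
        tp += len(truth & pred)   # labels predicted and correct
        fp += len(pred - truth)   # labels predicted but not annotated
        fn += len(truth - pred)   # annotated labels the model missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical annotations and model outputs for three pairs.
truth = [{"empathy", "grit"}, {"openness"}, {"grit"}]
pred = [{"empathy"}, {"openness", "grit"}, {"grit"}]
precision, recall = micro_precision_recall(truth, pred)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
```

With 50 possible values and a small dataset, per-value breakdowns of the same counts would also help show which categories need more labeled examples.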

The Proof of Concept confirmed the feasibility of applying LLMs to psychometric classification. Beyond infrastructure and model development, the customer gained actionable insights into performance trade-offs, labeling requirements, and next steps for scaling. The delivered solution created a reusable framework for expanding datasets and moving toward production-ready systems.