When Andrej Karpathy first floated the idea of “vibe coding” earlier this year, the concept felt almost whimsical: describe what you want in natural language, and let AI generate the software. For a solo developer, it was intoxicating—suddenly you could spin up an MVP in an afternoon. The internet filled with stories of weekend hackers building games, apps, and prototypes without ever touching boilerplate code.

But as the hype trickled into enterprise conversations, engineering leaders began asking tougher questions. Could this work at scale? Could a bank, a healthcare provider, or a Fortune 500 SaaS company rely on “vibes” to ship production-grade software? And what happens when dozens of developers are “vibing” at once?

What Is Enterprise Vibe Coding?

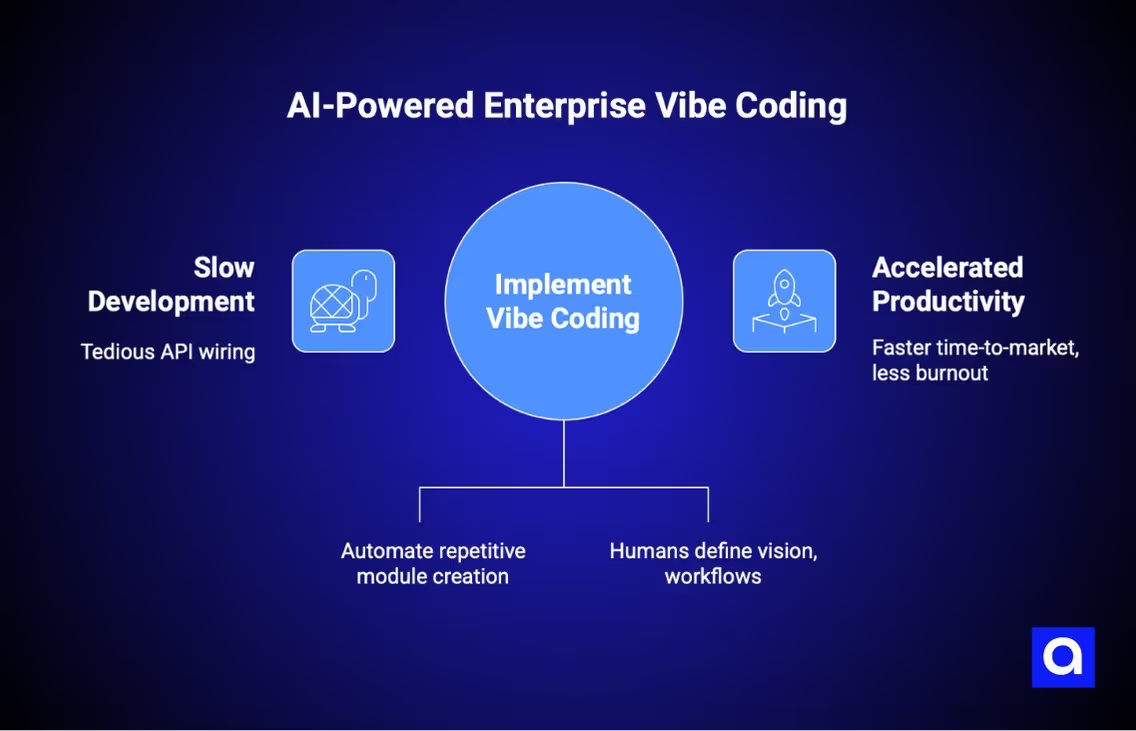

At its best, enterprise vibe coding feels like a superpower. Instead of spending hours wiring up APIs or scaffolding repetitive modules, developers jump straight into creative flow. Product managers and designers can sketch out workflows that the AI instantly translates into functional prototypes. Amazon has even positioned vibe coding within its AWS ecosystem as the natural evolution of developer productivity—where AI handles the grind and humans focus on architecture and vision (Business Insider).

For tech leaders, this is compelling. Faster time-to-market, empowered cross-functional teams, and reduced developer burnout all sit on the table. And the investment world is paying attention: when Cursor, an AI-native IDE built by Anysphere, closed a $900 million Series C at a $9 billion valuation, it wasn’t just hype. The platform is reportedly generating billions of lines of code every day, with customers including Stripe, OpenAI, and Spotify putting it to real-world use (Financial Times).

The Reality Check in Larger Teams

Yet, the honeymoon phase fades quickly when organizations try to scale. A lone developer may laugh off messy AI code, but in a team of 200 engineers, inconsistency is poison. One prompt generates a React component with a bespoke styling library, another spits out something with Tailwind, and a third produces plain CSS. Multiply this by a hundred commits, and suddenly your codebase is a Frankenstein’s monster.

Then there’s the matter of token burn. A team experimenting without guardrails can rack up costs before realizing they’ve generated more snippets than shippable features. And that’s not even touching the bigger issues: security blind spots, compliance risks, and the erosion of shared coding standards. As TechRadar observed, vibe coding carries enormous promise, but “without governance, it risks accelerating technical debt faster than innovation” (TechRadar).

Why Are Enterprises Embracing Vibe Coding?

The companies succeeding with vibe coding aren’t blindly vibing—they’re steering it with intent. They’ve learned that the real question isn’t “Can AI code?” but “Where should AI code?”

At Notion, for example, vibe coding isn’t just a thought experiment. A Wired feature documented how the company invited a journalist to participate in AI-powered prototyping sessions, using tools like Cursor and Claude to co-create features alongside its engineers. The experiment revealed both the thrill of rapid generation and the importance of human oversight to keep prototypes usable and aligned with product strategy (Wired).

In practice, successful teams enforce discipline. They establish shared prompt libraries so outputs align in architecture and style. They funnel AI contributions through the same CI/CD pipelines as human-written code, with security scans and review loops intact. And they track ROI—not just by counting tokens, but by measuring whether vibe coding actually accelerates delivery without compounding technical debt.
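To make the shared prompt library idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the convention values, the `ui_component` template, and the `build_prompt` helper are hypothetical names, not part of any particular tool. The point is only that team conventions get injected into every prompt, so two developers asking for a component are much less likely to get three different styling approaches back.

```python
from string import Template

# Hypothetical team conventions; the values are illustrative only.
TEAM_CONVENTIONS = {
    "framework": "React with TypeScript",
    "styling": "Tailwind CSS",
    "testing": "a unit test for every exported component",
}

# Hypothetical shared templates, keyed by task type.
PROMPT_LIBRARY = {
    "ui_component": Template(
        "Write a $framework component named $name.\n"
        "Style it with $styling only; do not add other styling libraries.\n"
        "Include $testing.\n"
        "Requirements: $requirements"
    ),
}


def build_prompt(task: str, **details: str) -> str:
    """Render a shared template, filling in team conventions automatically."""
    template = PROMPT_LIBRARY[task]
    # substitute() raises KeyError if any placeholder is left unfilled,
    # so an incomplete prompt fails loudly instead of reaching the model.
    return template.substitute({**TEAM_CONVENTIONS, **details})


prompt = build_prompt(
    "ui_component",
    name="InvoiceTable",
    requirements="sortable columns and pagination",
)
print(prompt)
```

In a real setup the templates would likely live in version control next to the code they shape, so changes to them go through review like anything else.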

A Different Kind of Collaboration

Perhaps the most transformative shift isn’t even about code—it’s about collaboration. Enterprise vibe coding lets designers sketch UI flows that engineers validate, or product managers write user stories that become test scaffolds overnight. Suddenly, non-engineers aren’t just spectators to development; they’re participants.

But that power can only be unlocked if boundaries are respected. A designer can prototype, but it’s still an engineer’s job to refine and secure. A PM can generate tests, but QA must validate. In other words, vibe coding can broaden who “codes”—but it doesn’t erase the need for engineering expertise.

Common Challenges and Risks in AI-Driven Coding

Speaking from experience, the promise of vibe coding in the enterprise is huge, but it doesn’t come without some very real challenges. These are the ones we keep running into when helping enterprises adopt AI-driven development at scale:

Quality Control Issues

AI can code faster than a human, but speed rarely means quality. One day it will churn out clean, production-quality React components. The next, it produces bloated functions, strange naming conventions, or logic that only the model understands. That inconsistency makes the code a nightmare to maintain over the long run, especially for the developers who later have to work out what the AI was "thinking." Without careful stewardship, you risk trading short-term velocity for long-term technical debt.

Token Costs

Enterprise projects don’t just generate a few lines of code; they involve massive codebases, documentation, and complex integrations. Every interaction with an LLM consumes tokens, and those tokens add up quickly. We’ve seen organizations shocked by their monthly AI bills when they don’t monitor usage carefully. The good news is that with strategies like batching prompts, caching common responses, and fine-tuning models on your domain, costs can be kept under control without sacrificing performance.
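As one illustration of caching common responses, the sketch below wraps a model call in a small in-memory cache. It is a simplified example under stated assumptions: `PromptCache` and the `call_model` callable are hypothetical names, the cache only matches prompts that are identical after whitespace normalization, and a production version would likely need expiry, persistence, and care with prompts where whitespace is significant.

```python
import hashlib
from typing import Callable, Dict


class PromptCache:
    """In-memory cache that avoids paying twice for the same prompt."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        # call_model is a hypothetical stand-in for whatever LLM client a team uses.
        self._call_model = call_model
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Collapse runs of whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def complete(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: this is the only path that consumes tokens.
        self.misses += 1
        response = self._call_model(prompt)
        self._store[key] = response
        return response


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, used only to exercise the cache."""
    return f"response to: {prompt}"


cache = PromptCache(fake_model)
cache.complete("Generate a CRUD endpoint for invoices")
cache.complete("Generate a CRUD   endpoint for invoices")  # served from cache
print(cache.hits, cache.misses)
```

The hit and miss counters are there on purpose: they give a rough signal of how much repeated prompting a team is doing.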

Governance and Compliance Risks

AI doesn’t inherently know your company’s compliance obligations. It can just as easily generate code that violates internal policies or external regulations like GDPR or HIPAA. We’ve seen developers bypass security checks for the sake of speed, which is a compliance nightmare waiting to happen. Without guardrails, it’s easy for enterprises to end up with code that works but puts them at legal or ethical risk.

Integration with Legacy Systems

AI tools are great at spinning up new features in modern stacks like React, Node, or Firebase. But most enterprises aren’t starting fresh; they’re working with decades-old systems that weren’t designed for AI-generated code. That’s where integration gets messy. We’ve had projects where the AI wrote elegant microservices, but they choked when bolted onto a legacy COBOL or SAP backend. Stability and compatibility become big concerns when AI tries to “bridge” across generations of tech.

Bias and Error Propagation

Finally, AI models can only reflect the data they were trained on. If that training data contains errors or bad patterns, they will show up in the generated code too. Worse, once those mistakes find their way into enterprise systems, they snowball quickly. For example, a buggy authentication path generated by the AI affects not just one app; it can cascade to every system it touches. That is why uncurated AI output is so dangerous: bugs can compound faster than they do in conventional development.

Best Practices: How Enterprises Maintain Quality While Scaling AI

At Azumo, we’ve been building with AI long enough to know that speed without discipline leads to disaster. The enterprises that succeed with vibe coding aren’t the ones moving the fastest; they’re the ones putting the right practices in place to balance agility with safety. Here’s what we recommend (and what we practice ourselves):

Establishing Clear Guidelines

Before the AI writes a single line of code, we work alongside businesses to set ground rules. That means defining coding standards, documentation guidelines, and security protocols that all AI contributions must conform to. When everybody knows the playbook, AI is a force multiplier and not a liability.

Human-in-the-Loop Approach

This is not up for debate: AI supplements developers; it does not replace them. Every piece of AI-generated code requires human review. We advise teams to treat AI like a junior developer: it can write code, but a senior engineer has to approve it, refactor it, and check it for compliance. This keeps quality high while still gaining velocity.

Automated Testing and Continuous Monitoring

You can't rely on AI to police its own output. That is why we always run AI-generated code through continuous integration pipelines with automated tests: unit tests, integration tests, and security scans. When the output goes off track, we catch it right away, not weeks later in production.
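A merge gate along these lines might look like the following sketch. It is an assumption-laden illustration, not a description of any specific CI product: the `ChangeSet` fields and the `merge_blockers` function are hypothetical, and in practice the inputs would come from the pipeline's test, scan, and review steps.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChangeSet:
    """Hypothetical summary of a proposed change, filled in by the CI pipeline."""

    ai_generated: bool
    tests_passed: bool
    security_scan_passed: bool
    senior_approvals: int = 0


def merge_blockers(change: ChangeSet) -> List[str]:
    """Return the reasons a change cannot merge; an empty list means it can."""
    blockers: List[str] = []
    # The same automated checks apply to human-written and AI-generated code.
    if not change.tests_passed:
        blockers.append("automated tests failed")
    if not change.security_scan_passed:
        blockers.append("security scan failed")
    # AI-generated changes additionally need sign-off from a senior engineer.
    if change.ai_generated and change.senior_approvals < 1:
        blockers.append("AI-generated change needs senior engineer approval")
    return blockers


change = ChangeSet(ai_generated=True, tests_passed=True, security_scan_passed=False)
for reason in merge_blockers(change):
    print("blocked:", reason)
```

Returning the full list of reasons, instead of stopping at the first failure, lets a developer fix everything in one pass.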

AI Training and Fine-Tuning

Out-of-the-box LLMs are powerful, but they don't know your business. The best results come when you fine-tune models on your codebase, industry trends, and regulatory requirements. We've seen quality and consistency improve dramatically once organizations started training models on their own data.

Cross-Functional Collaboration

AI coding isn’t just a dev team issue; it touches compliance, security, and business units. We’ve had the most success when data scientists, engineers, and compliance officers are in the same loop. That way, the AI doesn’t just produce functional code; it produces appropriate code that aligns with organizational standards.

Optimizing Resource Usage

Last but not least: watch your tokens like you’d watch your cloud spend. Enterprises should set up dashboards to track token usage, experiment with prompt efficiency, and leverage caching where possible. Optimizing prompts doesn’t just save money; it often also improves output quality.
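The data behind such a dashboard can start very simply. The sketch below aggregates token usage into cost per team and flags teams that exceed a budget. All names and numbers are assumptions for illustration: `UsageRecord`, `cost_by_team`, and `over_budget` are hypothetical, and the per-token prices are placeholders, not any vendor's actual rates.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Placeholder prices in USD per 1,000 tokens; substitute your provider's real rates.
PRICE_PER_1K_PROMPT = 0.003
PRICE_PER_1K_COMPLETION = 0.015


@dataclass
class UsageRecord:
    """One logged model call, attributed to a team."""

    team: str
    prompt_tokens: int
    completion_tokens: int


def cost_by_team(records: Iterable[UsageRecord]) -> Dict[str, float]:
    """Sum estimated spend per team across all logged calls."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.team] += (
            record.prompt_tokens / 1000 * PRICE_PER_1K_PROMPT
            + record.completion_tokens / 1000 * PRICE_PER_1K_COMPLETION
        )
    return dict(totals)


def over_budget(costs: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """List teams whose spend exceeds their budget, in alphabetical order."""
    # A team with no budget entry is treated as having a budget of zero,
    # so unbudgeted spend shows up instead of being ignored.
    return sorted(
        team for team, cost in costs.items() if cost > budgets.get(team, 0.0)
    )


records = [
    UsageRecord("payments", prompt_tokens=1_000_000, completion_tokens=200_000),
    UsageRecord("search", prompt_tokens=100_000, completion_tokens=10_000),
]
costs = cost_by_team(records)
print(over_budget(costs, budgets={"payments": 5.0, "search": 5.0}))
```

Attributing usage to teams rather than individuals is a design choice here: it keeps the focus on process and budgets, not on policing single developers.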

Looking Ahead: Enterprise Vibe Coding vs. Agents

What makes this moment particularly interesting is that enterprise vibe coding isn’t the final destination. Research suggests we’re heading toward agentic AI coding, where autonomous agents plan, test, and iterate code with minimal human involvement (arXiv). Enterprise vibe coding may prove to be the creative front-end of this evolution—the brainstorming layer where humans and AI co-design—while agents handle the heavy lifting of execution and scaling.

For today’s tech leaders, that means preparing for a hybrid world: one where vibe coding fuels innovation sprints and agentic systems enforce rigor in production.

The Leadership Imperative

So what does this all mean for CTOs and VPs of Engineering? It means vibe coding cannot be dismissed as a fad, nor embraced as a cure-all. It must be curated. Leaders should encourage experimentation, but with discipline. They must celebrate the creativity that vibe coding unlocks, while investing in the governance that keeps enterprise systems secure, maintainable, and cost-efficient.

The frustration some teams feel—burning tokens with nothing to show—isn’t a failure of vibe coding. It’s a failure of process. And process is precisely where leaders earn their keep.

In the end, vibe coding isn’t about letting AI take over development. It’s about reimagining how humans and AI build together. The companies that figure this out first will not only ship faster—they’ll redefine what it means to scale software in the age of AI.
