
Assessing the Effectiveness of Your CI/CD Strategy

CI/CD gets plenty of attention for its theoretical advantages, but in practice it is often implemented as tools and processes that operate in isolation from the product engineers are actually building.

How can we improve our CI/CD strategy? An often-overlooked step is to design it with the same rigor we apply to application design or scalability planning. What factors define a robust CI/CD strategy?

One crucial aspect is performance, which directly affects software engineers. Long continuous integration or deployment runs mean developers wait longer for feedback on bugs and for their changes to reach users.

Another key factor is the flexibility of our CI/CD processes: how quickly and safely they can be extended without extensive rework. It is also vital to assess how tightly our strategy is bound to our chosen CI/CD provider. Many teams opt for service-based solutions such as GitHub Actions or CircleCI, or for the offerings of cloud providers such as AWS, Azure, or GCP, but it is worth questioning the rigidity these technologies impose. A related question is how easily the same pipelines can be reproduced in a local environment that mirrors the remote one. Discrepancies between local and remote processes are common within organizations and undermine consistency.

Cost efficiency is another critical consideration. Historically, it mattered less because build environments were predominantly stateful: machines stayed up continuously, so cached dependencies and artifacts kept builds fast. Containerization, WebAssembly, and scale-to-zero strategies, however, often mean starting from environments without those caches, which slows compilation and deployment and inflates costs.

Strategically, how much of our CI/CD logic lives in the product itself? CI/CD is traditionally treated as an add-on, yet it carries real logic, from compiling applications with specific parameters to orchestrating artifact uploads and running integration tests. Treating that logic as part of the product architecture can significantly strengthen our overall CI/CD strategy.
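As a small illustration of treating pipeline logic as part of the product, the plan for a Node.js project can be written as a plain, unit-testable function in the project's own language. The `planPipeline` function and the shape of its output are hypothetical, chosen to mirror the test/build matrix used later in this article; they are not part of any CI/CD provider's or Dagger's API.

```javascript
// Hypothetical sketch: the CI/CD plan as ordinary, unit-testable product code.
// planPipeline, nodeVersions, runTests and exportTo are illustrative names only.
function planPipeline({ nodeVersions, runTests = true }) {
  if (!Array.isArray(nodeVersions) || nodeVersions.length === 0) {
    throw new Error("planPipeline: at least one Node.js version is required");
  }
  // One entry per Node.js version: base image, commands to run, output folder.
  return nodeVersions.map((version) => ({
    image: `node:${version}`,
    steps: [
      ["npm", "install"],
      ...(runTests ? [["npm", "test", "--", "--watchAll=false"]] : []),
      ["npm", "run", "build"],
    ],
    exportTo: `./build-node-${version}`,
  }));
}

// Usage: the plan can be inspected locally or unit-tested before any runner executes it.
const plan = planPipeline({ nodeVersions: ["16", "18"] });
console.log(JSON.stringify(plan, null, 2));
```

Because the plan is just data produced by a function, the same definition could be consumed by a local script, a CI job, or a Dagger pipeline, and changes to it can be reviewed and tested like any other code.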

Current State of CI/CD Systems

Today's CI/CD systems are typically configuration-based and tightly integrated with their provider. That integration gives close access to the provider's resources, but it also tends to separate the pipeline from the final product. Because the pipeline depends on its environment and can rarely be run locally, it is hard to optimize. In summary, current CI/CD systems are:

- Configuration-based

- Tightly integrated with the provider

- Typically segregated from the final product

- Complex to optimize

- Environment-dependent

- Limited local execution capabilities

CI/CD-Pipelines with Dagger.io

… and escape YAML-hell along the way

Build and CI/CD systems are powerful tools that complement each other well.

Systems like Gradle or Maven help us move from code to running software artifacts. But once we move into a highly collaborative setting, the need for a central CI/CD system soon emerges. Scalable and reproducible build environments for a team are just too helpful to ignore.

Sadly, testing and developing complex pipelines is often tedious because we have to wait for the central system to pick up and process our jobs. Wouldn't it be fabulous if we could develop and run our pipeline steps locally without modifying them? This is exactly where dagger.io comes into play.

By combining containers, which isolate individual build steps, with CUE, a language for expressive, declarative, and type-safe configuration (used in Dagger's early releases), dagger.io creates a flexible build system that, thanks to containers, can be extended in different programming languages.

Dagger, created by Solomon Hykes, co-founder and former CTO of Docker, addresses this problem. Just as Docker helps you bridge the gap between your development and production environments by packaging your logic into Docker images, Dagger does the same for your pipelines by running your workflow in containers.

Dagger is not just about closing the gap between local and remote; it also decouples your pipeline logic from your CI/CD tool's configuration language. You can keep using your favorite CI/CD tool but write the pipeline logic in the language you prefer (Dagger supports Node.js, Go, and Python).

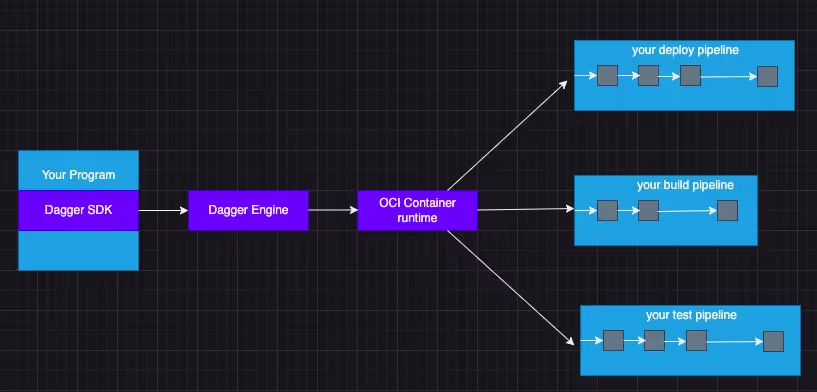

How Does It Work?

- Your program imports the Dagger SDK in your language of choice.

- Using the SDK, your program opens a new session to a Dagger Engine, either by connecting to an existing engine or by provisioning one on the fly.

- Using the SDK, your program prepares API requests describing pipelines to run and then sends them to the engine. The wire protocol used to communicate with the engine is private and not yet documented, but this will change in the future. For now, the SDK is the only documented API available to your program.

- When the engine receives an API request, it computes a Directed Acyclic Graph (DAG) of low-level operations required to compute the result and starts processing operations concurrently.

- When all operations in the pipeline have been resolved, the engine sends the pipeline result back to your program.

- Your program may use the pipeline's result as input to new pipelines.
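The engine behaviour described in the list above (build a DAG of operations, then process independent operations concurrently) can be illustrated with a small pure-JavaScript model. This is a sketch of the general technique, not Dagger's engine or API: `runDag` and the operation names are hypothetical, each operation is assumed to be an async function of its dependencies' results, and the graph is assumed to be acyclic (the sketch throws if it finds a cycle).

```javascript
// Toy DAG evaluator (NOT Dagger's engine): every operation runs once, after its
// dependencies resolve; operations that do not depend on each other overlap.
function runDag(ops) {
  const results = new Map(); // operation name -> Promise of its result
  const visiting = new Set(); // names on the current path, for cycle detection

  function evaluate(name) {
    if (results.has(name)) return results.get(name); // already scheduled: reuse
    const op = ops[name];
    if (!op) throw new Error(`unknown operation: ${name}`);
    if (visiting.has(name)) throw new Error(`cycle detected at: ${name}`);
    visiting.add(name);
    const inputs = op.deps.map(evaluate); // schedule dependencies first
    visiting.delete(name);
    const promise = Promise.all(inputs).then((values) => op.run(...values));
    results.set(name, promise);
    return promise;
  }

  return evaluate;
}

// Usage: "test" and "build" both depend only on "install", so they run concurrently.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
const log = [];
const pipeline = {
  source: { deps: [], run: async () => { log.push("source"); return "src"; } },
  install: { deps: ["source"], run: async (src) => { log.push("install"); return `${src}+deps`; } },
  test: { deps: ["install"], run: async () => { await sleep(20); log.push("test"); return "tests ok"; } },
  build: { deps: ["install"], run: async () => { await sleep(10); log.push("build"); return "build/"; } },
  publish: { deps: ["test", "build"], run: async (t, b) => { log.push("publish"); return `${t}, ${b}`; } },
};

runDag(pipeline)("publish").then((result) => {
  console.log(result); // tests ok, build/
  console.log(log.join(" -> ")); // source -> install -> build -> test -> publish
});
```

Memoizing each operation's promise is what makes a shared dependency such as `install` run only once even though two downstream operations ask for it.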

Which SDK Should I Use?

| If you are... | Use |
|---|---|
| A Go developer | Go SDK |
| A Node.js developer | Node.js SDK |
| A TypeScript/JavaScript developer | Node.js SDK |
| A Python developer | Python SDK |
| A fan of shell scripts | Dagger CLI |

In my case, I am most comfortable working with Node.js, so the examples below use the Node.js SDK.

Basic Example with Dagger

Step 1: Install the Dagger Node.js SDK

```shell
npm install @dagger.io/dagger@latest --save-dev
```

Step 2: Create a Dagger Client in Node.js

In your project directory, create a new file named build.js and add the following code to it.

/**

* Developer: Esteban Martin Li Causi

* Company: AZUMO LLC

*/

;(async function () {

// initialize Dagger client

let connect = (await import("@dagger.io/dagger")).connect

connect(

async (client) => {

// get Node image

// get Node version

const node = client.container().from("node:16").withExec(["node", "-v"])

// execute

const version = await node.stdout()

// print output

console.log("Hello from Dagger and Node " + version)

},

{ LogOutput: process.stderr }

)

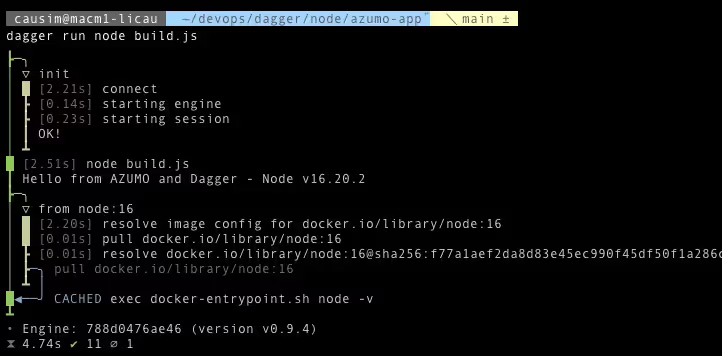

})()This Node.js stub imports the Dagger SDK and defines an asynchronous function. This function performs the following operations:

- It creates a Dagger client with connect(). This client provides an interface for executing commands against the Dagger engine.

- It uses the client's container().from() method to initialize a new container from a base image. In this example, the base image is the node:16 image. This method returns a Container representing an OCI-compatible container image.

- It uses the Container.withExec() method to define the command to be executed in the container - in this case, the command node -v, which returns the Node version string. The withExec() method returns a revised Container with the results of command execution.

- It retrieves the output stream of the last executed command with the Container.stdout() method and prints the result to the console.
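One detail behind the bullets above: as I understand Dagger's model, SDK objects such as Container are immutable and lazily evaluated. Each with*() call returns a new object describing one more step, and the engine does work only when a result such as stdout() is requested. The toy class below models just that behaviour in plain JavaScript; `ToyContainer` and `executedPlans` are hypothetical names, and nothing here talks to a Dagger Engine.

```javascript
// Toy model of an immutable, lazily evaluated builder (NOT the Dagger SDK).
// Each with*() call returns a new object; nothing is evaluated until stdout().
let executedPlans = 0; // how many times a recorded plan was actually evaluated

class ToyContainer {
  constructor(ops = []) {
    this.ops = Object.freeze([...ops]); // recorded steps, never mutated
  }

  from(image) {
    return new ToyContainer([...this.ops, `from ${image}`]);
  }

  withExec(args) {
    return new ToyContainer([...this.ops, `exec ${args.join(" ")}`]);
  }

  // Evaluation happens only here; the "output" is simulated as the plan text.
  async stdout() {
    executedPlans++;
    return this.ops.join(" -> ");
  }
}

const base = new ToyContainer().from("node:16");
const withVersion = base.withExec(["node", "-v"]);
console.log(executedPlans); // 0: building the chain evaluated nothing
console.log(base.ops.length); // 1: withExec() left `base` unchanged

withVersion.stdout().then((out) => {
  console.log(out); // from node:16 -> exec node -v
  console.log(executedPlans); // 1
});
```

Immutability is what lets later steps safely branch from a shared object, as the runner constant does in the next step, without one branch affecting another.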

Run the Node.js CI tool by executing the command below from the project directory:

```shell
node build.js
```

Because Dagger runs its engine in a container (on Docker by default) and caches the results of operations it has already executed, running the script a second time should finish faster.
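The speed-up on a second run comes from caching. A common way to cache build steps, and broadly the idea behind the BuildKit-based caching that the Dagger Engine relies on, is content addressing: derive a key from a step's command and its inputs, and reuse the stored result whenever the same key appears again. The `StepCache` class below is a hypothetical, in-memory sketch of that idea using Node's built-in crypto module; it is not Dagger's actual cache implementation.

```javascript
// Hypothetical in-memory, content-addressed step cache (NOT Dagger's cache).
const crypto = require("crypto");

class StepCache {
  constructor() {
    this.entries = new Map(); // cache key -> stored result
    this.hits = 0;
    this.misses = 0;
  }

  // The key depends only on the command and the keys of the step's inputs.
  key(command, inputKeys = []) {
    return crypto
      .createHash("sha256")
      .update(JSON.stringify({ command, inputKeys }))
      .digest("hex");
  }

  // Call `execute` only when no result is stored under this key yet.
  run(command, inputKeys, execute) {
    const k = this.key(command, inputKeys);
    if (this.entries.has(k)) {
      this.hits++;
    } else {
      this.misses++;
      this.entries.set(k, execute());
    }
    return { key: k, result: this.entries.get(k) };
  }
}

// Usage: an identical step is a cache hit; changing an input produces a new key.
const cache = new StepCache();
let executions = 0;
const nodeVersion = (image) =>
  cache.run(["node", "-v"], [image], () => {
    executions++;
    return `ran node -v in ${image}`;
  });

nodeVersion("node:16"); // miss: executes
nodeVersion("node:16"); // hit: same command and inputs
nodeVersion("node:18"); // miss: different input, so a different key
console.log(cache.hits, cache.misses, executions); // 1 2 2
```

Keying on inputs rather than on time or machine identity is what lets such a cache stay correct: a step is reused only when nothing it depends on has changed.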

Step 3: Test Against a Single Node.js Version

Replace the contents of build.js from the previous step with the version below:

```javascript
/**
 * Developer: Esteban Martin Li Causi
 * Company: AZUMO LLC
 */

;(async function () {
  // initialize Dagger client
  let connect = (await import("@dagger.io/dagger")).connect

  connect(
    async (client) => {
      // get reference to the local project, excluding node_modules
      const source = client
        .host()
        .directory(".", { exclude: ["node_modules/"] })

      // get Node image
      const node = client.container().from("node:16")

      // mount the local project into the Node image and install dependencies
      const runner = node
        .withDirectory("/src", source)
        .withWorkdir("/src")
        .withExec(["npm", "install"])

      // run tests
      await runner.withExec(["npm", "test", "--", "--watchAll=false"]).sync()

      // build application and write the build output to the host
      await runner
        .withExec(["npm", "run", "build"])
        .directory("build/")
        .export("./build")
    },
    { LogOutput: process.stderr }
  )
})()
```

The revised code now does the following:

- It creates a Dagger client with connect() as before.

- It uses the client's host().directory(".", { exclude: ["node_modules/"] }) method to obtain a reference to the current directory on the host and stores it in the source variable. Because node_modules/ is passed in the exclude list, that directory on the host is ignored.

- It uses the client's container().from() method to initialize a new container from a base image. This base image is the Node.js version to be tested against - the node:16 image. This method returns a new Container object with the results.

- It uses the Container.withDirectory() method to write the host directory into the container at the /src path, the Container.withWorkdir() method to set the working directory in the container to that path, and the Container.withExec() method to run npm install there. The revised Container is stored in the runner constant.

- It uses the Container.withExec() method to define the command to run tests in the container - in this case, the command npm test -- --watchAll=false.

- It uses the Container.sync() method to execute the command.

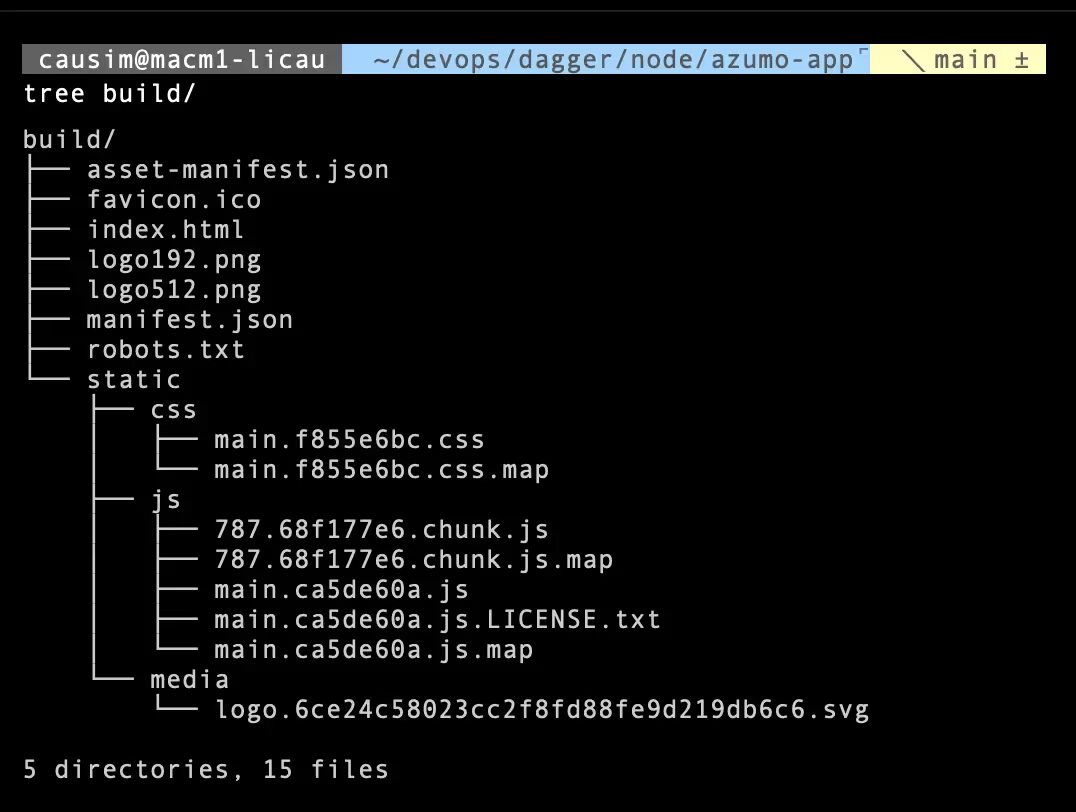

- It invokes the Container.withExec() method again, this time to define the build command npm run build in the container.

- It obtains a reference to the build/ directory in the container with the Container.directory() method. This method returns a Directory object.

- It writes the build/ directory from the container to the host using the Directory.export() method.

Run the Node.js CI tool by executing the command below:

```shell
dagger run node build.js
```

Step 4: Test Against Multiple Node.js Versions

/**

* Developer: Esteban Martin Li Causi

* Company: AZUMO LLC

*/

;(async function () {

// initialize Dagger client

let connect = (await import("@dagger.io/dagger")).connect

connect(

async (client) => {

// Set Node versions against which to test and build

const nodeVersions = ["14", "16", "18"]

// get reference to the local project

const source = client

.host()

.directory(".", { exclude: ["node_modules/"] })

// for each Node version

for (const nodeVersion of nodeVersions) {

// get Node image

const node = client.container().from(`node:${nodeVersion}`)

// mount cloned repository into Node image

const runner = node

.withDirectory("/src", source)

.withWorkdir("/src")

.withExec(["npm", "install"])

// run tests

await runner.withExec(["npm", "test", "--", "--watchAll=false"]).sync()

// build application using specified Node version

// write the build output to the host

await runner

.withExec(["npm", "run", "build"])

.directory("build/")

.export(`./build-node-${nodeVersion}`)

}

},

{ LogOutput: process.stderr }

)

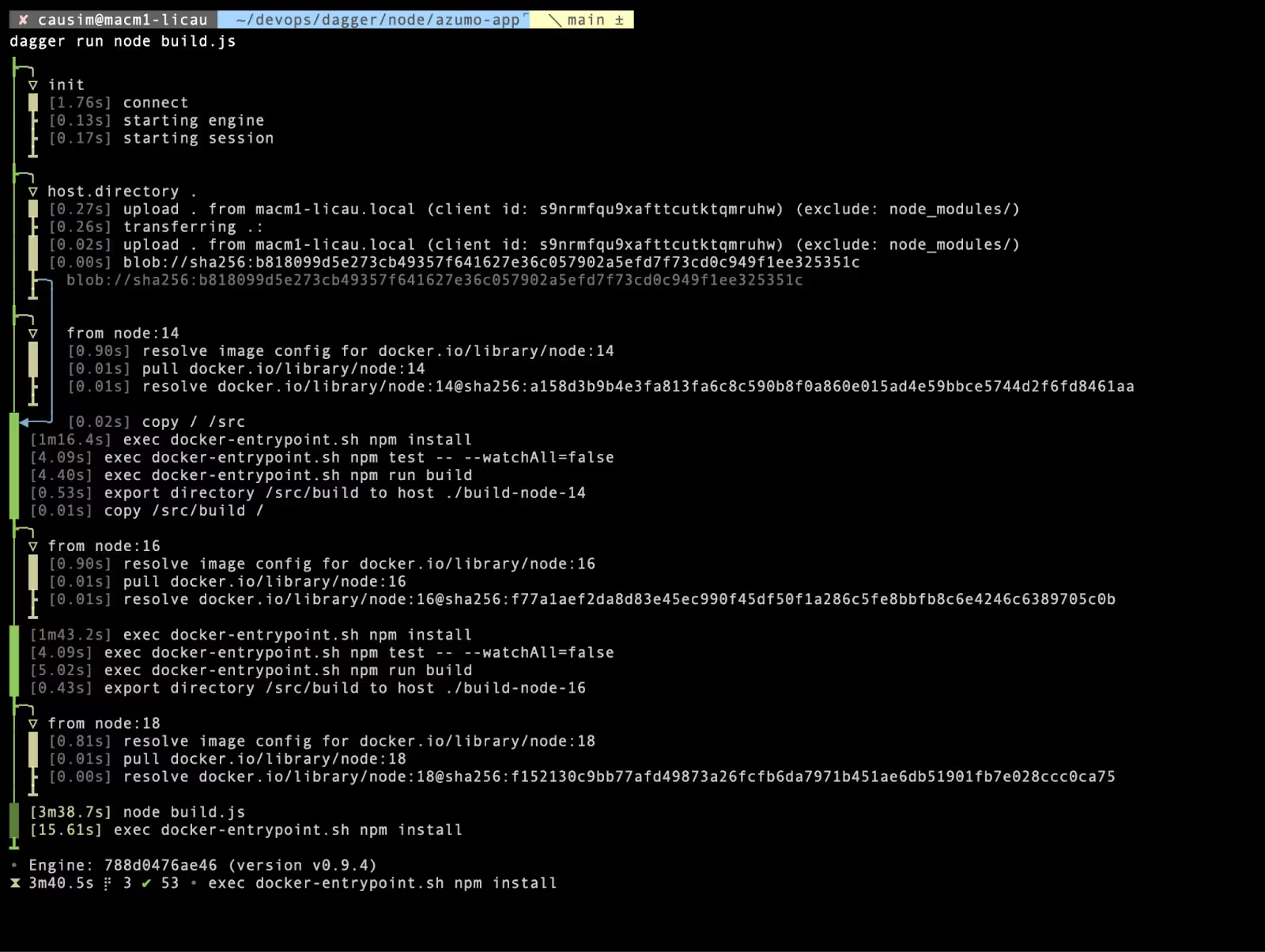

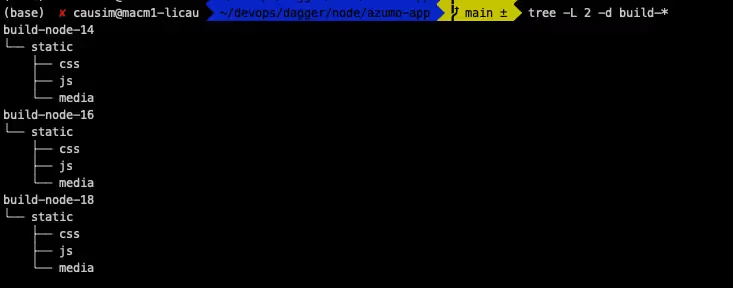

})()This version of the CI tool has additional support for testing and building against multiple Node.js versions.

- It defines the test/build matrix, consisting of Node.js versions 14, 16, and 18.

- It iterates over this matrix, downloading a Node.js container image for each specified version and testing and building the source application against that version.

- It creates an output directory on the host named for each Node.js version so that the build outputs can be differentiated.
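The loop above awaits each Node.js version before starting the next, so the versions are tested one after another. When the versions are independent, one alternative is to start them all and wait for every outcome with `Promise.allSettled`, so that a failure on one version does not hide the results of the others. The sketch below shows only that pattern: `testAndBuild` is a hypothetical stand-in that simulates work with a timer (and a simulated failure on one version, purely for illustration) instead of calling Dagger. How much the real pipelines overlap would depend on the engine and on host resources.

```javascript
// Hypothetical stand-in for the per-version Dagger pipeline from Step 4.
// It only simulates work and tracks how many runs overlap.
let active = 0;
let maxActive = 0;

async function testAndBuild(nodeVersion) {
  active++;
  maxActive = Math.max(maxActive, active);
  try {
    await new Promise((resolve) => setTimeout(resolve, 10)); // simulated work
    if (nodeVersion === "14") throw new Error("tests failed on Node 14"); // simulated failure
    return `./build-node-${nodeVersion}`;
  } finally {
    active--;
  }
}

// Start every version at once and report one outcome per version.
async function runMatrix(nodeVersions) {
  const outcomes = await Promise.allSettled(nodeVersions.map(testAndBuild));
  return nodeVersions.map((version, i) => ({
    version,
    ok: outcomes[i].status === "fulfilled",
    detail:
      outcomes[i].status === "fulfilled" ? outcomes[i].value : outcomes[i].reason.message,
  }));
}

runMatrix(["14", "16", "18"]).then((report) => {
  console.table(report);
  console.log(`max concurrent runs: ${maxActive}`); // max concurrent runs: 3
});
```

`Promise.all` would also run the versions concurrently, but it rejects on the first failure; `Promise.allSettled` keeps a complete per-version report, which is usually what a test matrix needs.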

Run the Node.js CI tool by executing the command below:

```shell
dagger run node build.js
```

Conclusion

In essence, Dagger empowers developers with a flexible, consistent, and platform-agnostic approach to running CI/CD pipelines:

- Boosting Flexibility: Dagger lets you write pipelines in familiar programming languages (Python, Node.js, Go) instead of the YAML definitions used by tools like GitHub Actions. This enables more intricate workflows and a more readable codebase.

- Ensuring Consistency: Local development and CI/CD execution leverage the same codebase, eliminating discrepancies and streamlining the development process.

- Promoting Platform Independence: Dagger is agnostic to the chosen CI/CD platform, reducing vendor lock-in and improving adaptability.

References

- https://archive.docs.dagger.io/0.9/145912/ci/

- https://dagger.io/blog/dockerfiles-with-dagger

- https://archive.docs.dagger.io/0.9/sdk/nodejs/783645/get-started
