Enhancing Search Capabilities using Term Similarity

Search engines are important tools that are used to find information on the internet. The accuracy and quality of search results can have a direct impact on user experience, bounce rate, and monetization. Therefore, it is crucial to optimize search engines to achieve the best possible results.

One way to improve the accuracy and quality of search results is by enhancing a documents' TF-IDF (Term Frequency - Inverse Document Frequency). We will show in this post that this can be done by making a few key changes that will allow for up to a 20% increase in results.

For example, when you are searching for an entity based on capabilities that it can offer you, a common practice is to perform a boost of the query based on synonyms. However, this can be improved by creating your own dictionary to enhance the search based on terms that belong to the same domain. With this new approach, we convert our search engine into a system that learns from the domains we are searching for, and enhances the queries using terms that the entities offer together, rather than simple synonyms.

We assume you already know how to set up a search engine based on TF-IDF like ElasticSearch, Solr, etc, and we are going to focus on creating a boost to improve the score of the query.

However, if you are not familiar with TF-IDF, it is a short for term frequency-inverse document frequency. It is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus.

The TF-IDF value increases proportionally to the number of times a word appears in the document and is offset by the number of documents in the corpus that contain the word, which helps to adjust for the fact that some words appear more frequently in general.

TF-IDF is one of the most popular term-weighting schemes today. A survey conducted in 2015 showed that 83% of text-based recommender systems in digital libraries use it.

Term Similarity

Text similarity is an important concept in natural language processing, which has many applications in the industry. Our challenge is to increase the power of the system based on text similarities, but these similarities are not related to their meaning, it is a domain similarity. To achieve this, we use word2vec and train an unsupervised learning model to obtain vector representations of words.

Let's give an example to clarify the idea:

Domain 1:

- marketing campaign

- graphics design

- social networks

Domain 2:

- soccer team

- balls

- shin guards

- football shirt

Domain “n”:

- term 1

- term 2

- …

- term “n”

In the above example, if we are looking for "marketing campaign" similarities, we would like to get "graphic design" or "social networks" as results.

We will also need to find similarities for terms that are not present in our training dataset. So if we search for "soccer", for example, we get "players" as a result. In each case thew domain informs the dataset and hence the results.

Generating the domains

Our research dataset consists of many documents drawn from companies data, their industries, as well as their web pages that include product and user information, and even plain text reviews of their service or product.

We clean the data as a first step, removing punctuations, HTML tags, URLs, stopwords, emojis, and all other useless information.

After cleaning up the text, we use Spacy to extract the entities we are interested in detailing. If you are trying to mimic our approach you could use a pre-trained NER (or train one on your own) to detect the terms that the user is likely to search on your engine.

In our case we trained a NER to detect the capabilities of a company, if you are interested in we could expand on this later, just ask in the comments.

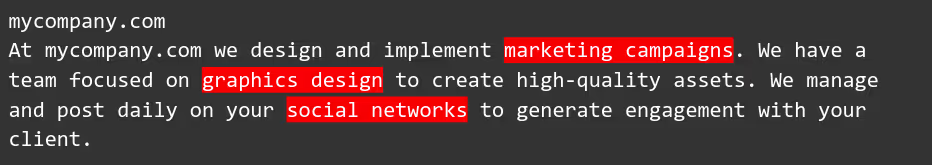

Basically, from a text like this:

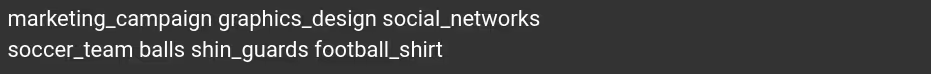

We create a file input_data.txt:

Working with terms instead of separate words

This trick is really simple and it is used in other libraries like sense2vec. To use terms instead of separate words, we need to replace the spaces with underscores, so when the word representation is generated, it will be recognized as a single word.

Example: “marketing campaign” becomes -> “marketing_campaign”

Which word2vec library to use?

There are two major word2vec libraries, Glove and FastText.

The key difference is Glove treats each word in the corpus like an atomic entity and generates a vector for each word. In this sense Glove is very much like word2vec - both treat words as the smallest unit to train on.

FastText is essentially an extension of the word2vec model that treats each word as composed of character ngrams. So the vector for a word is made of the sum of the character ngrams. This difference enables FastText to

- Generate better word embeddings for rare words (even if words are rare their character ngrams are still shared with other words - hence the embeddings can still be good)

- Out of vocabulary words - they can construct the vector for a word from its character ngrams even if the word doesn't appear in the training corpus. Both Glove and word2vec cannot

For our effort, we chose FastText as it is an open-source, free, lightweight library that allows users to learn text representations, and can return similarities of terms that are not present in our training dataset, by enriching our word vectors with subword information.

Choosing the model for computing word representations

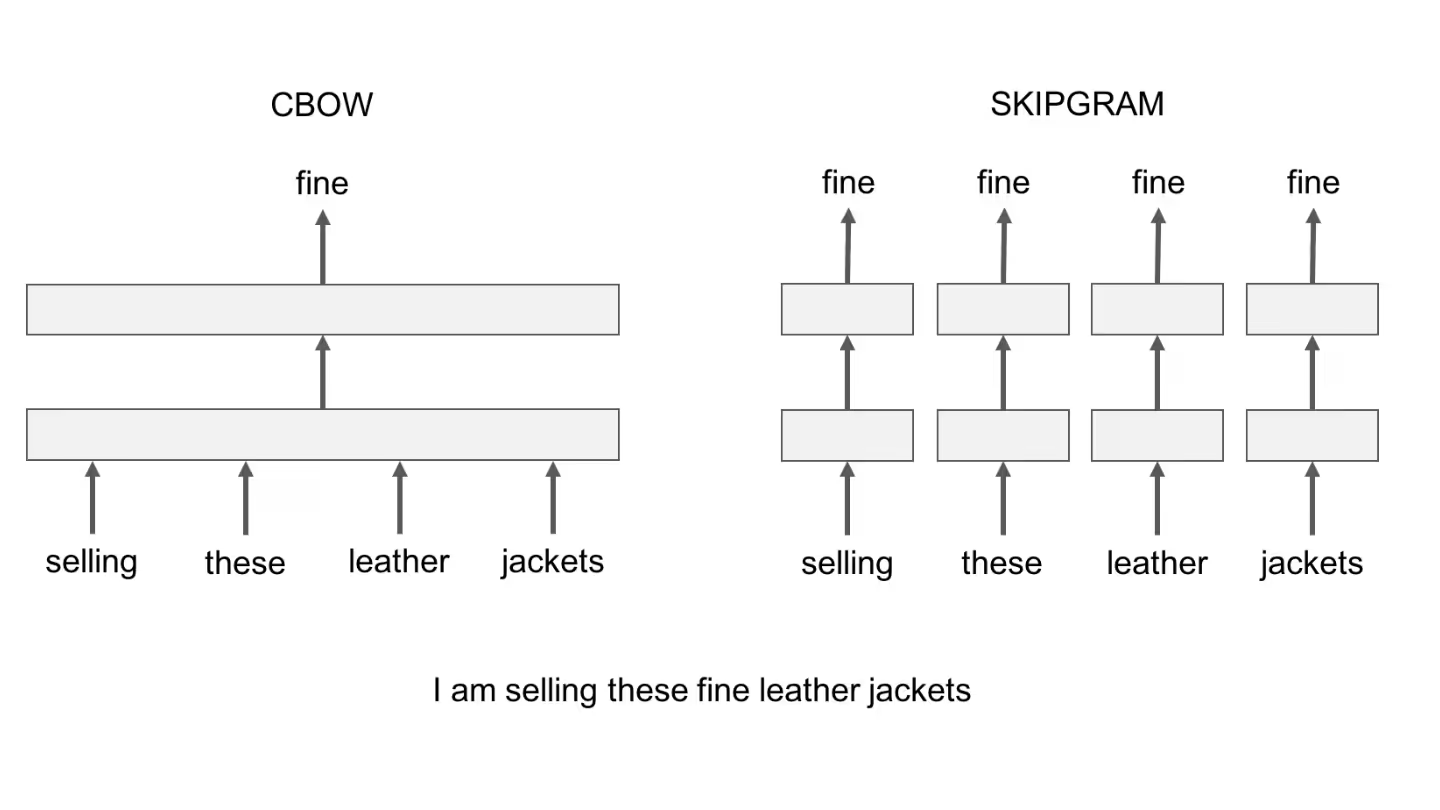

FastText provides two models:

- skipgram: learns to predict a target word thanks to a nearby word.

- cbow (continuous-bag-of-words): predicts the target word according to its context. The context is represented as a bag of words contained in a fixed size window around the target word.

Example:

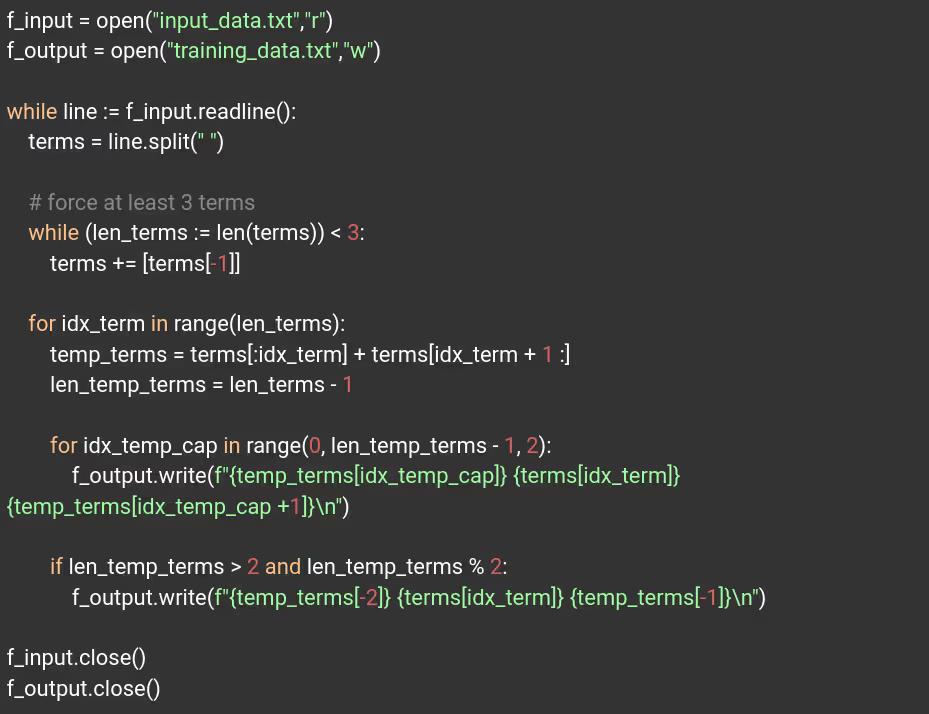

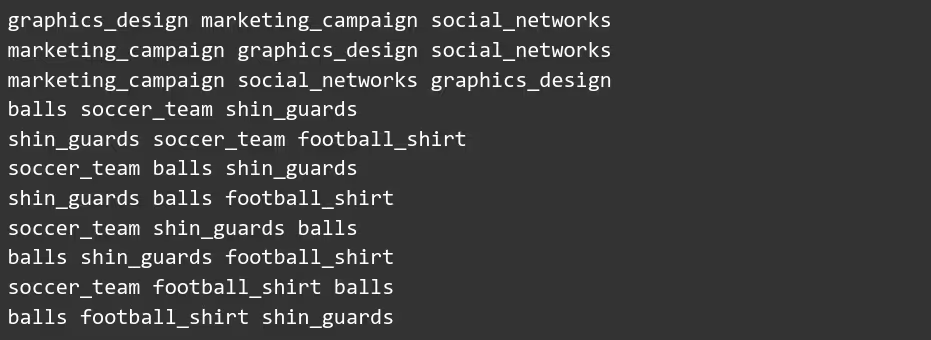

The “cbow” model suits our needs. It will generate the vectors based on the word context (nearest words), so to force this nearness we generated a file with multiple permutations of terms belonging to the same domain. Always with a length of 3, we must ensure that each term is in the middle between the neighbors of the domain.

Example: term_1 with 4 term domains

Sampling threshold

The main goal of sampling threshold (t) is to balance word weight based on its occurrence. During the training stage, words with a high occurrence will be discarded. This is useful to avoid common words like pronouns or articles take an important place in the model.

In our scenario every term has the same importance, so we need to omit this behavior, choosing value 1 we ensure that any word will be discarded because of a high occurrence.

Loss functions

There are two possible functions:

- Negative sampling (ns): is a way to sample the training data, similar to stochastic gradient descent, but the key is you look for negative training examples. Intuitively, it trains based on sampling places it might have expected a word, but didn't find one, which is faster than training an entire corpus every iteration and makes sense for common words

- Hierarchical softmax (hs): provides for an improvement in training efficiency since the output vector is determined by a tree-like traversal of the network layers; a given training sample only has to evaluate/update. This essentially expands the weights to support a large vocabulary - a given word is related to fewer neurons and visa-versa.

We have chosen “hs” because it seems to work better with infrequent terms.

Generate training data

Generate permutations:

Example of input_data.txt:

Example of training_data.txt:

Training FastText model

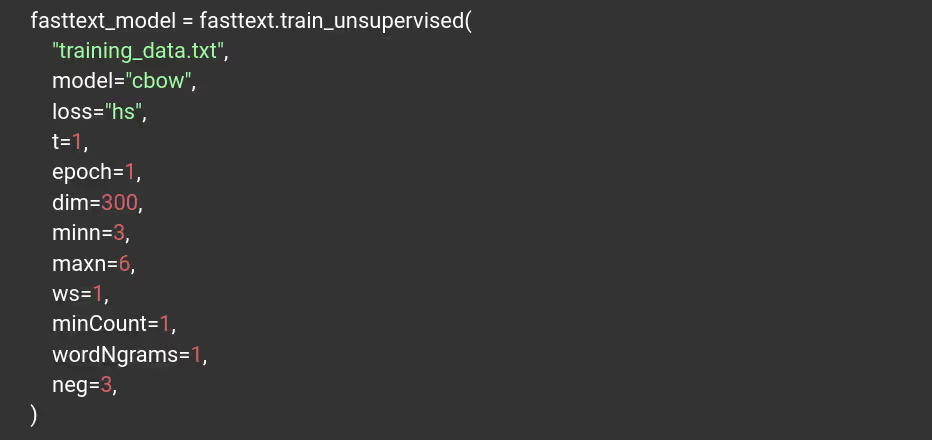

Unsupervised training with the following parameters:

- minCount: Minimum count for inclusion in vocab. We choose 1 to include every word in the vocab.

- dim: controls the size of the vectors.

- epoch: Number of times the FastText model will loop over your data. We choose one because it gives us a better result to avoid overfitting.

- ws: Set windows size. We choose 1 because we generated a training dataset of 3 terms for each line, forcing the middle term to have just 1 term on each side.

- Minn and maxn: Set min and max length of char ngram. We choose 3 and 6 because it gives the best result to predict terms outside the vocabulary.

- wordNgrams: Max length of word ngram. We choose 1 because we generated a training dataset of 3 terms for each line, forcing each term to consider 1 term near the target.

- neg: Number of negatives sampled. We choose 3 because it gives us the best result.

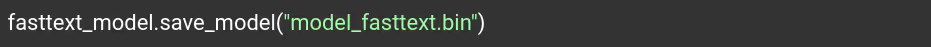

Saving the model:

Tests and results

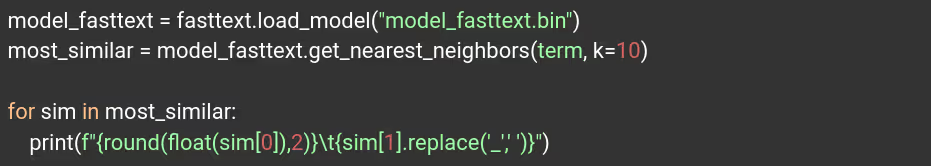

Similarities of “marketing_campaign”:

Similarities of “shin_guards”:

Similarities of “soccer_player”:

To summarize what these numbers mean, let's take the example of "marketing campaign" and define a threshold of 0.9. For this case, we would enhance our search using terms such as "graphics design" and "social networks", which gives us a much more specific and focused range for the type of entities that the search engine will provide us.

On the other hand, terms such as "soccer team" or "balls" are negative terms and should be avoided to enrich the query. We can also use this technique to disambiguate entities in a query. For example, if we have a query for "soccer player", it will give us relevant results related to that entity, such as "soccer team" and "balls". However, if we have a query for "shin guards", it will give us more relevant results related to that entity, such as "football shirt".

Implementing Our Search Engine Boost Approach

Everything we've done brings us to this point, now we're going to create a boost to improve the results of the query. We assume you have a search engine based on IT-IDF, in this example we use Elasticsearch.

We have created an index named “index-test” with a property named “text” of type “text”, which is populated with every document of the dataset as raw plain text.

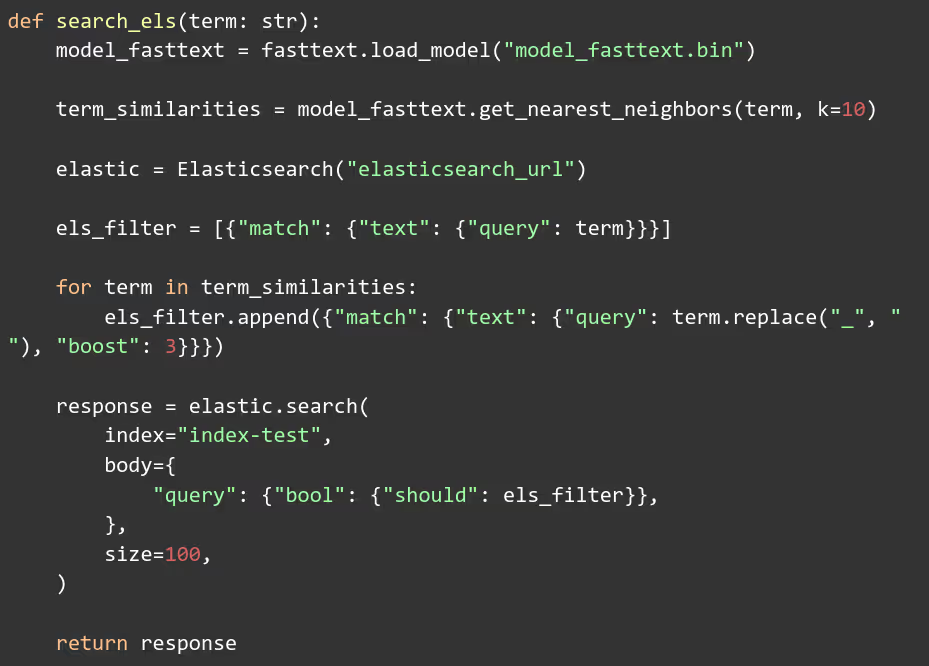

We use the following code to boost the query:

Performance Improvement

To measure how this implementation affects the final results, we need to define:

- Which entities do we expect as a result of a specific query. We do it based on categories that we had previously tagged. This allows us to categorize as "correct" or "incorrect" a result.

- The dataset of queries to compare the results. We created 100 different queries, and we define to use the top 20 results of each one to analyze their performance.

Without the booster, it gave us an average of 13.4/20 (67%) correct results. By adding the booster, the results went up to 17.5/20 (87.5%), more than 20% improvement.

Thinking about specific results, we were able to improve the number of significant results in a top 20 search from 13.4 to 17.5. In other words, four new relevant entities are shown to the final user.

Conclusion

We have seen how easy it is to create a word representation of similar terms without the requirement of having tons of data or horsepower to train the model. This is a powerful tool and could have multiple uses, in our case it was used as a boost to improve queries in Elasticsearch for an Azumo customer.

The project was developed by Alejandro Mouras and Maximiliano Teruel, with mentoring of Juan Pablo Lorandi.

.avif)